Chapter 1: Learn how pervasive consumer concerns about data privacy, unethical ad-driven business models, and the imbalance of power in digital interactions highlight the need for trust-building through transparency and regulation.

1. Pervasive Consumer Concerns


By merely existing, every individual leaves a trace of information. This principle is even more relevant in the digital world. Businesses have clearly taken advantage of this development by adapting and further evolving their business models: targeted advertising has become the primary business model in the digital economy. No other business model is, as yet, better suited to turning data as a commodity into revenue.

Consumers already know that their personal data is precious. Whenever digital giants like Google and Facebook publish their impressively growing revenues, their customers are left with the unpleasant feeling that this success is built at their expense without adequate compensation. This imbalance is likely to worsen with the inevitable, exponential growth of data.

While the collection and monetization of user data has become the primary revenue source, neither privacy-enhancing technologies nor the regulations governing data use have kept up with user needs and privacy preferences. To get access to almost essential, often “free” online services such as search or online social networking, users are forced to blindly trust in the fair and lawful processing of their data.

On the other hand, digital communication has empowered customers. They are more connected, more tech-savvy and better informed. The result is a more elusive customer base characterized by decreasing brand loyalty. Marketing strategies clearly have to adapt to this situation to remain effective. The digital economy and its data-driven business models will inevitably have to move towards truly trust-based customer relationships.

Explosive growth of data

The digital economy both drives and is driven by an explosive growth of data. But it is not the incomprehensible amount of available data that will impact our society. The real impetus lies in the potential insights that companies, individuals or governments derive from this new, vast and growing natural resource.

As shown in the figure below, interesting moments arise when different growth lines intersect. According to Moore’s law, which is still valid in 2015, processing power doubles roughly every 18 months. This is not only the reason why companies tend to present a new, fancier and more powerful laptop right after you bought yours; it also makes it possible to actually look into the data. The amount of available data grows at an even faster pace than processing power. In the past, there was not enough data available to evaluate effectively and to make good decisions. Today’s processing power, combined with new data storage and distributed processing paradigms (such as Hadoop), allows for evidence-based decision making.
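The two growth rates mentioned above can be made concrete with a little arithmetic. The following Python sketch computes how much a quantity grows under a fixed doubling period. The 18-month (1.5-year) doubling period for processing power is taken from the text; the one-year doubling period for data is a purely hypothetical value, chosen only to illustrate what it means for data to grow faster than processing power.

```python
# Sketch: exponential growth under a fixed doubling period.
# The 1.5-year doubling period for processing power follows the text;
# the 1.0-year doubling period for data is a hypothetical assumption.


def growth_factor(years: float, doubling_period: float) -> float:
    """Factor by which a quantity grows after `years` if it doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


def data_to_compute_ratio(years: float, data_doubling: float, compute_doubling: float) -> float:
    """Growth of data relative to growth of processing power after `years`."""
    return growth_factor(years, data_doubling) / growth_factor(years, compute_doubling)


if __name__ == "__main__":
    # Doubling every 18 months for 15 years means 10 doublings: 2**10 = 1024.
    print(growth_factor(15, 1.5))  # 1024.0
    # The gap widens over time if data doubles faster than compute.
    for years in (3, 6, 9):
        print(years, data_to_compute_ratio(years, data_doubling=1.0, compute_doubling=1.5))
```

Because both quantities grow exponentially, their ratio also grows exponentially: under these assumed rates, the gap between data and processing power doubles every three years.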

The other side of the coin is that we now have to decide which data is worth analyzing and, last but not least, which questions should be asked. This restriction can be described as a flashlight effect: companies or governments will only be able to see in the direction in which their analytics engines are pointed.

Furthermore, increasing processing power and the growth of data will lead to an even steeper curve of growing system complexity. Increasing system complexity requires more decentralized control mechanisms (Helbing, 2015). Today’s digital economy, however, shows characteristics of monopoly markets: Google and Facebook lead primarily through top-down control. Perhaps the change in Google’s governance structure with the creation of the Alphabet holding company is a first move in the inevitable direction towards decentralized and eventually self-regulating systems.

Digital transformation is a lengthy, ongoing process. So far, its results have often been rather unspectacular. The dynamic described above is about to change this.

The digital revolution is about to transform industries (again). The main focus of our economic activity is about to shift towards the information sector. In terms of the three-sector theory, a significant part of the workforce is moving from the secondary (manufacturing) and tertiary (services) sectors to a quaternary sector (the knowledge-based part of the economy). This means that many of today’s jobs, in particular those built on repetitive, procedure-based tasks, will be done by computers. Not only employees and companies but also governments and their regulatory frameworks need to adapt quickly to this new situation and prepare for the shift to the quaternary sector. To harness the potential of the digital revolution, the economy requires new infrastructure and rules. Confidence in a strong regulatory framework for data privacy is one of the key requirements. Trust would be a fundamental driver of self-organisation in our society.

The most popular business model in the digital economy is tailored advertising. The enormous amounts of data individuals leave behind when using online services allow for profiling and highly effective targeted advertisements. It is remarkably common for customers to have their data arbitrarily mined by a “free” service for the purposes of ad targeting. In the process, the true value of the personal data used is often neither considered nor compensated.

Consumers are increasingly aware of the value of their personal data. In addition, they realize that disclosing such data is associated with diverse risks (please refer to section “Privacy at Risk” for more details). It is quite disturbing that most users are not aware of how companies collect data and what this precious information is used for (please refer to the statistics at the end of the chapter). Closing this gap would require fair and transparent information practices. Instead, users often face insufficient privacy protection and are forced to blindly trust in the fair and lawful processing of their data.

Users are usually not given any option other than accepting the terms and conditions in order to access the desired service; the only alternative is to go without the service. In addition, it is no secret that individuals often access sites and use services without reading these terms and conditions (picture source: TV documentary, tacma.net, 2013). This situation does not satisfy the requirement of informed consent. The principle of consent is widely seen as a key mechanism for building trusted relationships and, eventually, for enabling user-centric data management.

Whilst in earlier times control over personal data may have been undertaken by preventing the data from being disclosed, in an internet enabled society it is increasingly important to understand how disclosed data is being used and reused and what can be done to control this further use and reuse (Whitley 2009, 155).

This “balancing act” between competing business interests and privacy requirements will become increasingly important in the near future. Companies across industries have started to collect vast amounts of user data, and some have even started to analyse this data and act on it. However, actions beyond targeted advertising come with a serious reputational risk. Just think of your personal perception of the trustworthiness of brands like Google and Apple. Apple seems to have less appetite for your private data than Google, which summarized its code of conduct in the words “Don’t be evil”. Is this subjective perception likely to change with Apple’s data-driven concepts such as HealthKit?

Today’s ad-centric business models will continue to evolve and to produce interesting innovations. Recent noteworthy innovations all have in common that they replace human-based methods and rely heavily on user data:

Probably the most controversial discussion surrounds Facebook’s world-famous Like button. Mark Zuckerberg has rightly praised the button as Facebook’s most iconic feature and one of its most important innovations. Introduced in February 2009, the Like button is clicked more than 3.2 billion times each day. However, it is not the widespread use of this icon that raises privacy concerns. It is the fact that the button, regardless of the page on which it sits, calls home to Facebook with information about the visit, compromising users’ privacy even if they never press it. This makes the Like button an innovation that actually breaches most of the data protection regulations in Switzerland and Europe.

Shared endorsements are a very popular form of targeted advertising. Platforms such as Facebook and Google pull users’ likes, +1s and reviews (e.g. from Google Maps and Google Play) and turn them into advertisements shown to their connections. Ads often display the user’s profile picture to strengthen the effect of a personal recommendation. This form of advertising is highly questionable because users do not know when and how these endorsements are actually displayed. By agreeing to the terms and conditions, users only learn that this can and will happen at some point and in some form. Users can personally opt out of shared endorsements on Google and Facebook.

Another advance in online advertising technology is Programmatic Buying, which automates current online advertising processes. It goes beyond Real-Time Bidding, which sells advertising spots to the highest bidder in an auction system. With Real-Time Bidding, a buyer can set and adjust parameters such as bid price and network reach. A programmatic buy layers these parameters with behavioral or audience data, all within the same platform. This innovation will increase the importance of ad networks and therefore intensify data tracking efforts.
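The difference between plain Real-Time Bidding and a programmatic buy can be sketched in a few lines of Python. This is a toy model under stated assumptions: the class names, fields and example values are hypothetical, and the auction simply awards the impression to the highest eligible bid, following the text's "highest offer" description (real ad exchanges use a variety of auction rules, historically often second-price). The programmatic layer is modelled as an extra filter on audience segments, i.e. on the behavioral data that drives tracking.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BidRequest:
    """An ad impression offered for sale (hypothetical fields)."""
    impression_id: str
    network: str
    audience_segments: set[str] = field(default_factory=set)


@dataclass
class Campaign:
    """A buyer's parameters for one campaign (hypothetical fields)."""
    buyer: str
    bid_price: float
    networks: set[str]  # network reach
    # Programmatic layer: audience segments to target. Empty means no
    # audience filter, i.e. plain Real-Time Bidding on price and reach.
    target_segments: set[str] = field(default_factory=set)

    def is_eligible(self, request: BidRequest) -> bool:
        if request.network not in self.networks:
            return False
        if self.target_segments and not (self.target_segments & request.audience_segments):
            return False
        return True


def run_auction(request: BidRequest, campaigns: list[Campaign]) -> Optional[tuple[str, float]]:
    """Sell the impression to the highest eligible bid; return (buyer, price) or None."""
    bids = [(c.bid_price, c.buyer) for c in campaigns if c.is_eligible(request)]
    if not bids:
        return None
    price, buyer = max(bids)  # ties are broken by buyer name
    return buyer, price


if __name__ == "__main__":
    request = BidRequest("imp-1", "news-network", {"runners", "age-25-34"})
    campaigns = [
        Campaign("shoe-brand", 2.50, {"news-network"}, {"runners"}),
        Campaign("car-brand", 3.00, {"news-network"}, {"car-buyers"}),
        Campaign("bank", 1.20, {"news-network"}),
    ]
    # car-brand bids highest, but its audience filter excludes this user.
    print(run_auction(request, campaigns))  # ('shoe-brand', 2.5)
```

The example shows why audience data matters to buyers: the highest bid does not win if the buyer's behavioral targeting rules out the user, which in turn rewards ad networks that know more about each user.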

Advanced direct marketing technology makes it possible to serve adverts and all other messages individually. Communication is becoming ever more targeted. If an initial attempt to deliver a message is not successful, companies try again by re-targeting the same individual on the next occasion. Consumers will only receive adverts that have been nuanced to reflect the way they see the world. In combination with advanced analytics, this set of innovations will have unwanted side effects. It can lead to a filter bubble (users become separated from information that disagrees with their viewpoints, effectively isolating them in their own cultural or ideological bubbles) and can even help questionable political candidates to win elections.

Today’s ad-centric business models, with their mechanisms for behavioral tracking and aggregation of personal data, result in a steady erosion of privacy and therefore provoke pervasive customer concerns. It is essential to critically rethink digital business models and to eventually include a realistic value proposition for the use of personal data. Personal data will continue to be recognized as a source of macroeconomic growth. It is therefore critical to find solutions that both protect privacy and unlock value.

The described innovations in digital marketing indicate that the privacy of online users is at risk. In fact, the word privacy is misleading when applied to online user activity. There is no such thing as true Internet anonymity, and therefore true privacy is a myth as well. A good understanding of current online analytics practices and their direction of development is required to grasp the extent of the risk an Internet user takes when participating in online transactions.

Analytics can be described as the practice of capturing, managing and analysing data to drive business strategy and performance. Businesses have developed very sophisticated approaches and solutions to turn data into insights and eventually into smarter decisions. At a lower maturity level, analytics capabilities deliver mostly descriptive results; this is the domain of traditional management reporting. Further insights are generated through classic ex-post analysis. At these levels of maturity, the focus lies mainly on understanding structured data. Processing and analysing data in real time allows for effective monitoring. This maturity level has already been widely adopted by many online businesses; it makes it possible, for example, to listen to conversations on social media “as they happen”. The highest maturity level of analytics describes practices that look ahead: predictive analytics draws on simulation and modeling as well as sophisticated optimization algorithms.

The fact that analytics capabilities are becoming ever more powerful supports the hypothesis that true privacy on the Internet no longer exists. Even if a service provider guarantees privacy, users bear a certain risk that their personal data is mined and linked to their identity at a later stage. Modern analytics may inadvertently make it possible to re-identify individuals across large data sets. The number of data points that can be used to build a rich user profile is virtually unlimited. An insurance company can, for example, monitor the characteristics of a prospect’s keyboard usage (typing speed and moments of hesitation, typing pressure, and the use of lowercase versus uppercase letters), which can reveal relevant information: hesitation may indicate limited decisiveness and therefore hint at a customer’s willingness to pay. Individuals can often be re-identified from anonymised data because their personal data (especially critical data such as geo-locations) narrows down the possible combinations until only one individual remains. Behavioral psychologists have come a long way in analyzing and profiling users based on their digital traces.
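The narrowing-down effect behind re-identification can be measured with k-anonymity, a standard measure: a data set is k-anonymous if every combination of quasi-identifiers (attributes such as location, birth year or gender that are not names but can be linked to people) is shared by at least k records. The Python sketch below uses a small, entirely hypothetical "anonymised" data set, with a postcode standing in for location data, to show how combining just a few attributes makes most records unique.

```python
from collections import Counter

# Hypothetical "anonymised" records: names removed, but quasi-identifiers
# (postcode as a coarse location, birth year, gender) kept.
RECORDS = [
    {"postcode": "8001", "birth_year": 1980, "gender": "F", "diagnosis": "asthma"},
    {"postcode": "8001", "birth_year": 1980, "gender": "F", "diagnosis": "flu"},
    {"postcode": "8002", "birth_year": 1975, "gender": "M", "diagnosis": "diabetes"},
    {"postcode": "8003", "birth_year": 1990, "gender": "F", "diagnosis": "migraine"},
    {"postcode": "8003", "birth_year": 1990, "gender": "M", "diagnosis": "flu"},
]


def group_sizes(records, quasi_identifiers):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)


def k_anonymity(records, quasi_identifiers):
    """Size of the smallest group; k = 1 means at least one record is unique."""
    return min(group_sizes(records, quasi_identifiers).values())


def unique_records(records, quasi_identifiers):
    """Records whose combination occurs only once and could be linked to one person."""
    sizes = group_sizes(records, quasi_identifiers)
    return [r for r in records if sizes[tuple(r[q] for q in quasi_identifiers)] == 1]


if __name__ == "__main__":
    for qis in (["gender"], ["postcode"], ["postcode", "birth_year", "gender"]):
        print(qis, "k =", k_anonymity(RECORDS, qis), "unique:", len(unique_records(RECORDS, qis)))
```

With gender alone no record is unique (k = 2); with postcode, birth year and gender combined, three of the five records are unique, so anyone who knows those three facts about a person can read off that person's diagnosis.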

An interesting personality test, which predicts a user’s personality based on their Facebook likes, has been developed by a joint team from Cambridge University and Stanford University. Michal Kosinski and his colleagues even claim that computers are more accurate than humans at judging others’ personality (Youyou et al. 2014). If you have an active Facebook account, feel free to take this personality test yourself. The test uses a snapshot of your digital footprint to visualize how others perceive you online. You may take additional psychometric tests of self-perception to assess the accuracy of this test.
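To give an intuition for how a computer can score personality from likes, the following Python sketch shows a generic linear scoring model over binary "liked / not liked" indicators. It is an illustration only, not the model used by Youyou et al.: the page names, the trait and all weights are invented, and a real supervised model would have to learn its weights from many users' likes together with their questionnaire results.

```python
# Hypothetical weights: how much liking each (invented) page shifts a
# predicted "openness" score. A supervised model would learn such weights
# from training data; these numbers are made up for illustration.
WEIGHTS = {
    "philosophy_page": 0.75,
    "modern_art_page": 0.5,
    "travel_page": 0.25,
    "reality_tv_page": -0.5,
}
INTERCEPT = 0.0


def predict_trait(liked_pages: set[str]) -> float:
    """Linear prediction: intercept plus the weight of each liked page; unknown pages count as 0."""
    return INTERCEPT + sum(WEIGHTS.get(page, 0.0) for page in liked_pages)


if __name__ == "__main__":
    print(predict_trait({"philosophy_page", "travel_page"}))   # 1.0
    print(predict_trait({"reality_tv_page", "unknown_page"}))  # -0.5
```

Each individual like says little on its own, but a model of this kind adds up many small signals, which is why a large digital footprint can produce a surprisingly confident profile.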

Online privacy is at risk. As a natural reaction to the developments described above, consumer concerns regarding the provision of personal data are increasing. However, the motivation of online businesses to protect users’ privacy and to act as advocates for their customers is often surprisingly low. It is usually limited to pursuing marketing objectives such as avoiding reputational risks. Businesses will have to change their attitude in order to be successful in the future. Economists would label privacy a merit good, and the market for merit goods is an example of an incomplete market. This is why a strong regulatory framework is essential.

Customers face a diverse bouquet of risks regarding the use of their data. As we will see in chapter 3, drawing on prospect theory, users’ perception of these risks strongly influences their decision making online. While the probability of these privacy risks occurring is perceived as quite low, their potential impact is grave. A quantification of perceived risk therefore depends on two major components: the impact of a certain transaction and the perceived uncertainty about the likelihood of a particular transaction outcome (Plötner, 1995). Hence, trust is a function of risk and relevance. When facing a highly relevant interaction target, a user’s level of trust is supposed to rise with increasing risk (Koller, 1997). For example, the goal of getting well leads a patient to develop a high level of trust towards a surgeon.
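As a rough illustration of the two components named above, the Python sketch below scores perceived risk as the product of perceived impact and perceived likelihood. The multiplicative combination, the 0-10 impact scale and all example risks and values are assumptions made for illustration; they are not Plötner's model. Prospect theory, discussed in chapter 3, also suggests that people do not weigh probabilities linearly, so this is at best a first approximation.

```python
from dataclasses import dataclass


@dataclass
class PrivacyRisk:
    name: str
    impact: float      # perceived severity on an arbitrary 0-10 scale (assumption)
    likelihood: float  # perceived probability of occurrence, between 0 and 1

    def perceived_risk(self) -> float:
        # Simplifying assumption: the two components are combined multiplicatively.
        return self.impact * self.likelihood


def rank_risks(risks: list[PrivacyRisk]) -> list[PrivacyRisk]:
    """Order risks from highest to lowest perceived risk."""
    return sorted(risks, key=lambda r: r.perceived_risk(), reverse=True)


if __name__ == "__main__":
    # Hypothetical example values.
    risks = [
        PrivacyRisk("identity theft", impact=10.0, likelihood=0.05),
        PrivacyRisk("unwanted spam", impact=1.0, likelihood=0.4),
        PrivacyRisk("price discrimination", impact=4.0, likelihood=0.05),
    ]
    for risk in rank_risks(risks):
        print(f"{risk.name}: {risk.perceived_risk():.2f}")
```

Under these assumed values, a rare but grave event such as identity theft still ranks above a frequent minor nuisance, which matches the pattern described in the text: low perceived probability does not make a privacy risk negligible when its impact is severe.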

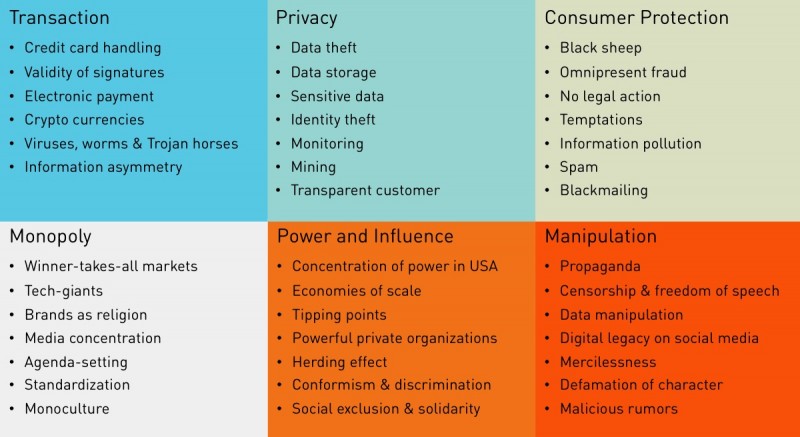

Beyond these personal risks, the discussion about the use of personal data also raises various superordinate, often ethical, risks. The groundbreaking success of electronic commerce and the emergence of disruptive technologies imply new types of risk.

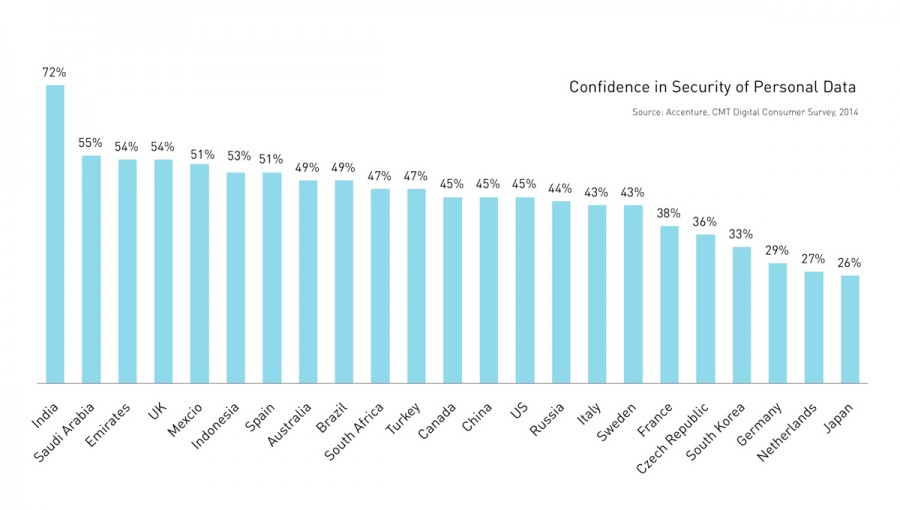

As we are going to learn in chapter 3 “Understanding Digital Trust”, the disposition to trust, and therefore the acceptability of data use, strongly depends on cognitive constructs that are individual to the trusting person. Interestingly, variances in these dispositions can also be observed at an aggregate level. A broad body of research identifies significant differences between countries in the acceptability of data use and in confidence in the security of personal data. A recent study by Accenture shows that India is probably the country with the highest confidence in the use of personal data, whereas Japan is very reluctant to extend trust (Accenture, 2014). Other studies emphasize that these cultural differences also depend heavily on the context of data usage (WEF, 2014). China and India tend to trust digital service providers more than the USA or Europe do, even without knowledge of the data use, the service provider itself or the underlying value proposition.

Within the scope of a research project on big data and privacy issues, the White House solicited public input via a short web survey (Whitehouse, 2014). Although the results cannot be considered statistically representative, they still show general attitudes about data privacy. Respondents expressed a surprisingly great deal of concern about big data practices. Most significant are the strong feelings about ensuring that data practices are transparent and controlled through legal standards and oversight.

[Figure: pie charts indicating the percentage of respondents who are very concerned about each aspect of data practice: legal standards & oversight; transparency about data use; data storage & security; collection of location data.]

In light of the outrageous disclosures about the practices of the NSA and other governmental institutions, it is not surprising that the majority of respondents to the White House survey trust intelligence and law enforcement agencies “not at all”. On the positive side, majorities were generally trusting of how professional practices (such as law and medical offices) and academic institutions use and handle data.

[Figure: progress bars indicating the percentage of respondents who do not trust each entity at all.]

These findings illustrate that businesses need to act. Privacy concerns are likely to increase with the economy’s ever-growing appetite for data, and building a trusted relationship with consumers will be key. To reach this goal, companies must provide a clear value proposition (please refer to the details about the trust clue “reciprocity” in chapter 3). The digital economy has a good chance of eventually moving closer to its customer base: the Accenture study also shows that about two-thirds of consumers globally are willing to share additional personal data with digital service providers in exchange for additional services or discounts (Accenture, 2014). However, sharing personal data with third parties remains a critical pitfall for engendering trust.

[Figure: willingness to share additional personal data in exchange for additional services or discounts.]