Chapter 1: Learn how pervasive consumer concerns about data privacy, unethical ad-driven business models, and the imbalance of power in digital interactions highlight the need for trust-building through transparency and regulation.

Chapter 8: Learn how AI’s rapid advancement and widespread adoption present both opportunities and challenges, requiring trust and ethical implementation for responsible deployment. Key concerns include privacy, accountability, transparency, bias, and regulatory adaptation, emphasizing the need for robust governance frameworks, explainable AI, and stakeholder trust to ensure AI’s positive societal impact.

The rapid advancement and widespread adoption of artificial intelligence (AI) mark a pivotal moment in human technological evolution. As a general-purpose technology, AI’s transformative impact extends across industries and deeply penetrates everyday life. Recent research by McKinsey & Company (2024) indicates that 72% of organizations have already implemented at least one AI technology, highlighting the technology’s pervasive influence in contemporary society.

As Levin (2024) articulates in his groundbreaking work on diverse intelligence, the fundamental nature of change and adaptation suggests that persistence in current forms is not only impossible but potentially undesirable. Levin’s research at Tufts University emphasizes AI’s potential role as a bridge toward understanding and developing diverse forms of intelligence, which could prove crucial for humanity’s future development. However, the rapid integration of AI technologies into societal frameworks brings significant challenges for trust and ethical implementation. Holweg et al. (2022) note a concerning trend: as AI becomes more prevalent, instances of applications that violate established social norms and values have increased proportionally. This observation underscores the critical importance of developing robust frameworks for digital trust in AI systems.

Developing digital trust in AI systems requires a multi-faceted approach that considers technical reliability, ethical implications, and societal impact. This includes establishing transparent frameworks for AI development, implementing robust safety measures, and ensuring equitable access to AI benefits across society. Users and stakeholders should have access to clear explanations of how AI systems operate and make decisions. This can be achieved through techniques such as explainable AI (XAI), which aims to make AI decision-making processes interpretable to humans (Arrieta et al., 2020). The challenge lies not merely in advancing AI capabilities but in doing so in a manner that maintains and strengthens social trust while promoting responsible innovation.

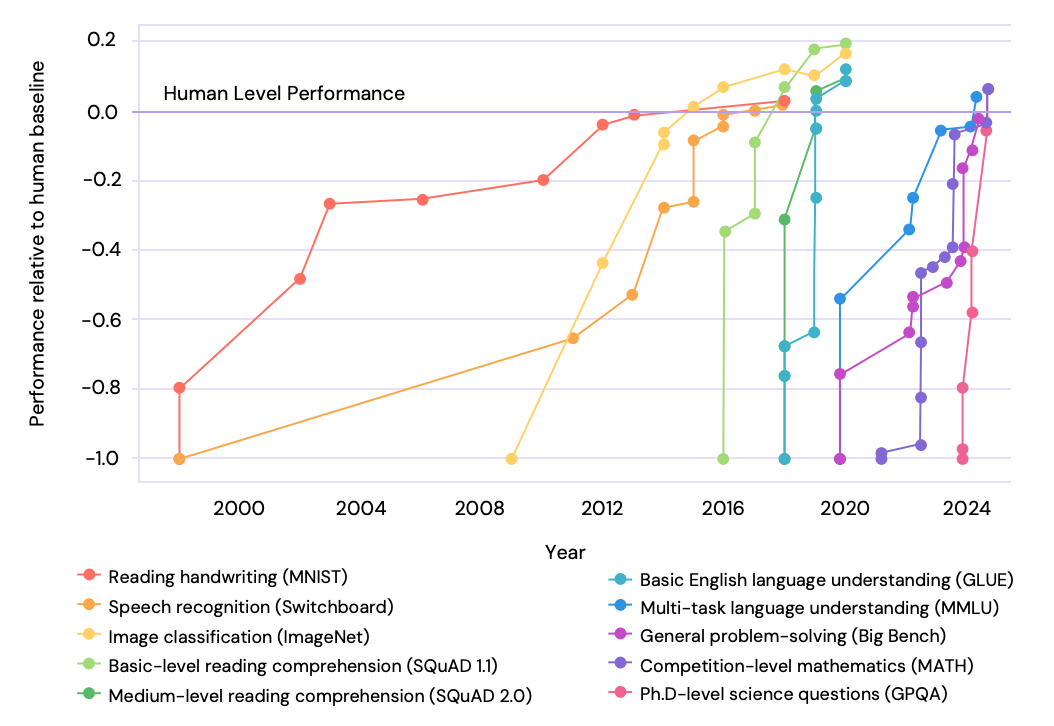

The performance trajectory of AI models offers both promise and reason for pause. Recent comprehensive analyses (Bengio et al., 2024) demonstrate remarkable progress in AI capabilities across various benchmarks from 1998 to 2024. Particularly noteworthy is the rapid progression from relatively poor performance to surpassing human expert levels in specific domains. This acceleration in capabilities, while impressive, heightens the urgency of addressing trust-related concerns. As Hassabis (2022) emphasizes, AI’s beneficial or harmful impact, like that of any powerful technology, ultimately depends on societal implementation and governance. Earlier, Kiela et al. (2021) documented similar improvements in AI performance across multiple benchmarks, including MNIST, Switchboard, ImageNet, and various natural language processing tasks.

The performance of AI models on various benchmarks has advanced rapidly. It is important to note that some earlier results used machine learning AI models that are not general-purpose models. On some recent benchmarks, models progressed within a short period from having poor performance to surpassing the performance of human subjects who are often experts (Bengio et al., 2024; Bengio et al., 2025). This rapid evolution from specialized systems to more general-purpose AI models marks a significant milestone in artificial intelligence development, warranting careful consideration of its implications.

The advancement of general-purpose AI systems faces multiple possible trajectories, from slow to extremely rapid progress, with expert opinions and evidence supporting various scenarios (Bengio et al., 2025). Recent improvements have been primarily driven by exponential increases in compute (4x/year), training data (2.5x/year), and energy usage (3x/year), alongside the adoption of more effective scaling approaches such as chain-of-thought reasoning. While it appears feasible for AI developers to continue exponentially increasing resources for training through 2026 (reaching 100x more training compute than 2023) and potentially through 2030 (reaching 10,000x more), bottlenecks in data, chip production, financial capital, and energy supply may make maintaining this pace infeasible after the 2020s. Policymakers face the dual challenge of monitoring these rapid advancements while developing adaptive risk management frameworks that can respond effectively to increasing capabilities and their associated risks.
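The projected multipliers follow from compounding an annual growth rate. The sketch below is a back-of-the-envelope check only, assuming a constant 4x/year growth in training compute from a 2023 baseline (a simplification; the published projections are not necessarily derived this way), with each result rounded to the nearest order of magnitude.

```python
import math

def growth_multiplier(rate_per_year: float, years: int) -> float:
    """Total multiplier after compounding a constant annual growth rate."""
    return rate_per_year ** years

def nearest_order_of_magnitude(x: float) -> int:
    """Power of ten closest to x on a logarithmic scale."""
    return 10 ** round(math.log10(x))

COMPUTE_GROWTH = 4.0  # assumed constant 4x/year, as cited above
BASE_YEAR = 2023

for target_year in (2026, 2030):
    years = target_year - BASE_YEAR
    m = growth_multiplier(COMPUTE_GROWTH, years)
    print(f"{target_year}: {m:,.0f}x (~{nearest_order_of_magnitude(m):,}x)")
# Prints:
# 2026: 64x (~100x)
# 2030: 16,384x (~10,000x)
```

Three years at 4x/year gives 64x and seven years gives 16,384x; rounded to the nearest power of ten, these match the roughly 100x and 10,000x figures cited above.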

1. New worlds, new problems

The emergence of AI agents introduces a new layer of complexity in how users navigate and establish trust in their digital interactions (Glinz, 2024). This complexity manifests through novel sub-systems that mediate user-AI interactions (Lukyanenko et al., 2022) and mechanisms that govern trust relationships between users and artificial agents (Lee & See, 2004; Tschopp & Ruef, 2020).

In response to these challenges, the iceberg.digital project aims to promote sustainable data access and develop trustworthy AI systems that users can confidently rely upon daily.

The Three Critical Pitfalls of Artificial Intelligence: Privacy, Explainability, and Algorithmic Bias

As AI continues to advance at an unprecedented rate, regulatory frameworks struggle to keep pace with its associated risks, as acknowledged in the Bletchley Declaration. This analysis examines three critical pitfalls that pose significant challenges to the responsible development and deployment of AI systems: privacy intrusion, lack of explainability, and algorithmic bias. Understanding these challenges is crucial for developing effective mitigation strategies and ensuring the responsible advancement of AI technology. Explainability in particular matters for adoption: high perceived explainability has been found to significantly bolster trust (Hamm et al., 2023).


Privacy Intrusion

Privacy, defined as “the state of being undisturbed by others and shielded from public exposure or attention” (Wehmeier, 2005), represents the most prevalent concern in AI implementation. According to Holweg et al. (2022), privacy breaches account for 50% of AI failure cases in their analysis of 106 controversial incidents.

The privacy challenge manifests through two distinct yet interconnected issues: consent for data usage and consent for specific purposes. Organizations often face the temptation of “data creep” – utilizing all available data regardless of user consent – and “scope creep” – neglecting to obtain explicit consent for specific data applications. This dual challenge is particularly pressing in an era where data collection and processing capabilities expand exponentially. The interconnected nature of modern AI systems means that data collected for one purpose can easily be repurposed for other applications, often without users’ knowledge or explicit consent.
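The distinction between data creep and scope creep can be made concrete as two separate checks at the point of use. The sketch below is a minimal illustration, assuming a hypothetical `ConsentRecord` that stores the data categories and purposes a user has agreed to; real consent management must also handle withdrawal, expiry, and legal bases other than consent.

```python
from dataclasses import dataclass, field

@dataclass
class ConsentRecord:
    """Hypothetical record of what a user agreed to."""
    data_categories: set[str] = field(default_factory=set)
    purposes: set[str] = field(default_factory=set)

def check_use(consent: ConsentRecord, categories: set[str], purpose: str) -> list[str]:
    """Return the consent problems raised by a proposed data use (empty list = OK)."""
    issues = []
    unconsented = categories - consent.data_categories
    if unconsented:
        # Data creep: using data the user never agreed to share.
        issues.append(f"data creep: {sorted(unconsented)}")
    if purpose not in consent.purposes:
        # Scope creep: reusing data for a purpose the user never agreed to.
        issues.append(f"scope creep: purpose '{purpose}' not consented")
    return issues

consent = ConsentRecord({"email", "purchase_history"}, {"order_fulfilment"})
print(check_use(consent, {"purchase_history"}, "order_fulfilment"))  # []
print(check_use(consent, {"purchase_history", "location"}, "ad_targeting"))
```

Keeping the two checks separate mirrors the two consent questions above: a use can pass the data check and still fail the purpose check.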

Despite these concerns, a 2023 global survey of over 17,000 participants revealed that 85% believe AI can bring various benefits. However, this optimistic outlook is tempered by growing concerns about data protection and intellectual property rights. The rapid advancement of AI capabilities has created a complex landscape where existing laws struggle to address questions of liability and accountability among AI developers, end users, and implementing organizations. This legal uncertainty is particularly problematic in the context of intellectual property rights, where the boundaries between human and AI-generated content become increasingly blurred.

Algorithmic Bias

Another critical pitfall involves algorithmic bias, where machine learning systems perpetuate and potentially amplify existing human biases. In Holweg et al.’s (2022) analysis, this issue accounts for 30% of AI failures. Eder (2018) notes that algorithmic bias emerges when training data contains inherent human biases, including political, racial, and gender prejudices, resulting in outputs that reflect these skewed perspectives.

The challenge is particularly complex because algorithms lack the cognitive understanding necessary to grasp the contextual nature of protected variables such as age, race, gender, and sexual orientation. This limitation has led to high-profile controversies, such as issues with Amazon’s Rekognition technology and Google’s military AI applications. The problem is exacerbated by what researchers term “model creep,” in which bias emerges when customer preferences shift but machine learning models are not retrained, leading to increasingly outdated and potentially biased predictions.
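Model creep is usually caught by monitoring whether the data a model sees in production still resembles its training data. One widely used drift measure is the Population Stability Index (PSI). The sketch below is an illustration, not the method used in the cited cases: it compares binned distributions and flags retraining above an assumed rule-of-thumb threshold of 0.25.

```python
import math

def population_stability_index(expected: list[float], actual: list[float],
                               eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected).

    Both inputs are proportions per bin (each list sums to 1).
    """
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # guard against empty bins (log of zero)
        psi += (a - e) * math.log(a / e)
    return psi

def needs_retraining(expected: list[float], actual: list[float],
                     threshold: float = 0.25) -> bool:
    """Flag retraining when the shift exceeds an assumed rule-of-thumb threshold."""
    return population_stability_index(expected, actual) > threshold

# Hypothetical share of customers per preference segment.
training_mix = [0.25, 0.25, 0.25, 0.25]  # when the model was trained
current_mix = [0.10, 0.15, 0.30, 0.45]   # observed today
print(round(population_stability_index(training_mix, current_mix), 3))
print(needs_retraining(training_mix, current_mix))  # True
```

A drift alarm does not prove the model has become biased; it signals that its training assumptions no longer hold and that it should be re-evaluated.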

Mitigation strategies include obtaining more representative data, regular model updates, and mathematical de-biasing techniques. However, as Dickson (2018) argues, algorithmic bias ultimately reflects human prejudices, making it essential to address biases in society at large. Technical solutions alone cannot fully address these deeply rooted social issues.
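Reweighing is one example of a mathematical de-biasing technique: training examples are weighted so that group membership and outcome label become statistically independent in the training data. The sketch below assumes hypothetical binary labels and two groups. It illustrates only this one pre-processing step and, as the paragraph above stresses, does not remove the underlying social bias.

```python
from collections import Counter

def reweighing_weights(samples: list[tuple[str, int]]) -> dict[tuple[str, int], float]:
    """Weight for each (group, label) cell: P(group) * P(label) / P(group, label).

    After weighting, group membership and label are statistically independent
    in the training data, so the weighted positive rate is equal across groups.
    """
    n = len(samples)
    group_counts = Counter(g for g, _ in samples)
    label_counts = Counter(y for _, y in samples)
    cell_counts = Counter(samples)
    return {
        (g, y): (group_counts[g] / n) * (label_counts[y] / n) / (count / n)
        for (g, y), count in cell_counts.items()
    }

def weighted_positive_rate(samples: list[tuple[str, int]],
                           weights: dict[tuple[str, int], float], group: str) -> float:
    """Share of (weighted) positive labels within one group."""
    rows = [(y, weights[(g, y)]) for g, y in samples if g == group]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)

# Hypothetical historical decisions: group A approved 6/8 times, group B 2/8 times.
data = [("A", 1)] * 6 + [("A", 0)] * 2 + [("B", 1)] * 2 + [("B", 0)] * 6
w = reweighing_weights(data)
print(weighted_positive_rate(data, w, "A"), weighted_positive_rate(data, w, "B"))
```

Without weights the positive rates are 0.75 and 0.25; with them both become 0.5. A model trained on the weighted data is less likely to learn group membership as a proxy for the outcome, but this addresses only one statistical symptom of bias.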

The Problem of Explainability

A further major pitfall concerns AI explainability, often referred to as the “black box” problem. The primary challenge lies in humans’ inability to replicate or explain incorrect decisions made by machines (Nogrady, 2016). This issue is particularly pronounced with generative AI models, whose outputs are inherently stochastic, making definitive explanations statistically challenging at best.
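The stochasticity comes from how generative models typically decode their output: at each step they sample from a probability distribution rather than always choosing the single most likely option. The sketch below uses temperature sampling over made-up scores (a simplification of how real language models decode) to show that the same input can produce different outputs, which is why a single output is hard to explain definitively.

```python
import math
import random

def sample_next_token(logits: dict[str, float], temperature: float,
                      rng: random.Random) -> str:
    """Sample one token from softmax(logits / temperature)."""
    if temperature == 0:
        return max(logits, key=logits.get)  # greedy decoding: deterministic
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())
    # Subtracting the maximum keeps exp() numerically stable.
    weights = {tok: math.exp(s - top) for tok, s in scaled.items()}
    return rng.choices(list(weights), weights=list(weights.values()))[0]

# Hypothetical model scores for the next word after "The loan was ..."
logits = {"approved": 2.0, "denied": 1.5, "deferred": 0.5}
rng = random.Random(0)
print([sample_next_token(logits, 1.0, rng) for _ in range(5)])  # varies with the seed
print([sample_next_token(logits, 0, rng) for _ in range(5)])    # always "approved"
```

Greedy decoding removes the randomness but not the opacity: it only guarantees the same answer, not an explanation of why that answer scored highest.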

Recent research by Shumailov et al. (2024) highlights a concerning trend: the indiscriminate use of model-generated content in training leads to irreversible defects in the resulting models, with the tails of the original content distribution disappearing entirely. This phenomenon, which the authors call “model collapse” and which is sometimes informally described as “model inbreeding,” raises significant concerns about the long-term sustainability of current AI development practices. The issue is further complicated by recursive training, where models trained on synthetic data may perpetuate and amplify existing biases or errors.

Synthetic data nevertheless presents both opportunities and challenges in this context. While it can be valuable in specific applications, such as training autonomous vehicle systems when real data is scarce or unavailable (Alemohammad et al., 2023), it is not a universal solution to the growing demand for AI training data. As noted by NVIDIA, synthetic data can help fill specific data gaps and improve perception accuracy in autonomous vehicles, but human oversight remains crucial for ensuring safety and reliability.
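A common toy illustration of model collapse uses a one-dimensional Gaussian as the "model". The sketch below is a simplified setting, not the cited authors' experimental setup: each generation is fitted only to a finite sample generated by the previous generation, and the estimated spread tends to shrink, so the tails of the original distribution are progressively lost.

```python
import random
import statistics

def simulate_recursive_training(generations: int, n_samples: int,
                                seed: int = 0) -> list[float]:
    """Fit a Gaussian to samples drawn only from the previous generation's fit.

    Returns the fitted standard deviation for every generation (index 0 = real data).
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the "real" data distribution
    history = [sigma]
    for _ in range(generations):
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        mu = statistics.fmean(synthetic)
        sigma = statistics.pstdev(synthetic, mu)  # maximum-likelihood estimate, biased low
        history.append(sigma)
    return history

stds = simulate_recursive_training(generations=500, n_samples=20)
for g in (0, 10, 100, 500):
    print(f"generation {g}: std = {stds[g]:.3g}")
```

With only 20 samples per generation, the fitted standard deviation typically collapses toward zero over hundreds of generations. Larger samples slow the effect but, in this toy setting, do not remove the downward drift, because each re-estimate of the spread is biased downward on a logarithmic scale.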

While major technology companies like Microsoft and Google promote exploration tools to visualize prediction rationales, providing truly explainable AI (XAI) remains problematic without fully exposing system internals. Holweg et al. (2022) found that explainability-related failures account for 14% of AI controversies, highlighting the significance of this challenge. The tension between transparency and proprietary technology protection further complicates efforts to achieve meaningful explainability.
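Explanations are easiest for models whose structure is simple. As an illustration only (not a description of the Microsoft or Google tools mentioned above), the sketch below explains a single decision of a hypothetical linear credit-scoring model by splitting its difference from a reference applicant into exact per-feature contributions. Deep and generative models have no such exact decomposition, so post-hoc XAI methods for them can only approximate the model's behavior.

```python
def linear_score(weights: dict[str, float], bias: float, x: dict[str, float]) -> float:
    """Score of a linear model: bias + sum of weight * feature value."""
    return bias + sum(weights[f] * x[f] for f in weights)

def explain_linear_decision(weights: dict[str, float], applicant: dict[str, float],
                            baseline: dict[str, float]) -> dict[str, float]:
    """Per-feature contributions to score(applicant) - score(baseline).

    Because the model is linear, the contributions add up exactly to the
    score difference (the bias cancels out).
    """
    return {f: weights[f] * (applicant[f] - baseline[f]) for f in weights}

# Hypothetical credit-scoring model and inputs, for illustration only.
weights = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
bias = -1.0
applicant = {"income_k": 45, "debt_ratio": 0.6, "late_payments": 2}
baseline = {"income_k": 60, "debt_ratio": 0.3, "late_payments": 0}  # e.g. a typical approved applicant

contributions = explain_linear_decision(weights, applicant, baseline)
for feature, c in sorted(contributions.items(), key=lambda kv: kv[1]):
    print(f"{feature:>14}: {c:+.2f}")
```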

Understanding the Complex Web of Biases in AI Systems

The relationship between human and machine biases presents a fundamental challenge in artificial intelligence development. As highlighted in Chapter 2, this complexity emerges from the intricate interplay between human cognitive tendencies and technical limitations of AI systems, creating a cyclical pattern of bias reinforcement that demands systematic mitigation strategies. The cycle of bias perpetuation follows a clear pathway: social conditioning and personal experience create individual biases, which influence data collection and system design. This biased foundation then feeds into AI systems, creating what Holweg et al. (2022) describe as a risk of building inherently unfair systems that not only transfer societal biases but potentially amplify them through feedback loops.

Biases in AI systems manifest in three primary domains (Eppler, 2021): data gathering, analysis, and application. Data gathering challenges include selection bias and survivor bias, where the data collection process becomes skewed. Analysis-phase complications emerge through confirmation bias, outlier effects, and confounding variables, leading to what Eder (2018) identifies as systematic distortions in AI decision-making processes.

- Data Gathering Biases: Including selection bias and survivor bias

- Data Analysis Biases: Encompassing confirmation bias, outlier effects, confounding variables, normality bias, overfitting, and the Dunning-Kruger effect

- Data Application Biases: Including the correlation-causation fallacy and the curse of knowledge

Technical implementation introduces additional AI-specific biases. These include deployment bias (wrong application context), measurement bias (distorted success metrics), and label bias (prejudiced data categorization). Particularly concerning is automation bias, where humans tend to overestimate AI capabilities and neglect critical evaluation of outputs (Mishina et al., 2012). As noted in recent analyses of AI failures, adequate human oversight remains a crucial regulatory requirement to prevent automation bias.
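Human oversight is often operationalized as a routing rule that decides when a person, rather than the model, makes the call. The sketch below is one hypothetical design: low-confidence outputs go to human review, and a random share of high-confidence outputs are spot-checked so that reviewers keep evaluating outputs critically instead of deferring to the system. The threshold and audit rate are assumed policy parameters, not regulatory values.

```python
import random
from dataclasses import dataclass

@dataclass
class Decision:
    label: str
    confidence: float
    decided_by: str  # "model", "human_review", or "human_spot_check"

def route_prediction(label: str, confidence: float, rng: random.Random,
                     threshold: float = 0.9, audit_rate: float = 0.05) -> Decision:
    """Decide who makes the final call on a model prediction (hypothetical policy)."""
    if confidence < threshold:
        # Uncertain output: a person decides, with the model's suggestion as input.
        return Decision(label, confidence, "human_review")
    if rng.random() < audit_rate:
        # Random spot checks of confident outputs counter automation bias.
        return Decision(label, confidence, "human_spot_check")
    return Decision(label, confidence, "model")

rng = random.Random(42)
print(route_prediction("fraud", 0.62, rng).decided_by)  # human_review
```

The design only helps if the model's confidence scores are reasonably well calibrated; otherwise confident errors bypass review.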

Several types of biases affect AI systems:

- Deployment Bias: Incorrect application context leading to misaligned outputs

- Overfitting: Excessive alignment with sample data, reducing generalizability

- Selection Bias: Unbalanced data sampling that skews results

- Automation Bias: Overreliance on technology at the expense of human judgment

- Label Bias: Prejudiced assignment of data categories

- Measurement Bias: Distorted success metrics

- Algorithmic Bias: Distorted coding approaches

Prevention of these biases requires a comprehensive approach. As demonstrated by the cases analyzed by Holweg et al. (2022), organizations must implement regular system audits, maintain diverse development teams, and establish robust testing protocols. However, the most crucial element remains human oversight – a key regulatory requirement that helps prevent automation bias and ensures AI systems remain accountable to human judgment and ethical considerations.
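A regular system audit can be as simple as comparing error rates across groups on a labelled evaluation set. The sketch below checks false positive rates (for example, false matches in a face-matching system) per group against an assumed tolerance. The metric, the 0.05 tolerance, and the sample data are hypothetical; a real audit would choose fairness metrics appropriate to the use case.

```python
from collections import defaultdict

def false_positive_rates(records: list[tuple[str, int, int]]) -> dict[str, float]:
    """False positive rate per group from (group, true_label, predicted_label) records."""
    false_pos: dict[str, int] = defaultdict(int)
    negatives: dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 0:
            negatives[group] += 1
            if y_pred == 1:
                false_pos[group] += 1
    return {g: false_pos[g] / n for g, n in negatives.items()}

def audit(records: list[tuple[str, int, int]],
          max_gap: float = 0.05) -> tuple[dict[str, float], bool]:
    """Pass only if the largest gap in false positive rates between groups is within max_gap."""
    rates = false_positive_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return rates, gap <= max_gap

# Hypothetical evaluation set: true non-matches, some wrongly flagged as matches.
records = ([("group_x", 0, 1)] * 2 + [("group_x", 0, 0)] * 98
           + [("group_y", 0, 1)] * 10 + [("group_y", 0, 0)] * 90)
rates, passed = audit(records)
print(rates, passed)
```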

Societal Implications and Future Considerations

These technical challenges are compounded by broader societal concerns. The development of AI systems is often driven by capitalist motivations, potentially benefiting a select few at society’s expense. This raises questions about the social competency of those programming tomorrow’s world and the potential for creating societal problems that might otherwise have been avoided.

A recent study by the Gottlieb Duttweiler Institute highlights this disconnect, showing that consumers remain sceptical about whether AI’s productivity gains will translate into meaningful benefits for them (SRF, 2024). This scepticism is understandable given how AI agents are designed: a rational agent acts to achieve the best outcome or, under uncertainty, the best expected outcome, as measured by the objective it has been given (Russell & Norvig, 2016). When that objective reflects organizational goals rather than societal benefits, the divergence creates a tension that must be carefully managed.

The challenge of maintaining digital trust in AI systems requires a multi-faceted approach that addresses technical, ethical, and social considerations. As AI continues to evolve, the importance of developing robust frameworks for privacy protection, explainable AI, and bias mitigation becomes increasingly critical. These challenges translate into significant compliance risks that organizations must carefully navigate while striving to maintain public trust and technological advancement.

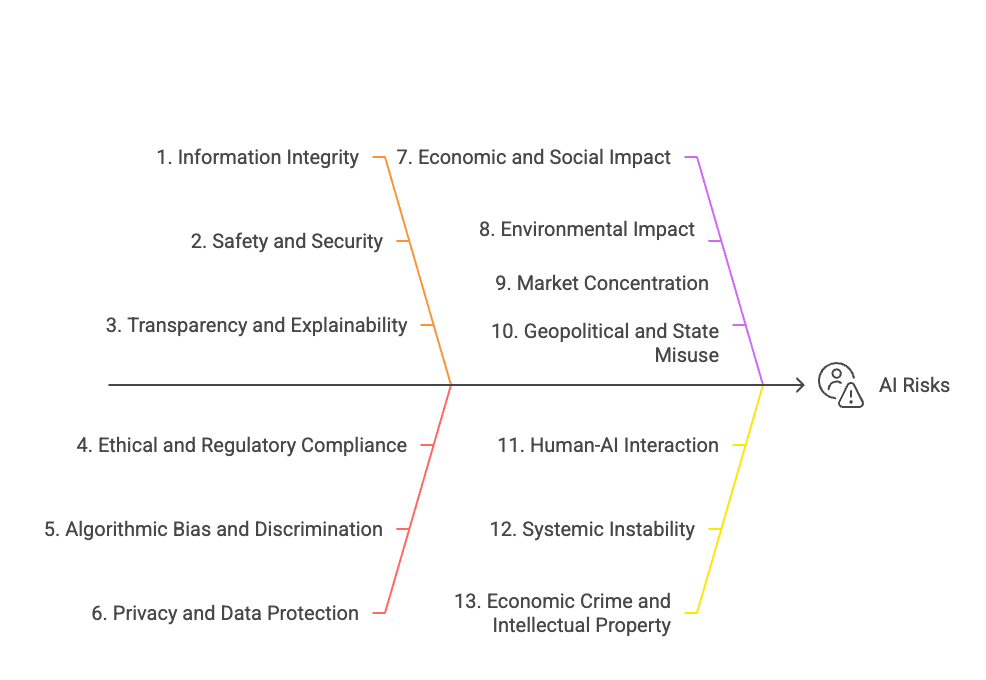

AI Risk Framework

Our AI risk framework provides a clear, structured categorization of the primary threats and issues associated with artificial intelligence. It enables researchers, practitioners, and policymakers to systematically identify, analyze, and manage potential negative impacts of AI technologies.

To develop this framework, we first reviewed various AI-related failure types and real-world cases drawn from industry reports, academic literature, regulatory guidelines, and prominent expert analyses, including Harvard Business Review (Chakravorti, 2024), the European Union’s AI Act, the National Institute of Standards and Technology (NIST) frameworks, and notable AI ethics publications. Using the MECE (Mutually Exclusive, Collectively Exhaustive) principle, a core consulting methodology, we refined and consolidated these AI risks. Each risk category was carefully defined to ensure no overlaps (mutual exclusivity) and to cover all identified issues (collective exhaustiveness). Key AI failure types, such as privacy, bias, and explainability, were explicitly mapped onto the categories to validate thoroughness and clarity. This structured approach draws on standard analytical techniques, including thematic analysis, cross-case validation, and iterative refinement.

1. Information Integrity Risk

Description:

Risks arising from AI-generated or AI-amplified false, misleading, or inaccurate information that undermines trust or decision-making.

Examples:

- Deepfakes used in misinformation campaigns

- Automated moderation falsely censoring accurate content

- Large language model (LLM) hallucinations providing incorrect advice

2. Safety and Security Risk

Description:

Risks involving AI systems causing or enabling physical harm, cybersecurity vulnerabilities, or critical infrastructure disruptions.

Examples:

- Autonomous vehicle accidents causing injuries

- AI-enabled cyberattacks on power grids

- Malfunctioning robotic surgical devices harming patients

3. Transparency and Explainability Risk

Description:

Risks related to opaque or inadequately explained AI decision-making processes that impair accountability and stakeholder trust.

Examples:

- AI-based financial systems making unexplained loan decisions

- Medical AI diagnostic tools failing to explain reasoning clearly

4. Ethical and Regulatory Compliance Risk

Description:

Risks arising from AI violating ethical standards, cultural norms, or regulatory and legal obligations.

Examples:

- AI systems used to evade compliance or commit bribery

- Unethical AI testing lacking informed consent

- AI surveillance technologies breaching privacy laws (e.g., GDPR)

5. Algorithmic Bias and Discrimination Risk

Description:

Risks involving AI systems systematically producing biased or unfair outcomes against specific demographic groups.

Examples:

- AI hiring algorithms disadvantaging minorities

- Facial recognition software disproportionately misidentifying people of color

6. Privacy and Data Protection Risk

Description:

Risks involving unauthorized or inappropriate AI-driven collection, use, or disclosure of personal or sensitive data.

Examples:

- Unauthorized biometric tracking via facial recognition

- AI-based identity verification without user consent

- Misuse of personal data for AI-driven profiling

7. Economic and Social Impact Risk

Description:

Risks involving significant negative economic or social consequences resulting from AI-driven automation, market disruption, or inequality.

Examples:

- Large-scale unemployment due to AI automation

- Increased social inequalities from unequal access to AI technologies

- Consumer rights violations due to AI-based business models

8. Environmental Impact Risk

Description:

Risks involving significant environmental harm from the resource-intensive development, deployment, or disposal of AI systems.

Examples:

- Excessive carbon emissions from training large AI models

- AI hardware generating significant electronic waste

9. Market Concentration Risk

Description:

Risks arising from AI-driven consolidation of market power, monopolistic practices, or anti-competitive behaviors.

Examples:

- AI-driven pricing manipulation in e-commerce platforms

- Monopolization of foundational AI models, AI chips, or cloud infrastructure (e.g., NVIDIA GPUs, AWS cloud)

- AI algorithms facilitating market manipulation

10. Geopolitical and State Misuse Risk

Description:

Risks involving misuse of AI technologies by governments or state actors for excessive surveillance, social control, manipulation, or geopolitical conflicts.

Examples:

- Mass surveillance enabled by AI facial recognition

- Predictive policing systems undermining civil liberties

- AI-powered propaganda influencing geopolitical stability

11. Human-AI Interaction Risk

Description:

Risks arising from inappropriate reliance on AI systems, flawed human-AI interactions, or erosion of critical human skills and judgment.

Examples:

- Pilots relying excessively on AI autopilots, leading to accidents

- Doctors experiencing reduced diagnostic skills due to overreliance on AI diagnostics

12. Systemic Instability Risk

Description:

Risks from unpredictable, unstable, or emergent AI behaviors causing cascading failures or broader systemic disruptions.

Examples:

- AI-based high-frequency trading triggering flash crashes

- Autonomous vehicles behaving unpredictably in complex urban environments, causing traffic disruptions

13. Economic Crime and Intellectual Property Risk

Description:

Risks involving AI-enabled financial deception, fraudulent activities, or infringement on intellectual property rights causing economic harm.

Examples:

- AI-powered identity fraud and fraudulent payment processing

- Misleading marketing practices using AI-generated claims

- Unauthorized replication and distribution of copyrighted content
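The MECE principle behind this framework can also be applied mechanically when incidents are tagged against it. The sketch below encodes the thirteen categories as an enumeration and flags incidents that receive more than one category (a possible overlap) or none (a possible coverage gap). The incident tags are hypothetical; a flagged case signals that the definitions or the tagging need review, not that the framework is wrong.

```python
from enum import Enum, auto

class AIRisk(Enum):
    """The thirteen risk categories of the framework above."""
    INFORMATION_INTEGRITY = auto()
    SAFETY_AND_SECURITY = auto()
    TRANSPARENCY_AND_EXPLAINABILITY = auto()
    ETHICAL_AND_REGULATORY_COMPLIANCE = auto()
    ALGORITHMIC_BIAS_AND_DISCRIMINATION = auto()
    PRIVACY_AND_DATA_PROTECTION = auto()
    ECONOMIC_AND_SOCIAL_IMPACT = auto()
    ENVIRONMENTAL_IMPACT = auto()
    MARKET_CONCENTRATION = auto()
    GEOPOLITICAL_AND_STATE_MISUSE = auto()
    HUMAN_AI_INTERACTION = auto()
    SYSTEMIC_INSTABILITY = auto()
    ECONOMIC_CRIME_AND_IP = auto()

def mece_report(incidents: dict[str, set[AIRisk]]) -> dict[str, list[str]]:
    """Flag incidents that break mutual exclusivity or collective exhaustiveness."""
    return {
        "overlapping": [name for name, cats in incidents.items() if len(cats) > 1],
        "unclassified": [name for name, cats in incidents.items() if not cats],
    }

# Hypothetical incident tags, for illustration only.
incidents = {
    "deepfake election video": {AIRisk.INFORMATION_INTEGRITY},
    "biased hiring model": {AIRisk.ALGORITHMIC_BIAS_AND_DISCRIMINATION},
    "face tracking without consent": {AIRisk.PRIVACY_AND_DATA_PROTECTION,
                                      AIRisk.GEOPOLITICAL_AND_STATE_MISUSE},
    "new incident awaiting review": set(),
}
print(mece_report(incidents))
```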

2. Navigating Risks and Trust Challenges in an Evolving Technological Environment

The rapid advancement of artificial intelligence technologies has created a complex landscape where regulatory frameworks struggle to keep pace with technological innovation. This disconnect has led to significant compliance risks and trust challenges that organizations must navigate while implementing AI systems. The prevailing view is that AI has progressed far more rapidly than regulators have been able to address its associated risks. This analysis explores the key complications and tensions that characterize the current state of AI technology implementation.

Compliance Risks and Technical Challenges

The implementation of AI systems presents multiple compliance risks that organizations must address. According to recent audit findings, these risks manifest in several critical areas. Inaccurate or imprecise results can lead to a loss of customer trust and potential legal liability, particularly when AI systems influence accounting processes through Robotic Process Automation (RPA). Privacy protection threats, including violations of the “right to be forgotten” (the right to erasure under Article 17 of the GDPR), present significant legal challenges.

A particularly concerning aspect is the potential for social discrimination and injustice through inappropriate practices and biases related to race, gender, ethnicity, age, and income. The complexity of learning algorithms, which often operate as “black boxes,” further complicates accountability and oversight. This lack of transparency can lead to a loss of accountability in decision-making processes and difficulties maintaining human oversight of AI systems. Furthermore, there are growing concerns about the potential for manipulation or malicious use of AI systems, including criminal activities, interference in democratic processes, and economic optimization that may harm societal interests.

Regulatory Response and Ethics Framework

In response to these challenges, various regulatory initiatives have emerged. The EU’s AI Act, which entered into force in August 2024, represents the first comprehensive regulation on AI and is expected to influence global standards much as the GDPR shaped data protection regulation worldwide. However, as Bengio et al. (2024b) note, current governance initiatives lack robust mechanisms and institutions to prevent misuse and recklessness, particularly concerning autonomous systems.

The concept of Trustworthy AI (TAI) has emerged as a framework to address these challenges. In early 2019, the Independent High-Level Expert Group on Artificial Intelligence of the European Commission released its Ethics Guidelines for Trustworthy AI. These guidelines rapidly gained traction in both research and practice, shaping the adoption of the term Trustworthy AI in various frameworks, including the OECD AI Principles (OECD, 2019b) and the White House AI Principles (Vought, 2020). Such frameworks are increasingly recognized as essential for developing AI systems that can be safely integrated into society while maintaining public trust. They emphasize that individuals, organizations, and societies can only unlock AI’s full potential if trust is established in its development, deployment, and use (Thiebes et al., 2021; Jacovi et al., 2021). Principles for TAI are discussed in a later section.

The Australian Government provides an example of an extensive policy for the responsible use of AI in government (2024). The policy is translated into more actionable standards for implementation (e.g., regarding transparency).

Global Regulatory Landscape and Regional Approaches

The absence of a unified global framework for AI regulation has led to regional approaches. While the EU leads with comprehensive legislation, other regions are developing their own regulatory frameworks. This fragmentation creates additional challenges for organizations operating internationally, as they must navigate varying compliance requirements across jurisdictions.

The OECD (2019a) has established several principles for responsible AI development. These principles have influenced regulatory development worldwide, though implementation approaches vary significantly by region. Countries increasingly see comparative advantages tied to economic drivers, making convergence to a global approach unlikely. Instead, regional centres of excellence are emerging, each with distinct regulatory emphases and priorities.

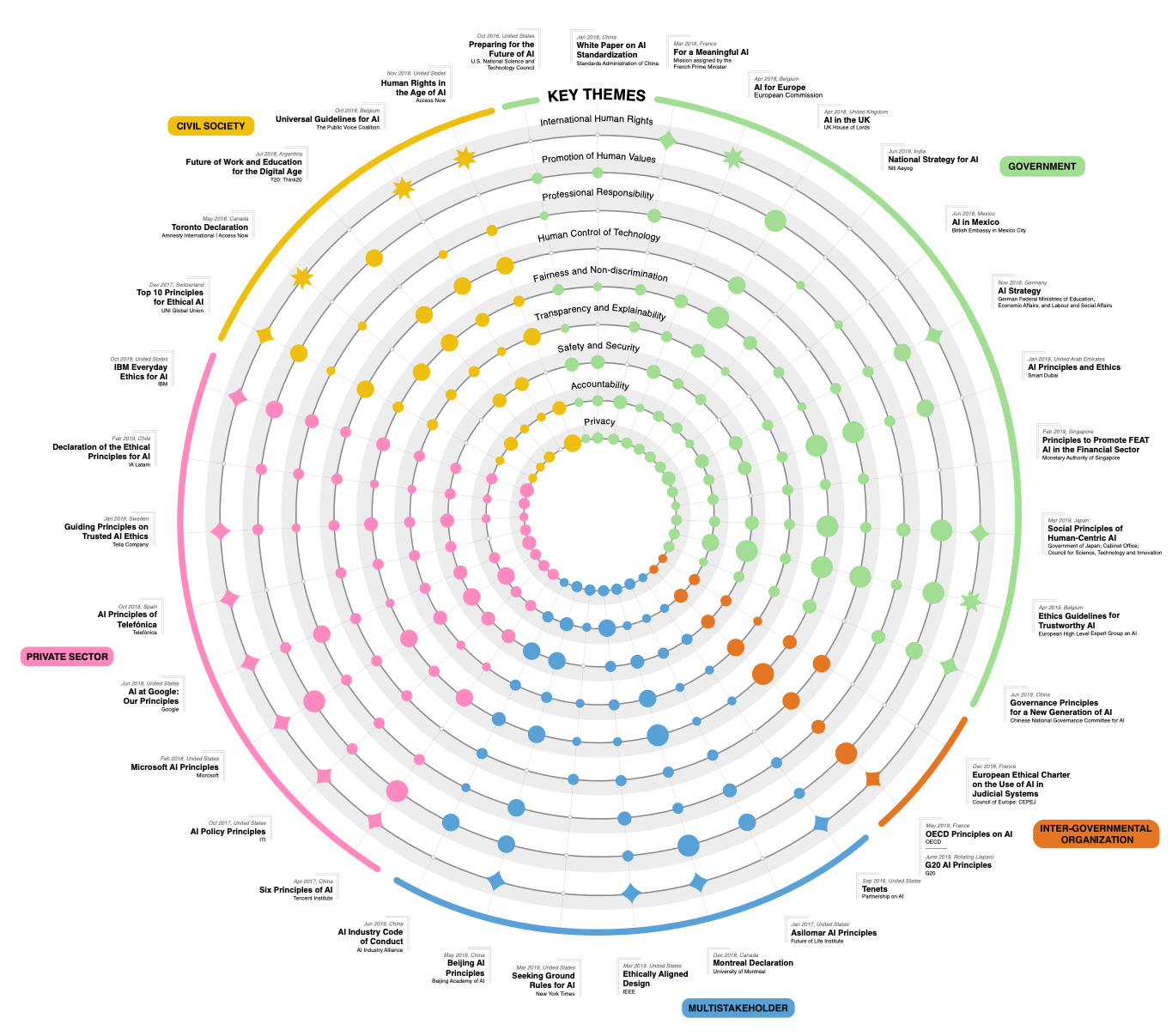

Today, numerous organizations have developed AI principles to promote ethical, rights-respecting, and socially beneficial AI. A study analyzing 36 prominent AI principles documents identified emerging sectoral norms despite differences in authorship, audience, scope, and cultural context. These documents come from governments, intergovernmental organizations, private companies, advocacy groups, and multi-stakeholder initiatives, each with varying objectives, ranging from national AI strategies to internal governance or advocacy agendas. Despite these variations in focus, the eight themes outlined below highlight a shared vision for responsible AI governance across different sectors and geographies (Fjeld et al., 2020).


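- Fairness and Non-discrimination: Must promote inclusivity and mitigate biases

- Privacy: Must respect privacy, ensuring data protection and user control

- Accountability: Mechanisms to distribute responsibility and provide remedies

- Transparency and Explainability: Clarity on usage and decision-making

- Safety and Security: Safeguarded against unauthorized interference

- Professional Responsibility: Act with integrity and long-term foresight

- Human Control of Technology: Critical decisions remain under human oversight

- Promotion of Human Values: Align with fundamental human values and well-being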

Stakeholder Perceptions and Trust Dynamics

Holweg et al. (2022) provide a valuable framework for understanding stakeholder perceptions of AI failures, identifying two crucial dimensions: capability and character. Stakeholders assess organizations based on their technical competence (capability) and on their values and priorities (character). Their research reveals that privacy-related AI failures are predominantly attributed to perceived bad character (43% of cases), while bias-related failures are more commonly linked to capability shortfalls (24% of cases). For more details on definitions, conceptualizations, and operationalizations of the complex construct of organizational reputation, refer to Lange & Lee (2010).

Capability: Because most stakeholders have limited access to information such as the resources an organization owns, the appropriateness of its algorithms, and the quality of its training data, they tend to judge the organization’s capability by whether the AI system works, that is, how accurate, reliable, and robust it is. “Capability reputation is about whether the firm makes a good product or provides a good service” (Bundy, 2021).

These findings have significant implications for organizations implementing AI systems. Understanding how stakeholders perceive different types of AI failures can help organizations develop more effective response strategies and proactive measures to maintain trust. This is particularly important as explainability failures are increasingly attributed to a perceived lack of technical competence rather than intentional opacity.

Social Adaptation and Institutional Trust

The growing importance of social adaptation and institutional trust in AI implementation cannot be overstated. As Glikson and Woolley (2020) note in their review of human trust in artificial intelligence, the development of trust in AI systems depends heavily on perceived consensus about cooperative norms. This understanding has led to increased focus on developing social adapters that can help bridge the gap between AI capabilities and human expectations.

Trust within human-machine collectives depends on the perceived consensus about cooperative norms (Makovi et al., 2023).

The evolving trust dynamics within the iceberg model affect generations in distinct ways. Research by Hoffmann et al. (2014) suggests that Digital Natives, while strongly influenced by brand perception and associated signals, demonstrate greater receptivity to structural trust-building measures in technological environments. In contrast, older generations – Digital Immigrants – exhibit more difficulty in adapting to new “situation normality” paradigms, typically prioritizing a careful assessment of the risk-benefit ratio before establishing trust. This generational divide in trust formation mechanisms suggests that organizations may need to adopt differentiated approaches to building and maintaining trust across different age demographics.

Trust in artificial intelligence systems develops within a complex framework where institutional structures and social adaptation mechanisms play crucial roles. Institution-based trust, a fundamental element of the iceberg model, encompasses the structural requirements necessary for creating and maintaining a trustworthy environment for AI implementation. These structural elements are primarily manifested through regulatory frameworks and governance mechanisms.

Current regulatory approaches show significant regional variations, particularly between the European Union’s comprehensive regulatory efforts and the comparatively less stringent approaches in China and the United States. This divergence in regulatory frameworks poses challenges for building consistent global trust in AI systems. The EU’s approach, exemplified by the AI Act, represents a structured attempt to establish clear guidelines and requirements while other regions maintain more flexible regulatory environments (Bengio et al., 2023).

The emergence of explainable AI (XAI) and trustworthy AI (TAI) frameworks represents a significant advancement in addressing these challenges. These frameworks serve as crucial components of the social adapter mechanism, which is gaining increasing importance in the AI trust landscape. The social adapter encompasses innovative strategic elements within the technological space that shape how consumers perceive both structural assurance and situation normality in AI interactions.

This dual approach – combining institutional frameworks with social adaptation mechanisms – creates a more comprehensive foundation for trust-building in AI systems. The social adapter’s role is particularly crucial as it helps bridge the gap between technical capabilities and user expectations, influencing how stakeholders perceive and interact with AI systems (Holweg et al., 2022). This perception encompasses both the technical competence of AI systems and their alignment with societal values and norms.

3. From Principles to Action: Ensuring Trustworthy AI

The implementation of trustworthy artificial intelligence (TAI) requires a comprehensive approach that bridges theoretical principles with practical action. As Bryson (2020) argues, AI development produces material artefacts that require the same rigorous auditing, governance, and legislation as other manufactured products. This perspective frames the move from abstract principles to the concrete implementation of trustworthy AI systems.

Framework and Principles

As detailed earlier in this chapter, the European Union’s framework for trustworthy AI laid the foundation for the EU AI Act. It establishes four fundamental principles: respect for human autonomy, prevention of harm, fairness, and explicability. These principles are operationalized through seven key requirements: human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination, and fairness; societal and environmental well-being; and accountability.

System trustworthiness properties, as outlined by Pavlidis (2021), emphasize that a trustworthy system must meet not only stated customer needs but also unstated and unanticipated requirements. This is particularly important given Bengio et al.’s (2024) warning that powerful frontier AI systems should not be treated as “safe unless proven unsafe,” which highlights the need for proactive safety measures.

AI and machine learning occurs through design and produces a material artifact; auditing, governance, and legislation should be applied to correct sloppy or inadequate manufacturing, just as we do with other products (Bryson, 2020).

Despite widespread discussions on AI ethics, there remains a significant gap between ethical principles and real-world AI practices. Developers and companies often fail to integrate ethics into their workflows. Studies show that even when explicitly instructed, software engineers and AI practitioners do not change their development practices to align with ethical guidelines, revealing a systemic issue in which ethics remain theoretical rather than actionable (McNamara et al., 2018; Vakkuri et al., 2019). This is a worrying situation because organisations are the first line of defence in protecting personal privacy, avoiding biases, and ensuring that nudging is not overused or misused (Sattlegger & Nitesh, 2024).

While efforts are underway to operationalize AI ethics – such as through ethics boards and collaborative codes – translating high-level principles into concrete technological implementations remains a daunting challenge (Munn, 2022). Clearly, these admirable principles do not enforce themselves, nor are there any tangible penalties for violating them (Calo, 2021). This is further complicated by competing ethical demands, technical constraints, and unresolved questions of fairness, privacy, and accountability, which require ongoing social, political, and technical engagement rather than simplistic technical fixes.

The figure presents key properties of trustworthy systems as identified in the literature, highlighting various dimensions such as tangibility, transparency, reliability, and other behavioural and structural characteristics that influence cognitive trust in AI (Glikson, 2020). Our model incorporates these attributes, aligning with the framework proposed by Pavlidis (2021), who, in turn, builds upon the foundational work of Hoffman et al. (2006).

Two key aims emerge: transparency, which ensures visibility into how AI systems function through tools like auditing frameworks and model reporting, and accountability, which establishes mechanisms to address harms through governance, enforcement, and community-driven redress. A broad range of stakeholders – including developers, managers, business leaders, policymakers, and professional organizations – must collaborate to integrate ethical AI practices into design, oversight, and regulation. By combining transparency with accountability, this approach moves beyond abstract ethical principles toward practical and enforceable AI governance.

While ethical principles and guidelines have been widely discussed (Floridi & Cowls, 2019; Jobin et al., 2019), they lack enforceability, which renders them ineffective in regulating AI systems. Without tangible penalties for violations, organizations and developers may have little incentive to adhere to them (Mittelstadt et al., 2016). Such principle-based approaches may therefore fail to provide adequate guidance for building public trust in AI. Instead, a shift from toothless principles toward enforceable AI governance frameworks is necessary to align legal structures with the evolving nature of human-machine collaboration (Calo, 2021).

Given the rapid integration of AI into various domains, we face two possible paths: A) ignore AI’s transformative potential and allow technological advancements to unfold without legal adaptation; or B) recognize that AI fundamentally alters human affordances, requiring an evolution of legal structures to account for new human-AI interactions (Binns, 2018). This chapter advocates the latter approach and outlines the necessary first steps. Enforceable AI governance requires the elaboration of three dimensions:

1 Human-AI Synergy:

Understanding Roles and Enhancing Collaboration

AI should augment, not replace, human decision-making—especially in high-risk areas such as healthcare, finance, and criminal justice. Effective collaboration requires clear role definition, explainability, and human oversight to ensure AI remains an assistant rather than an unchecked decision-maker.

2 AI Alignment & Transparency:

Refining, Guiding, & Managing AI Systems

AI must be aligned with human values, transparent in its operations, and continuously monitored to ensure fairness, reliability, and adaptability. This requires real-time oversight, dynamic governance, and continuous improvement through transparent AI development practices.

3 Accountability & Oversight:

Establishing Responsibility & Redress Mechanisms

AI accountability demands clear ownership, governance structures, and legal frameworks to ensure responsible use, prevent harm, and enable redress for affected individuals. Organizations must embed legal, technical, and operational mechanisms to enforce accountability across AI development and deployment.

Building on the figure “Properties of trustworthy systems and operationalizable aims,” these relatively abstract principles of trustworthy AI must be operationalized further. The objectives of transparency and accountability call for a multidimensional approach encompassing legal, technical, and human perspectives. Each dimension comprises a non-exhaustive set of instruments that collectively contribute to establishing a genuinely enforceable AI governance framework.

- Establishing clear boundaries of AI decision-making authority (Russell, 2019).

- Ensuring human oversight in critical applications such as healthcare and legal decision-making (Danks & London, 2017).

Know your place

Discussions on the future of human labour are pervasive and widely debated across various disciplines. Rather than viewing AI as a replacement for human labour, a more nuanced understanding reveals its potential as a catalyst for more meaningful and fulfilling work. This perspective emphasizes AI’s capacity to handle repetitive and mundane tasks, freeing humans to focus on activities that leverage their unique qualities, such as creativity, critical thinking, and emotional intelligence.

Daugherty and Wilson (2024) present a comprehensive framework for understanding this human-AI relationship through their “Missing Middle” model. This model identifies three distinct categories of work: human-only activities, human-machine hybrid activities, and machine-only activities. Human-only activities encompass leadership, empathy, creativity, and judgment – capabilities that remain uniquely human. Machine-only activities include transactions, iterations, predictions, and adaptations, where AI’s computational power excels.

The most interesting developments occur in the hybrid space, what Daugherty and Wilson term “the Missing Middle.” This space is divided into two key areas: humans complementing machines and machines augmenting human capabilities. In the first category, humans serve as trainers, explainers, and sustainers. Trainers educate AI systems on appropriate values and performance parameters, ensuring that these systems reflect desired behaviours and ethical principles. Explainers or translators bridge the communication gap between technical and business domains, making complex AI systems comprehensible to non-technical stakeholders. Sustainers focus on maintaining system quality and ensuring long-term value through principles of explainability, accountability, fairness, and symmetry.

On the other side of this hybrid space, AI systems enhance human capabilities through amplification, interaction, and embodiment. Amplification allows professionals to process and analyze vast amounts of data, enabling more informed strategic decision-making. Interaction capabilities, particularly through natural language processing, create more intuitive human-machine interfaces. Embodiment represents the physical collaboration between humans and robots in shared workspaces, enabled by advanced sensors and actuators.

This framework suggests that the future of work lies not in competition between humans and machines but in their complementary collaboration. Organizations that understand and effectively implement this model can create more productive and fulfilling work environments, where both human and artificial intelligence contribute their unique strengths to achieve superior outcomes.

Actionable instruments supporting human-AI synergy

Human-in-the-Loop (HITL) Decision-Making

Integrating human oversight into AI processes ensures that critical decisions, especially in high-stakes domains, are reviewed and validated by humans (Van Rooy & Vaes, 2024; Bansal et al., 2019). This approach leverages the strengths of both human judgment and AI efficiency.

Implementation Strategies:

- Risk-Based Oversight: Implement tiered oversight models where the level of human intervention corresponds to the associated risk of AI decisions.

- Decision Thresholds: Establish specific criteria that dictate when human intervention is necessary, ensuring that AI operates within predefined boundaries.
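The two strategies above can be combined in a few lines of code. The following is a minimal Python sketch, not a prescribed design: the risk tiers, the confidence thresholds, and the names `Route`, `OversightPolicy`, and `route_decision` are hypothetical. Each AI decision is routed to automated handling, human review, or a human decision depending on its risk tier and the model's confidence.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional


class Route(Enum):
    AUTO = "automated"               # decision applied automatically and logged
    HUMAN_REVIEW = "human_review"    # a person validates before it takes effect
    HUMAN_DECIDES = "human_decides"  # AI output is advisory only


@dataclass(frozen=True)
class OversightPolicy:
    # Minimum model confidence for automated handling, per risk tier.
    # None means the tier is never automated.
    auto_threshold: Dict[str, Optional[float]]
    # Below this confidence, the AI output is only advisory.
    review_floor: float


def route_decision(risk_tier: str, confidence: float, policy: OversightPolicy) -> Route:
    """Route one AI decision to an oversight level based on risk tier and confidence."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    # Unknown tiers fall back to None, i.e. they are never automated.
    threshold = policy.auto_threshold.get(risk_tier)
    if threshold is not None and confidence >= threshold:
        return Route.AUTO
    if confidence >= policy.review_floor:
        return Route.HUMAN_REVIEW
    return Route.HUMAN_DECIDES


# Hypothetical thresholds for illustration only.
policy = OversightPolicy(
    auto_threshold={"low": 0.80, "medium": 0.95, "high": None},
    review_floor=0.60,
)
print(route_decision("low", 0.90, policy))     # Route.AUTO
print(route_decision("medium", 0.90, policy))  # Route.HUMAN_REVIEW
print(route_decision("high", 0.99, policy))    # Route.HUMAN_REVIEW
print(route_decision("high", 0.40, policy))    # Route.HUMAN_DECIDES
```

High-risk and unknown tiers are never automated in this sketch, which keeps the default conservative; in practice the thresholds would come from the organization's own risk assessment.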

AI Explainability and Interpretability

Ensuring that AI systems are transparent and their decision-making processes are understandable to human collaborators is crucial for trust and effective collaboration (Hemmer et al., 2024).

Implementation Strategies:

- Model Documentation: Utilize standardized documentation methods, such as datasheets and model cards, to provide comprehensive information about AI models’ purposes, data sources, performance metrics, and limitations.

- Explainability Tools: Employ tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) to elucidate AI decision-making processes.
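To illustrate what a SHAP-style explanation computes, the sketch below handles the one case where Shapley values have a simple closed form: a linear model with independent features, where each feature's contribution is its weight times its deviation from the background mean. This is a dependency-free illustration, not the API of the SHAP or LIME libraries; the weights and data are hypothetical.

```python
from statistics import fmean
from typing import List, Sequence


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    """A linear scoring model f(x) = bias + sum(w_i * x_i)."""
    return bias + sum(w * xi for w, xi in zip(weights, x))


def linear_shap(weights: Sequence[float], x: Sequence[float],
                background: Sequence[Sequence[float]]) -> List[float]:
    """Exact Shapley values for a linear model, assuming independent features.

    phi_i = w_i * (x_i - E[x_i]), where E[x_i] is the mean of feature i
    over the background (reference) data.
    """
    means = [fmean(column) for column in zip(*background)]
    return [w * (xi - m) for w, xi, m in zip(weights, x, means)]


weights, bias = [0.5, -2.0], 1.0
background = [[0.0, 1.0], [2.0, 3.0]]  # hypothetical reference data
x = [3.0, 1.0]

phi = linear_shap(weights, x, background)
baseline = fmean(predict(weights, bias, row) for row in background)
print(phi)                                             # [1.0, 2.0]
print(sum(phi), predict(weights, bias, x) - baseline)  # 3.0 3.0
```

The last line shows the additivity property that makes such explanations auditable: the feature contributions sum exactly to the gap between this prediction and the average prediction.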

Human–Computer Interaction (HCI) for AI Systems Design

HCI for AI systems focuses on designing interfaces and interactions that optimize human engagement, usability, and trustworthiness in AI-powered technologies (Ehsan & Riedl, 2020). Ensuring that AI systems are transparent, understandable, and reliable is crucial to fostering user confidence.

Implementation Strategies:

- User-Centered Design (UCD): Design AI around user needs through research, prototyping, and usability testing. Measure AI success based on usability, not just accuracy.

- Trust Calibration & Confidence Management: Use confidence scores to show how certain the AI is about its predictions, and offer adaptive trust levels that let users customize the degree of AI automation according to their trust preferences.
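Confidence scores only support trust calibration if they are themselves calibrated, that is, if predictions shown at 90% confidence are right about 90% of the time. A common check is the expected calibration error (ECE). The Python sketch below uses equal-width bins; the bin count and the example data are assumptions for illustration.

```python
from typing import List, Sequence


def expected_calibration_error(confidences: Sequence[float],
                               correct: Sequence[bool],
                               n_bins: int = 10) -> float:
    """Weighted mean of |accuracy - mean confidence| over equal-width confidence bins."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need equally sized, non-empty inputs")
    bins: List[List[int]] = [[] for _ in range(n_bins)]
    for i, c in enumerate(confidences):
        # A confidence of exactly 1.0 goes into the last bin instead of overflowing.
        bins[min(int(c * n_bins), n_bins - 1)].append(i)
    n = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        accuracy = sum(correct[i] for i in members) / len(members)
        mean_conf = sum(confidences[i] for i in members) / len(members)
        ece += len(members) / n * abs(accuracy - mean_conf)
    return ece


# Hypothetical: the model claims 90% confidence but is right 3 times out of 4.
print(round(expected_calibration_error([0.9] * 4, [True, True, True, False]), 3))  # 0.15
```

A large ECE signals that displayed confidence scores would mislead users, and that the model should be recalibrated before its scores are used to set trust levels.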

Context-Aware AI Systems

Developing AI systems that adapt to the context and expertise level of human users enhances collaboration and ensures that AI provides relevant support without overwhelming the user (Zheng et al., 2023).

Implementation Strategies:

- User Profiling: Design AI systems that can assess and adapt to the user’s knowledge level, providing assistance that complements the user’s expertise.

- Dynamic Interaction Models: Create interfaces that allow users to adjust the level of AI assistance based on the task complexity and their comfort level.
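The two strategies above can be sketched as a single selection rule. In this minimal Python example, the expertise and complexity scores, the three assistance levels, and the cut-off values are all hypothetical; a user's explicit choice always overrides the profile-based default, reflecting the dynamic interaction model.

```python
from enum import IntEnum
from typing import Optional


class Assistance(IntEnum):
    MINIMAL = 1   # brief hints for experienced users
    GUIDED = 2    # step-by-step suggestions with short explanations
    DETAILED = 3  # full walkthroughs with background explanations


def choose_assistance(expertise: float, task_complexity: float,
                      user_override: Optional[Assistance] = None) -> Assistance:
    """Pick an assistance level from a user-profile estimate and task complexity.

    Both scores are hypothetical values in [0, 1]; the cut-offs are illustrative.
    An explicit user choice always takes precedence.
    """
    if user_override is not None:
        return user_override
    if not (0.0 <= expertise <= 1.0 and 0.0 <= task_complexity <= 1.0):
        raise ValueError("scores must be in [0, 1]")
    gap = task_complexity - expertise  # how far the task exceeds the user's expertise
    if gap > 0.3:
        return Assistance.DETAILED
    if gap > -0.2:
        return Assistance.GUIDED
    return Assistance.MINIMAL


print(choose_assistance(0.2, 0.9).name)  # DETAILED
print(choose_assistance(0.9, 0.3).name)  # MINIMAL
print(choose_assistance(0.9, 0.3, user_override=Assistance.GUIDED).name)  # GUIDED
```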

Training and AI Literacy Programs

Educating stakeholders about AI capabilities, limitations, and ethical considerations fosters a culture of informed collaboration and trust in AI systems (Sturm et al., 2021).

Implementation Strategies:

- Comprehensive Training Programs: Develop curricula that cover AI fundamentals, ethical issues, and practical applications tailored to different stakeholder groups.

- Continuous Learning Platforms: Implement platforms that provide ongoing education and updates on AI developments, ensuring stakeholders remain informed about the latest advancements and best practices.

Ethical Role-Based AI Governance

Defining clear roles and responsibilities within AI systems ensures that ethical considerations are integrated into AI operations, preventing misuse and promoting accountability (Te’eni et al., 2023).

Implementation Strategies:

- Responsibility Matrices: Develop frameworks that delineate the responsibilities of AI systems and human operators, ensuring clarity in decision-making processes.

- Ethical Guidelines: Establish and enforce ethical guidelines that govern AI behaviour, aligning with organizational values and societal norms.
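A responsibility matrix is often expressed in the RACI form (Responsible, Accountable, Consulted, Informed); the source does not prescribe a format, so RACI is an assumption here. The Python sketch below validates such a matrix with two illustrative rules: every task needs exactly one accountable role, and an AI system may never be the accountable party. Role and task names are hypothetical.

```python
from typing import Dict, Iterable, List

# R = Responsible, A = Accountable, C = Consulted, I = Informed.
VALID_CODES = {"R", "A", "C", "I"}


def validate_raci(matrix: Dict[str, Dict[str, str]],
                  non_accountable: Iterable[str] = ("ai_system",)) -> List[str]:
    """Check a task -> {role: code} matrix; return a list of problems (empty if none)."""
    blocked = set(non_accountable)
    problems = []
    for task, assignments in matrix.items():
        codes = list(assignments.values())
        unknown = set(codes) - VALID_CODES
        if unknown:
            problems.append(f"{task}: unknown codes {sorted(unknown)}")
        if codes.count("A") != 1:
            problems.append(f"{task}: needs exactly one Accountable role, found {codes.count('A')}")
        if "R" not in codes:
            problems.append(f"{task}: no Responsible role")
        for role in blocked:
            if assignments.get(role) == "A":
                problems.append(f"{task}: {role} cannot be Accountable")
    return problems


# Hypothetical roles and tasks.
matrix = {
    "approve model for deployment": {
        "ml_lead": "R", "product_owner": "A", "ethics_board": "C", "ops": "I"},
    "override AI credit decision": {"loan_officer": "R", "ai_system": "I"},
}
for problem in validate_raci(matrix):
    print(problem)
# override AI credit decision: needs exactly one Accountable role, found 0
```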

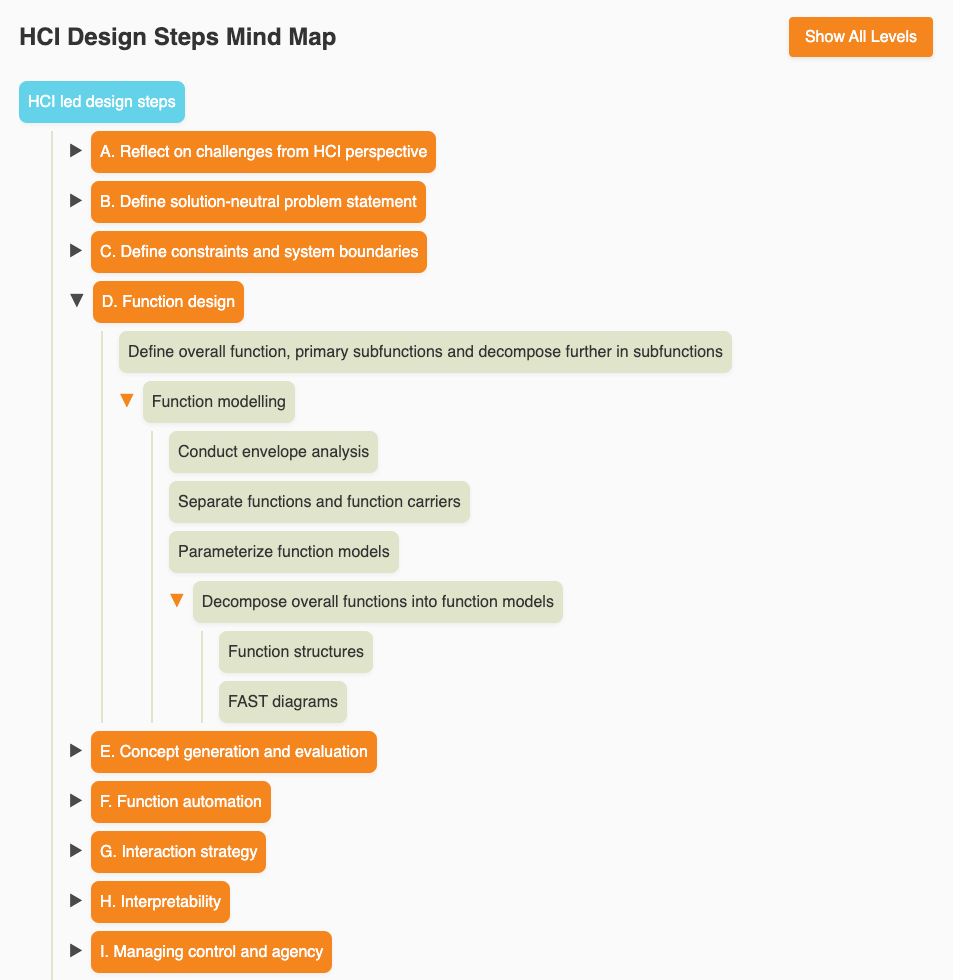

Human-Computer Interaction (HCI) in AI System Design: A Comprehensive Framework

Human-computer interaction has become increasingly crucial in the age of AI. While traditional HCI focused on designing interfaces for deterministic systems, modern AI systems present unique challenges due to their complexity, autonomy, and often unpredictable nature. As AI systems become more sophisticated and pervasive, the need for thoughtful, human-centred design approaches becomes paramount to ensure these systems remain understandable, controllable, and aligned with human needs and values (Amershi et al., 2019).

The challenges in AI system design extend beyond traditional usability concerns. Users must understand AI capabilities and limitations, maintain appropriate levels of trust, and collaborate effectively with systems that can learn and adapt. These considerations have led to the development of specialized HCI-led design approaches that address the unique characteristics of AI systems. Recent work by Yang et al. (2020) emphasizes the importance of rethinking traditional HCI methods for AI systems, particularly in the areas of transparency, trust calibration, and interactive machine learning. The framework presented here provides a structured approach to these challenges while keeping the focus on human needs and capabilities. It is based on a 2024 course taught by Professor Per Ola Kristensson at the University of Cambridge.

Key Components of HCI-led Design for AI Systems

A. Initial HCI perspective: The design process addresses four fundamental challenges: control, interpretability, agency, and governance. Control focuses on how users can guide and influence AI system behaviour. Interpretability ensures users can understand system decisions and actions. Agency determines the balance of autonomy between the user and the system. Governance establishes frameworks for responsible AI deployment and usage (Shneiderman, 2020).

B. Problem definition and constraints: A solution-neutral problem statement helps avoid premature commitment to specific technologies. This approach considers various abstraction levels and system boundaries, incorporating user-elicited requirements alongside technical, business, and regulatory constraints. The target audience analysis considers behavioural, technological, and demographic factors influencing system adoption and usage.

C to F. Function design and function automation. Design decomposition helps manage complexity by breaking down overall system functionality into manageable components. The automation framework follows a systematic approach to determining appropriate levels of automation, considering both human and AI capabilities. This includes strategies for maximizing automation efficiency while maintaining human oversight where necessary (Parasuraman et al., 2000).
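As an illustration of steps C to F, the Python sketch below assigns each decomposed sub-function to one of the four information-processing stages used by Parasuraman et al. (2000) and to a level on their 10-point automation scale. It then caps high-risk decision and action sub-functions at level 5, where the system executes its suggestion only if the human approves. The triage example and its levels are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import List

# Four information-processing stages from Parasuraman et al. (2000).
STAGES = ("information acquisition", "information analysis",
          "decision and action selection", "action implementation")
# Level 5 on the 10-point scale: the system executes its suggestion only if the human approves.
HUMAN_APPROVAL_CAP = 5


@dataclass(frozen=True)
class SubFunction:
    name: str
    stage: str
    level: int       # 1 = no computer assistance ... 10 = fully autonomous
    high_risk: bool


def apply_oversight(functions: List[SubFunction]) -> List[SubFunction]:
    """Cap automation of high-risk decision and action sub-functions at human approval."""
    adjusted = []
    for f in functions:
        if f.stage not in STAGES or not 1 <= f.level <= 10:
            raise ValueError(f"invalid sub-function: {f}")
        if f.high_risk and f.stage in STAGES[2:]:
            f = replace(f, level=min(f.level, HUMAN_APPROVAL_CAP))
        adjusted.append(f)
    return adjusted


# Hypothetical decomposition of a clinical triage assistant.
design = [
    SubFunction("collect vital signs", STAGES[0], 9, False),
    SubFunction("flag abnormal readings", STAGES[1], 8, True),
    SubFunction("recommend treatment", STAGES[2], 7, True),
    SubFunction("send appointment reminder", STAGES[3], 10, False),
]
for f in apply_oversight(design):
    print(f.name, f.level)
```

The capping rule is one possible design choice: it keeps analysis highly automated (the AI may flag readings freely) while reserving the decision itself for a human.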

G. Interaction strategy. Mixed-initiative interaction, as described by Horvitz (1999), provides a framework for balancing system autonomy with user control. This approach considers utility, balance, control, and uncertainty in determining when and how the system should take initiative. The concept of alignment and teaming (Bansal et al., 2019) emphasizes the importance of creating effective human-AI partnerships.
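The decision-theoretic core of mixed-initiative interaction can be sketched as an expected-utility comparison between doing nothing, engaging the user in a dialog, and acting autonomously, given the probability that the user actually has a particular goal. The Python example below follows that idea; the utility values are hypothetical and would in practice be elicited from users or estimated from data.

```python
from typing import Dict, Tuple

# For each option: (utility if the user has the goal, utility if not).
Utilities = Dict[str, Tuple[float, float]]


def best_initiative(p_goal: float, utilities: Utilities) -> str:
    """Return the option with the highest expected utility given P(goal | evidence)."""
    if not 0.0 <= p_goal <= 1.0:
        raise ValueError("p_goal must be a probability")

    def expected(option: str) -> float:
        u_goal, u_no_goal = utilities[option]
        return p_goal * u_goal + (1.0 - p_goal) * u_no_goal

    return max(utilities, key=expected)


# Hypothetical utilities for an assistant deciding whether to act on a user's behalf.
utilities = {
    "no_action": (0.0, 1.0),  # misses a chance to help, but never intrudes
    "dialog": (0.7, 0.6),     # asks first: a small interruption cost either way
    "action": (1.0, 0.0),     # ideal if the goal is real, disruptive if not
}
for p in (0.1, 0.5, 0.95):
    print(p, best_initiative(p, utilities))
# 0.1 no_action
# 0.5 dialog
# 0.95 action
```

With these numbers the system stays quiet below roughly 0.36, asks between about 0.36 and 0.67, and acts above that, so uncertainty directly determines how much initiative it takes.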

H to I. Interpretability and control. Modern AI systems require multiple levels of interpretability evaluation: application-grounded (with domain experts), human-grounded (with general users), and functionally-grounded (technical evaluation). The H-metaphor for shared control, inspired by horse-rider interaction, provides an intuitive framework for understanding different control modes. This includes “loose rein” control for familiar situations and “tight rein” control for uncertain or critical scenarios (Flemisch et al., 2003).

J. Risk assessment and safety. A comprehensive approach to risk assessment considers the dynamic system boundary, including user interfaces, applicable rules, principals (personas), agents, regulations, economic factors, and training data. The framework employs various assessment methods, such as SWIFT (Structured What-If Technique) and FMEA (Failure Mode and Effects Analysis), to identify and mitigate potential risks.
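In FMEA, each failure mode is commonly rated for severity, occurrence, and detectability on 1 to 10 scales, and the product of the three ratings, the Risk Priority Number (RPN), is used to rank mitigation work. The Python sketch below shows that calculation; the failure modes and ratings are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FailureMode:
    description: str
    severity: int    # 1 (negligible) .. 10 (catastrophic)
    occurrence: int  # 1 (remote) .. 10 (almost certain)
    detection: int   # 1 (almost certain to be detected) .. 10 (undetectable)

    def __post_init__(self) -> None:
        for rating in (self.severity, self.occurrence, self.detection):
            if not 1 <= rating <= 10:
                raise ValueError("ratings must be integers from 1 to 10")

    @property
    def rpn(self) -> int:
        """Risk Priority Number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


def prioritise(modes: List[FailureMode]) -> List[FailureMode]:
    """Order failure modes from highest to lowest RPN for mitigation planning."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


# Hypothetical failure modes and ratings for an AI-assisted prescribing tool.
modes = [
    FailureMode("UI hides model confidence", 5, 3, 2),
    FailureMode("model suggests wrong dosage", 9, 4, 7),
    FailureMode("input data drifts from training data", 6, 6, 5),
]
for m in prioritise(modes):
    print(m.rpn, m.description)
# 252 model suggests wrong dosage
# 180 input data drifts from training data
# 30 UI hides model confidence
```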

K. Ethics and Governance. Ethical considerations are integrated throughout the design process, with particular attention to potential human errors using the Skills, Rules, and Knowledge (SRK) model (Rasmussen, 1983). This helps ensure AI systems support human capabilities rather than introducing new risks or limitations.

AI Steering: Improving and Controlling AI Systems

A Maturity-Based Approach to Implementation

The journey of implementing and improving AI systems follows a natural progression that aligns with organizational maturity and resource availability. Understanding this progression is crucial for organizations to make informed decisions about their AI development strategy (Bommasani et al., 2021).

1 Prompt Engineering: Entry-Level Enhancement

Prompt engineering represents the most accessible starting point for AI improvement, requiring relatively minimal technical infrastructure and investment. This approach focuses on optimizing the interaction with existing AI models through carefully crafted inputs. Effective prompt engineering can significantly improve model performance without model modifications, though improvements vary greatly depending on the specific task and context (Liu et al., 2022).

Complexity: Low to Medium

Time Investment: Days to Weeks

Required Expertise: Domain knowledge and basic AI understanding

Infrastructure Needs: Minimal
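A typical prompt-engineering technique is the few-shot prompt: an instruction followed by a handful of worked examples and then the new input. The Python sketch below only assembles the prompt text; the instruction, examples, and the `Input:`/`Output:` layout are hypothetical, and the call to a model is deliberately left out because it depends on whichever model API an organization uses.

```python
from typing import List, Tuple


def build_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a few-shot prompt: instruction, worked examples, then the new input."""
    lines = [f"Instruction: {instruction}", ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


prompt = build_prompt(
    "Classify the customer message as COMPLAINT or QUESTION.",
    [("My invoice is wrong again.", "COMPLAINT"),
     ("How do I reset my password?", "QUESTION")],
    "Why was I charged twice?",
)
print(prompt)
```

Keeping prompts in versioned templates like this makes them testable and reviewable, which matters once prompts become part of a governed system.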

2 Retrieval Augmented Generation (RAG): Intermediate Enhancement

RAG represents a significant step up in complexity while offering substantial improvements in AI system performance. This approach combines the power of large language models with custom knowledge bases, enabling more accurate and contextually relevant outputs (Lewis et al., 2020).

Complexity: Medium to High

Time Investment: Weeks to Months

Required Expertise: Software engineering, data engineering, ML basics

Infrastructure Needs: Moderate

- Vector databases

- Document processing pipelines

- API integration capabilities
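The retrieval step of RAG can be sketched without any infrastructure. In the Python example below, a bag-of-words cosine similarity over an in-memory list stands in for the learned embeddings and vector database a production system would use; the documents are hypothetical, and the final call to the language model is omitted.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def _vectorise(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k documents most similar to the query, best first."""
    q = _vectorise(query)
    scored = [(_cosine(q, _vectorise(d)), d) for d in documents]
    return sorted(scored, key=lambda s: s[0], reverse=True)[:k]


def build_rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Ground the model's answer in the retrieved passages."""
    context = "\n".join(f"- {doc}" for _, doc in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"


# Hypothetical knowledge base.
docs = [
    "The EU AI Act classifies AI systems by risk level.",
    "ISO/IEC 42001 specifies requirements for an AI management system.",
    "Vector databases store embeddings for similarity search.",
]
print(build_rag_prompt("Which standard specifies an AI management system?", docs, k=1))
```

Because the answer is grounded in retrieved passages, the sources behind each output can be logged and shown to users, which also supports the transparency goals discussed in this chapter.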

3 Fine-tuning Open Source Models: Advanced Implementation

Fine-tuning represents a more sophisticated approach requiring substantial technical expertise and computational resources. This method allows organizations to adapt existing models to specific use cases while leveraging pre-trained capabilities (Wei et al., 2022).

Complexity: High

Time Investment: Months

Required Expertise: Machine learning engineering, MLOps

Infrastructure Needs: Substantial

- GPU/TPU resources

- Training infrastructure

- Model versioning systems

4 Pre-training Custom Models: Expert-Level Implementation

Pre-training custom models represents the most complex and resource-intensive approach to AI improvement. This method provides maximum flexibility and potential for innovation but requires significant organizational maturity in AI capabilities (Brown et al., 2020).

Complexity: Very High

Time Investment: Months to Years

Required Expertise: Advanced ML research, distributed systems

Infrastructure Needs: Extensive

- Large-scale distributed computing

- Substantial data storage

- Advanced monitoring systems
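The scale of pre-training can be estimated with a widely used rule of thumb for dense transformer models: training compute is roughly 6 × N × D floating-point operations, where N is the number of parameters and D the number of training tokens. The Python sketch below turns that into accelerator-days; the model size, token count, per-device throughput, and utilisation are all hypothetical inputs.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute for dense transformers: C ~ 6 * N * D FLOPs."""
    return 6.0 * n_params * n_tokens


def gpu_days(total_flops: float, peak_flops_per_gpu: float, utilisation: float) -> float:
    """GPU-days needed at an assumed sustained fraction of peak throughput."""
    seconds = total_flops / (peak_flops_per_gpu * utilisation)
    return seconds / 86_400


# Hypothetical numbers: 7e9 parameters, 1e12 training tokens,
# 1e15 FLOP/s peak per accelerator, 40% sustained utilisation.
c = training_flops(7e9, 1e12)
print(f"{c:.2e} FLOPs, about {gpu_days(c, 1e15, 0.4):,.0f} GPU-days")
```

Under these assumptions a single run needs roughly 1,200 GPU-days before any experimentation or failed runs, which illustrates why this tier demands large-scale distributed computing.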

Actionable instruments supporting AI alignment & transparency

AI must be aligned with human values, transparent in its operations, and continuously monitored to ensure fairness, reliability, and adaptability. This requires real-time oversight, dynamic governance, and continuous improvement through transparent AI development practices.

Algorithmic Impact Assessments (AIA)

AIAs help evaluate the ethical, social, and safety risks of AI models before deployment, ensuring alignment with organizational and societal values (Reisman et al., 2018).

Implementation Strategies:

- Develop structured evaluation frameworks to analyze the risks of AI models before they go live.

- Conduct pre-deployment and post-deployment AIAs to assess long-term AI behaviour.

- Require organizations to publicly disclose AI impact statements for high-risk AI applications.

Bias, Fairness & Robustness Audits

These audits ensure that AI models operate without bias, maintain fairness, and perform reliably across diverse conditions (Mitchell et al., 2019).

Implementation Strategies:

- Conduct automated bias detection and fairness testing using standardized fairness metrics (e.g., demographic parity, equalized odds).

- Utilize AI fairness tools like IBM AI Fairness 360 and Google’s What-If Tool.

- Perform regular adversarial testing and stress testing to assess AI robustness.
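The two fairness metrics named above can be computed directly from predictions, labels, and group membership. Demographic parity compares selection rates across groups; equalized odds compares true-positive and false-positive rates. The dependency-free Python sketch below reports the largest between-group gap for each; toolkits such as IBM AI Fairness 360 provide tested implementations, and the audit data here is hypothetical.

```python
from typing import Dict, List, Sequence


def _rate(values: List[int], what: str) -> float:
    if not values:
        raise ValueError(f"group has no samples for {what}")
    return sum(values) / len(values)


def fairness_gaps(y_true: Sequence[int], y_pred: Sequence[int],
                  group: Sequence[str]) -> Dict[str, float]:
    """Demographic parity and equalized odds differences for binary predictions."""
    selection, tpr, fpr = {}, {}, {}
    for g in sorted(set(group)):
        idx = [i for i, gi in enumerate(group) if gi == g]
        selection[g] = _rate([y_pred[i] for i in idx], "selection rate")
        tpr[g] = _rate([y_pred[i] for i in idx if y_true[i] == 1], "true positive rate")
        fpr[g] = _rate([y_pred[i] for i in idx if y_true[i] == 0], "false positive rate")

    def gap(rates: Dict[str, float]) -> float:
        return max(rates.values()) - min(rates.values())

    return {
        # Demographic parity: equal selection rates P(pred = 1) across groups.
        "demographic_parity_difference": gap(selection),
        # Equalized odds: equal TPR and FPR across groups; report the larger gap.
        "equalized_odds_difference": max(gap(tpr), gap(fpr)),
    }


# Hypothetical audit sample with two groups.
group = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
print(fairness_gaps(y_true, y_pred, group))
# {'demographic_parity_difference': 0.5, 'equalized_odds_difference': 0.5}
```

A value of 0 means parity on that metric; an audit would compare the gaps against a tolerance agreed in advance rather than expecting exact equality.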

AI Model Version Control & Continuous Monitoring

Tracking model updates, training data modifications, and decision logic ensures accountability and consistency in AI behaviour (Amershi et al., 2019).

Implementation Strategies:

- Use version control systems to track changes in model architecture and training datasets.

- Implement automated monitoring dashboards that flag deviations in AI behaviour.

- Set up automated alerts for shifts in AI model performance due to concept drift or unexpected correlations.
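One simple way to implement such alerts is the Population Stability Index (PSI), which compares the distribution of a feature or model score in live traffic with its distribution at deployment. The Python sketch below computes PSI over fixed bins; the bin edges, example scores, and the 0.2 alert threshold (a common rule of thumb, not a standard) are assumptions to be tuned per system.

```python
import bisect
import math
from typing import List, Sequence


def _bin_shares(values: Sequence[float], edges: Sequence[float], eps: float) -> List[float]:
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        # Values outside the edges are clipped into the first or last bin.
        b = bisect.bisect_right(edges, v) - 1
        counts[min(max(b, 0), n_bins - 1)] += 1
    # eps avoids log(0) for empty bins.
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: Sequence[float], live: Sequence[float],
                               edges: Sequence[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (live_share - base_share) * ln(live_share / base_share)."""
    base = _bin_shares(baseline, edges, eps)
    cur = _bin_shares(live, edges, eps)
    return sum((c - b) * math.log(c / b) for c, b in zip(cur, base))


def drift_alert(psi: float, threshold: float = 0.2) -> bool:
    """Flag drift above a configurable threshold (0.2 is a common rule of thumb)."""
    return psi > threshold


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline_scores = [0.1, 0.3, 0.6, 0.9]  # hypothetical model scores at deployment
live_scores = [0.8, 0.85, 0.9, 0.95]    # hypothetical scores this week
psi = population_stability_index(baseline_scores, live_scores, edges)
print(round(psi, 2), drift_alert(psi))
```

In a monitoring pipeline this check would run on a schedule for each important input feature and for the model's output scores, with alerts routed to the team accountable for the model.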

Data Provenance & Traceability

Ensuring transparent data sourcing, preprocessing, and labelling prevents hidden biases and maintains data integrity (Gebru et al., 2021).

Implementation Strategies:

- Maintain audit logs for every stage of data processing.

- Use blockchain or cryptographic hash functions to track data lineage.

- Data documentation (Datasheets for Datasets) must disclose potential biases and limitations.
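The cryptographic-hash option can be sketched as a hash chain: each audit-log entry stores the hash of the previous entry, so editing or reordering any past step breaks verification. This is a minimal Python sketch, not a blockchain (there is no distributed consensus), and the lineage records are hypothetical. Deleting entries from the end of the log is not detected unless the latest hash is also stored somewhere independent.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: Dict, prev_hash: str) -> str:
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_step(log: List[Dict], record: Dict) -> None:
    """Append a processing step; its hash also covers the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": _digest(record, prev)})


def verify(log: List[Dict]) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(entry["record"], prev):
            return False
        prev = entry["hash"]
    return True


# Hypothetical lineage for a training dataset.
log: List[Dict] = []
append_step(log, {"step": "ingest", "source": "applications_2023.csv", "rows": 10_000})
append_step(log, {"step": "deduplicate", "rows": 9_870})
append_step(log, {"step": "label", "annotators": 3})
print(verify(log))                # True
log[1]["record"]["rows"] = 9_990  # silent tampering with a past step
print(verify(log))                # False
```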

Transparency Reports & Disclosure Requirements

AI systems that impact critical decisions should have publicly available transparency reports that explain decision logic, risk assessments, and system limitations (Raji et al., 2021).

Implementation Strategies:

- Organizations must publish AI transparency reports detailing their models’ intended purpose, data sources, fairness measures, and real-world performance.

- Ensure public disclosure of AI system limitations in critical domains (e.g., predictive policing, facial recognition).

- Develop AI fact sheets that summarize key ethical concerns and technical limitations.

- Implement sector-specific disclosure requirements in finance, healthcare, criminal justice, and hiring systems.
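The reporting items listed above can be captured in a machine-readable structure so that incomplete reports are caught before publication. The Python sketch below is one possible shape; the class name and field names are hypothetical and simply mirror the items in the list (purpose, data sources, fairness measures, real-world performance, limitations, sector).

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass
class TransparencyReport:
    # Field names are hypothetical; they mirror the reporting items listed above.
    system_name: str
    intended_purpose: str
    data_sources: List[str]
    fairness_measures: Dict[str, float]
    real_world_performance: Dict[str, float]
    known_limitations: List[str]
    sector: str

    def missing_fields(self) -> List[str]:
        """Names of fields left empty; an incomplete report should not be published."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        missing = self.missing_fields()
        if missing:
            raise ValueError(f"report incomplete: {missing}")
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Hypothetical report for a finance use case.
report = TransparencyReport(
    system_name="loan-screening-v2",
    intended_purpose="Pre-screen consumer loan applications for human review",
    data_sources=["internal applications 2019-2023"],
    fairness_measures={"demographic_parity_difference": 0.04},
    real_world_performance={"auc_last_quarter": 0.81},
    known_limitations=["not validated for applicants without credit history"],
    sector="finance",
)
print(report.to_json())
```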

Stress Testing & Adversarial Resilience

AI should be stress-tested under extreme conditions to ensure robustness, security, and fairness in real-world applications (Carlini et al., 2019).

Implementation Strategies:

- Perform synthetic stress tests where AI is exposed to worst-case scenarios.

- Use adversarial training techniques to prevent AI manipulation.

- Conduct external red teaming exercises to identify vulnerabilities in AI models.
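A basic adversarial stress test perturbs each input in the direction that most increases the model's loss and measures how much accuracy survives. The Python sketch below applies the fast gradient sign method (FGSM) to a logistic regression model, where the input gradient of the cross-entropy loss has the closed form (p − y)·w. The weights, test data, and perturbation budget are hypothetical; real audits would use attack libraries against the deployed model.

```python
import math
from typing import List, Sequence, Tuple


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Logistic regression: P(y = 1 | x)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w: Sequence[float], b: float, x: Sequence[float], y: int, epsilon: float) -> List[float]:
    """Fast gradient sign method: x_adv = x + epsilon * sign(dLoss/dx).

    For logistic regression with binary cross-entropy, dLoss/dx = (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def robust_accuracy(w: Sequence[float], b: float,
                    data: List[Tuple[List[float], int]], epsilon: float) -> float:
    """Accuracy on inputs perturbed by FGSM within an L-infinity budget epsilon."""
    correct = 0
    for x, y in data:
        x_adv = fgsm(w, b, x, y, epsilon)
        correct += int((predict_proba(w, b, x_adv) >= 0.5) == bool(y))
    return correct / len(data)


# Hypothetical trained weights and a tiny labelled test set.
w, b = [2.0, -1.0], 0.0
data = [([1.0, 0.5], 1), ([-1.0, 0.5], 0), ([0.2, 0.1], 1)]
for eps in (0.0, 0.3):
    print(eps, robust_accuracy(w, b, data, eps))
```

Here a small perturbation flips the prediction for the example closest to the decision boundary, dropping accuracy from 1.0 to about 0.67. Adversarial training would add such perturbed examples, with their correct labels, to the training data.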

Actionable instruments supporting accountability & oversight

AI accountability requires clear ownership, governance structures, legal frameworks and accountability mechanisms to ensure responsible use, prevent harm, and enable redress for affected individuals (Zerilli et al., 2019). Organizations must embed legal, technical, and operational mechanisms to enforce accountability across AI development and deployment.

Legal Liability & Redress Frameworks

Establish clear accountability structures for AI-related harms, ensuring individuals and organizations can seek redress when AI systems cause unintended consequences (Ge & Zhu, 2024).

Implementation Strategies:

- Define legal liability assignments for developers, deployers, and users when AI systems cause harm.

- Establish dispute resolution mechanisms for AI-related incidents, including mediation and legal redress.

- Align liability frameworks with existing regulatory requirements (e.g., EU AI Act, GDPR).

Internal & External AI Audits & Certifications

Ethics-based auditing (EBA) is a systematic approach to evaluate an entity’s past or current actions to ensure alignment with ethical principles or standards (Mökander & Floridi, 2022). Independent audits enhance trust, transparency, and regulatory compliance, preventing unchecked AI risks (Raji et al., 2022).

Implementation Strategies:

- Conduct ethics-based audits, that is, systematic evaluations of an entity’s past or present behaviour to ensure alignment with relevant principles or norms.

- Mandate third-party AI audits to validate AI compliance with transparency, fairness, and ethical standards.

- Develop industry certifications for ethical AI, building on standards and frameworks such as ISO/IEC 42001 and the NIST AI RMF.

- Require companies to disclose audit results to ensure public transparency.

User Rights & AI Recourse Mechanisms

Allow individuals affected by AI decisions to contest, appeal, and seek remedies for unjust outcomes (Wachter et al., 2017).

Implementation Strategies:

- Implement right-to-appeal processes for users who experience negative AI-driven outcomes (e.g., denied loans, unfair job rejections).

- Require explainability mechanisms (e.g., model cards, SHAP, LIME) so users understand why a decision was made.

- Ensure corrective measures are in place, enabling organizations to reverse or adjust AI decisions when errors occur.
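Besides explaining why a decision was made, recourse mechanisms can tell an affected person what would have to change for a different outcome. This is the idea of counterfactual explanations associated with Wachter et al. (2017). For a linear scoring model, the smallest change (in Euclidean distance) that reaches the decision threshold has a closed form, shown in the Python sketch below. The credit model, its features, and the applicant are hypothetical, and the sketch ignores real-world constraints such as immutable features or the cost of each change.

```python
from typing import List, Sequence


def score(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Linear decision score; the application is approved when the score is above 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def linear_counterfactual(w: Sequence[float], b: float, x: Sequence[float],
                          threshold: float = 0.0, margin: float = 1e-6) -> List[float]:
    """Closest point (in L2 distance) whose score reaches threshold + margin.

    For a linear score s(x) = w.x + b the minimal change is along w:
    x' = x + ((threshold + margin - s(x)) / ||w||^2) * w
    """
    norm_sq = sum(wi * wi for wi in w)
    if norm_sq == 0:
        raise ValueError("the model ignores every feature; no counterfactual exists")
    step = (threshold + margin - score(w, b, x)) / norm_sq
    return [xi + step * wi for xi, wi in zip(x, w)]


# Hypothetical credit model: features are (income in 10k EUR, debt-to-income ratio).
w, b = [0.5, -1.0], -1.0
applicant = [2.0, 0.8]
print(score(w, b, applicant))      # about -0.8: application denied
cf = linear_counterfactual(w, b, applicant)
print([round(v, 3) for v in cf])   # [2.32, 0.16]
print(score(w, b, cf) > 0)         # True
```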

AI Ethics Review Boards

Independent oversight committees ensure that AI deployments align with ethical principles, regulatory requirements, and social responsibility (Lechterman, 2023).

Implementation Strategies:

- Establish multidisciplinary AI Ethics Review Boards within organizations and regulatory bodies.

- Require review boards to assess major AI deployments before public release, ensuring ethical compliance.

- Promote public participation in AI governance, allowing external experts and affected communities to contribute.

Whistleblower & Incident Reporting Mechanisms

Provide secure channels for employees and users to report AI-related harms, biases, or ethical concerns (Brundage et al., 2020).

Implementation Strategies:

- Implement confidential reporting systems within organizations for AI-related ethical concerns.

- Establish government-backed AI ethics hotlines for public whistleblowing related to AI misuse.

- Ensure legal protections for whistleblowers who expose AI-related discrimination or harm.

Regulatory Alignment & Compliance

Ensure AI systems adhere to international and sector-specific regulatory frameworks, including ISO/IEC 42001 (AI management systems), the EU AI Act, the GDPR, and the NIST AI RMF, with transparent audits and compliance reporting (Jobin et al., 2019; European Commission, 2021).

Implementation Strategies:

- AI Regulatory Compliance Audits

- Impact-Based AI Regulation Frameworks

- Transparency in AI Compliance Reporting

- AI Risk Mitigation & Legal Accountability

- Refer also to the tool below.

The Challenge of AI Accountability and Regulatory Compliance

Establishing accountability and building effective regulatory compliance frameworks for AI systems presents unique challenges due to several interconnected factors. Krafft et al. (2020) identify three fundamental challenges: the complexity of AI systems, the dynamic nature of AI development, and the distributed responsibility across multiple stakeholders.

The first major challenge lies in the technical complexity of AI systems. Modern AI systems, particularly deep learning models, often operate as “black boxes” in which the relationship between inputs and outputs is not easily interpretable. Rudin (2019) highlights how this opacity makes it difficult to attribute responsibility when systems produce undesired outcomes. Traditional accountability mechanisms, designed for deterministic systems, struggle to address the probabilistic nature of AI decision-making.

A second critical challenge involves the dynamic and evolving nature of AI systems. Unlike traditional software, many AI systems continue to learn and adapt after deployment. Morley et al. (2019) highlight how this creates particular challenges for translating ethical principles into practice, as system behaviours may change over time, making it difficult to maintain consistent accountability frameworks.

The distributed nature of AI development and deployment further complicates accountability. Modern AI systems often involve multiple stakeholders: data providers, model developers, system integrators, and end-users. Cath et al. (2017) point out how this distributed responsibility creates challenges in attributing accountability when issues arise. Traditional legal and regulatory frameworks, designed for clear lines of responsibility, struggle to address these complex webs of interaction.

Nevertheless, building self-reinforcing regulatory compliance frameworks is essential for further developing and applying the technology. The following key barriers must be addressed and overcome (Singh et al., 2022):


- The rapid pace of AI advancement often outpaces regulatory development

- The global nature of AI development makes it difficult to enforce consistent standards

- The tension between innovation and regulation creates resistance to comprehensive frameworks

Despite these challenges, recent research suggests potential paths forward. Floridi et al. (2020) propose a “design for values” approach that embeds ethical considerations and compliance requirements into the development process itself. This proactive approach might help create more naturally self-reinforcing regulatory systems.

In this value-led spirit, start by using our resources map:

References Chapter 8:

Alemohammad, S., Casco-Rodriguez, J., Luzi, L., Humayun, A. I., Babaei, H., LeJeune, D., Siahkoohi, A., & Baraniuk, R. G. (2023). Self-Consuming Generative Models GO MAD. arXiv.org. https://arxiv.org/abs/2307.01850

Amershi, S., Chickering, D. M., Drucker, S. M., Lee, B., Simard, P., & Suh, J. (2019). ModelTracker: Redesigning performance analysis tools for machine learning. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI), 1-12. https://doi.org/10.1145/3290605.3300873

Amershi, S., Weld, D., Vorvoreanu, M., Fourney, A., Nushi, B., Collisson, P., Suh, J., Iqbal, S., Bennett, P. N., Inkpen, K., Teevan, J., Kikin-Gil, R., & Horvitz, E. (2019). Guidelines for Human-AI Interaction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19). Association for Computing Machinery, New York, NY, USA, Paper 3, 1–13. https://doi.org/10.1145/3290605.3300233

Arrieta, A. B., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., … & Herrera, F. (2020). Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion, 58, 82-115. https://doi.org/10.1016/j.inffus.2019.12.012

Australian Government. (2024). Policy for the responsible use of AI in government. digital.gov.au. Available: https://www.digital.gov.au/policy/ai/policy [2025, March 17]

Baldwin, R., Cave, M., & Lodge, M. (2012). Understanding regulation: Theory, strategy, and practice. Oxford University Press.

Bansal, G., Nushi, B., Kamar, E., Lasecki, W. S., Weld, D. S., & Horvitz, E. (2019). Beyond accuracy: The role of Mental Models in Human-AI Team performance. Proceedings of the AAAI Conference on Human Computation and Crowdsourcing, 7, 2–11. https://doi.org/10.1609/hcomp.v7i1.5285

Bansal, G., Nushi, B., Kamar, E., Weld, D. S., Lasecki, W. S., & Horvitz, E. (2019). Updates in Human-AI Teams: Understanding and Addressing the Performance/Compatibility Tradeoff. Proceedings of the AAAI Conference on Artificial Intelligence, 33(01), 2429–2437. https://doi.org/10.1609/aaai.v33i01.33012429

Bengio, Y., de Leon Ferreira de Carvalho, A. C. P., Fox, B., Nemer, M., Rivera, R. P., Zeng, Y., … & Khan, S. M. (2025). International AI Safety Report 2025. UK Department for Science, Innovation and Technology and AI Safety Institute. https://www.gov.uk/government/publications/international-ai-safety-report-2025

Bengio, Y., Hinton, G., Yao, A., Song, D., Abbeel, P., Darrell, T., Harari, Y. N., Zhang, Y., Xue, L., Shalev-Shwartz, S., Hadfield, G., Clune, J., Maharaj, T., Hutter, F., Baydin, A. G., McIlraith, S., Gao, Q., Acharya, A., Krueger, D., . . . Mindermann, S. (2024b). Managing extreme AI risks amid rapid progress. Science, 384(6698), 842–845. https://doi.org/10.1126/science.adn0117

Bengio, Y., Mindermann, S., Privitera, D., Besiroglu, T., Bommasani, R., Casper, S., … & Zhang, Y. (2024). International Scientific Report on the Safety of Advanced AI (INTERIM Report). arXiv preprint. https://arxiv.org/abs/2412.05282

Binns, R. (2018). Fairness in machine learning: Lessons from political philosophy. Proceedings of the 2018 Conference on Fairness, Accountability, and Transparency. Available: https://proceedings.mlr.press/v81/binns18a.html [2025, January 31]

Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., . . . Amodei, D. (2020). Language Models are Few-Shot Learners. arXiv.org. https://arxiv.org/abs/2005.14165

Brundage, M., Avin, S., Wang, J., Belfield, H., Krueger, G., Hadfield, G., Khlaaf, H., Yang, J., Toner, H., Fong, R., Maharaj, T., Koh, P. W., Hooker, S., Leung, J., Trask, A., Bluemke, E., Lebensold, J., O’Keefe, C., Koren, M., . . . Anderljung, M. (2020, April 15). Toward trustworthy AI Development: Mechanisms for supporting verifiable claims. arXiv.org. https://arxiv.org/abs/2004.07213

Bryson, J. J. (2020). The artificial intelligence of the ethics of artificial intelligence: An introductory overview for law and regulation. In M. D. Dubber, F. Pasquale, & S. Das (Eds.), The Oxford Handbook of Ethics of AI. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780190067397.013.1

Bundy, J. (2021). When companies say “sorry,” it doesn’t always help their reputation. ASU News. Available: https://news.asu.edu/20210706-discoveries-when-companies-say-sorry-it-doesnt-always-help-their-reputation [2025, January 31]

Calo, R. (2021). Remark at AI debate 2: Artificial intelligence policy: not just a matter of principles. Available: https://www.youtube.com/watch?v=XoYYpLIoxf0 [2025, January 31]

Carlini, N., Athalye, A., Papernot, N., Brendel, W., Rauber, J., Tsipras, D., Goodfellow, I., Madry, A., & Kurakin, A. (2019, February 18). On evaluating adversarial robustness. arXiv.org. https://arxiv.org/abs/1902.06705

Cath, C., Wachter, S., Mittelstadt, B., Taddeo, M., & Floridi, L. (2017). Artificial Intelligence and the ‘Good Society’: the US, EU, and UK approach. Science and Engineering Ethics. https://doi.org/10.1007/s11948-017-9901-7

Chakravorti, B. (2024). AI’s trust problem: Twelve persistent risks of AI that are driving skepticism. Harvard Business Review. Available: https://hbr.org/2024/05/ais-trust-problem [2025, March 10]

Danks, D., & London, A. J. (2017). Regulating autonomous systems: Beyond standards and tools. IEEE Intelligent Systems, 32(1), 88-91.

Dickson, B. (2018). What is algorithmic bias? Available: https://bdtechtalks.com/2018/03/26/racist-sexist-ai-deep-learning-algorithms [2025, January 31]

Eder, S. (2018). How can we eliminate bias in our algorithms? Available: https://www.forbes.com/sites/theyec/2018/06/27/how-can-we-eliminate-bias-in-our-algorithms [2025, January 31]

Ehsan, U., & Riedl, M. O. (2020). Human-Centered Explainable AI: towards a reflective sociotechnical approach. In Lecture notes in computer science. 449–466. https://doi.org/10.1007/978-3-030-60117-1_33

Eppler, M. J. (2021, July 3). Detecting Data Distortions: The Three Types of Biases every Manager and Data Scientist should know. Available: https://www.linkedin.com/pulse/detecting-data-distortions-three-types-biases-every-manager-eppler [2025, January 31]

European Commission. (2021). Proposal for a Regulation laying down harmonized rules on artificial intelligence (Artificial Intelligence Act). Available: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52021PC0206 [2025, January 31]

Fjeld, J., Achten, N., Hilligoss, H., Nagy, A., & Srikumar, M. (2020). Principled Artificial Intelligence: Mapping consensus in ethical and Rights-Based approaches to principles for AI. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3518482

Flemisch, F., Adams, C., Conway, S. R., & Schutte, P. (2003). The H-Metaphor as a guideline for vehicle automation and interaction. ResearchGate. https://www.researchgate.net/publication/24382429_The_H-Metaphor_as_a_Guideline_for_Vehicle_Automation_and_Interaction

Floridi, L., & Cowls, J. (2019). A unified framework of five principles for AI in society. Harvard Data Science Review, 1(1).

Floridi, L., Cowls, J., King, T. C., & Taddeo, M. (2020). How to design AI for social Good: Seven essential factors. Science and Engineering Ethics, 26(3), 1771–1796. https://doi.org/10.1007/s11948-020-00213-5

Ge, Y., & Zhu, Q. (2024, April 25). Attributing Responsibility in AI-Induced Incidents: A Computational reflective equilibrium framework for accountability. arXiv.org. https://arxiv.org/abs/2404.16957

Gebru, T., Morgenstern, J., Vecchione, B., Vaughan, J. W., Wallach, H., Daumé, H., III, & Crawford, K. (2021). Datasheets for datasets. Communications of the ACM, 64(12), 86–92. https://doi.org/10.1145/3458723

Glikson, E., & Woolley, A. W. (2020). Human trust in artificial intelligence: Review of empirical research. Academy of Management Annals, 14(2), 627-660. https://doi.org/10.5465/annals.2018.0057

Glinz, D. (2024). Vertrauen als Motor des KI-Wertschöpfungszyklus. In Springer eBooks. 49–75. https://doi.org/10.1007/978-3-658-43816-6_4

Hamm, P., Klesel, M., Coberger, P., & Wittmann, H. F. (2023). Explanation matters: An experimental study on explainable AI. Electronic Markets, 33(1). https://doi.org/10.1007/s12525-023-00640-9

Hassabis, D. (2022). Responsible AI development and implementation [Conference presentation].

Hemmer, P., Schemmer, M., Kühl, N., Vössing, M., & Satzger, G. (2024). Complementarity in Human-AI Collaboration: Concept, Sources, and Evidence. arXiv preprint arXiv:2404.00029.

Hoffman, J. L., Lawson-Jenkins, K., & Blum, J. (2006). Trust Beyond Security: An Expanded Trust Model. Communications of the ACM, 49(7), 95-101. https://doi.org/10.1145/1139922.1139924

Hoffmann, C. P., Lutz, C., & Meckel, M. (2014). Digital Natives or Digital Immigrants? The Impact of User Characteristics on Online Trust. Journal of Management Information Systems, 31(3), 138–171. https://doi.org/10.1080/07421222.2014.995538

Holweg, M. (2022). The ethical implications of AI adoption in organizations. Berkeley Technology Law Journal, 37(2), 1-28.

Holweg, M., Younger, R., & Wen, Y. (2022). The reputational risks of AI. California Management Review. https://cmr.berkeley.edu/2022/01/the-reputational-risks-of-ai/ [2025, January 31]

Horvitz, E. (1999). Principles of mixed-initiative user interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’99: The CHI is the Limit), 159–166. ACM Press. https://www.scirp.org/reference/referencespapers?referenceid=79734

Jacovi, A., Marasović, A., Miller, T., & Goldberg, Y. (2021). Formalizing trust in artificial intelligence: Prerequisites, causes and goals of human trust in AI. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 624–635. https://doi.org/10.1145/3442188.3445923

Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence, 1(9), 389-399.

Kiela, D., Firooz, H., Mohan, A., Goyal, V., Taylor, A., Ferraro, F., … & Yang, Y. (2021). The hateful memes challenge: Detecting hate speech in multimodal memes. Advances in Neural Information Processing Systems, 34, 2611-2624.

Krafft, P. M., Young, M., Katell, M., Huang, K., & Bugingo, G. (2020). Defining AI in Policy versus Practice. In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society (AIES ’20). Association for Computing Machinery, New York, NY, USA, 72–78. https://doi.org/10.1145/3375627.3375835

Lange, D., Lee, P. M., & Dai, Y. (2010). Organizational Reputation: a review. Journal of Management, 37(1), 153–184. https://doi.org/10.1177/0149206310390963

Lechterman, T. (2023). The Concept of Accountability in AI Ethics and Governance. In Bullock, J. B., Chen, Y., Himmelreich, J., Hudson, V. M., Korinek, A., Young, M. M., & Zhang, B., (eds.), The Oxford Handbook of AI Governance. Oxford University Press. Available: https://philarchive.org/rec/LECTCO-8 [2025, January 31]

Lee, J. D., & See, K. A. (2004). Trust in Automation: Designing for Appropriate Reliance. Human Factors, 46(1), 50–80. https://doi.org/10.1518/hfes.46.1.50_30392

Levin, M. (2024). AI: A bridge toward diverse intelligence and humanity’s future. Tufts University Working Paper. https://doi.org/10.31234/osf.io/ez263

Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., Küttler, H., Lewis, M., Yih, W., Rocktäschel, T., Riedel, S., & Kiela, D. (2020). Retrieval-Augmented Generation for Knowledge-Intensive NLP tasks. https://proceedings.neurips.cc/paper/2020/hash/6b493230205f780e1bc26945df7481e5-Abstract.html

Liu, P., Yuan, W., Fu, J., Jiang, Z., Hayashi, H., & Neubig, G. (2022). Pre-train, Prompt, and Predict: A systematic survey of prompting methods in natural language processing. ACM Computing Surveys, 55(9), 1–35. https://doi.org/10.1145/3560815

Lukyanenko, R., Maass, W., & Storey, V. C. (2022). Trust in artificial intelligence: From a Foundational Trust Framework to emerging research opportunities. Electronic Markets, 32(4), 1993–2020. https://doi.org/10.1007/s12525-022-00605-4

Makovi, K., Sargsyan, A., Li, W., Bonnefon, J., & Rahwan, T. (2023). Trust within human-machine collectives depends on the perceived consensus about cooperative norms. Nature Communications, 14(1). https://doi.org/10.1038/s41467-023-38592-5

McNamara, A., Smith, J., & Murphy-Hill, E. (2018). Does ACM’s code of ethics change ethical decision making in software development? In Proceedings of the 2018 26th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, 729–733. https://doi.org/10.1145/3236024.3264833

Mitchell, M., Wu, S., Zaldivar, A., Barnes, P., Vasserman, L., Hutchinson, B., & Gebru, T. (2019). Model cards for model reporting. Proceedings of the Conference on Fairness, Accountability, and Transparency (FAT), 220-229. https://doi.org/10.1145/3287560.3287596

Mittelstadt, B. D., Allo, P., Taddeo, M., Wachter, S., & Floridi, L. (2016). The ethics of algorithms: Mapping the debate. Big Data & Society, 3(2).

Mökander, J., & Floridi, L. (2022). Operationalising AI governance through ethics-based auditing: an industry case study. AI And Ethics, 3(2), 451–468. https://doi.org/10.1007/s43681-022-00171-7

Morley, J., Floridi, L., Kinsey, L., & Elhalal, A. (2019). From What to How: An Initial Review of Publicly Available AI Ethics Tools, Methods and Research to Translate Principles into Practices. Science and Engineering Ethics, 26(4), 2141–2168. https://doi.org/10.1007/s11948-019-00165-5