Chapter 1: Learn how pervasive consumer concerns about data privacy, unethical ad-driven business models, and the imbalance of power in digital interactions highlight the need for trust-building through transparency and regulation.

Chapter 8: Learn how AI’s rapid advancement and widespread adoption present both opportunities and challenges, requiring trust and ethical implementation for responsible deployment. Key concerns include privacy, accountability, transparency, bias, and regulatory adaptation, emphasizing the need for robust governance frameworks, explainable AI, and stakeholder trust to ensure AI’s positive societal impact.

In the digital age, personal data has evolved from mere information traces to a critical economic asset (Zuboff, 2019). Targeted advertising has emerged as the dominant business model, transforming personal information into a lucrative commodity (Van Dijck, 2014). The rise of AI and machine learning has further intensified data collection practices, as these technologies require extensive training datasets (LeCun et al., 2022; Brynjolfsson & McAfee, 2017).

Companies now face a complex balancing act: gathering sufficient data for AI development while maintaining user trust and ethical standards (Dignum, 2021). The emergence of privacy-preserving AI techniques, such as federated learning and differential privacy, offers potential solutions. Yet the fundamental challenge of fair value distribution remains unresolved (Pasquale, 2020). At the same time, AI is redefining the conventional marketing landscape (King, 2019).
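
To make one of these privacy-preserving techniques more concrete, the following minimal sketch applies the Laplace mechanism, a standard building block of differential privacy, to a simple counting query. The function names, the sample ages, and the chosen epsilon value are assumptions made purely for illustration and do not describe any particular product.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records matching `predicate`.

    A counting query has sensitivity 1 (adding or removing one person
    changes the result by at most 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: ages of the users of a service.
ages = [23, 35, 41, 29, 52, 38, 61, 27]
rng = random.Random(0)
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of users aged 40+: {noisy:.2f}")
```

A smaller epsilon adds more noise and therefore gives stronger protection to each individual, at the cost of less accurate statistics. This trade-off mirrors the balancing act described above.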

This dynamic is further complicated by recent regulatory developments, including the EU AI Act and enhanced data protection frameworks (Veale & Borgesius, 2021). These regulations attempt to address the growing power asymmetry between data collectors and individuals, while ensuring AI innovation can continue responsibly (Crawford, 2021).

Data monetization has become the cornerstone of digital business models, yet privacy protection mechanisms and regulatory frameworks struggle to match evolving user expectations (Acquisti et al., 2016). Research indicates that users are generally willing to share data when given appropriate safeguards and control mechanisms (Calo, 2013; Solove, 2021). However, the digital ecosystem often forces users to accept opaque data practices to access essential services (Zuboff, 2019).

This “privacy paradox” is exacerbated by asymmetric power relationships between platforms and users (Nissenbaum, 2020). Users face a false choice between digital participation and privacy protection, undermining genuine consent and trust-building efforts (Richards & Hartzog, 2019). The emergence of AI systems has further complicated this dynamic, requiring unprecedented amounts of personal data for training and optimization (Floridi, 2022).

On the other hand, digital transformation has significantly enhanced consumer agency through increased connectivity and technological literacy (Jenkins & Deuze, 2022). However, this empowerment has led to decreased brand loyalty and more volatile consumer behaviour (Kumar & Shah, 2019). The evolution of data-driven business models must now prioritize authentic trust-building over mere data collection (Zuboff, 2019).

Like an iceberg in digital waters, trust remains largely invisible yet fundamentally critical – its mismanagement or oversight can lead to catastrophic consequences for organizations (Botsman, 2021). This shift necessitates a fundamental reimagining of digital marketing strategies, moving beyond targeted advertising toward genuine value creation (Kotler et al., 2021). Organizations must develop transparent data practices and reciprocal relationships with users to establish sustainable digital trust (Richards & Hartzog, 2019).

Explosive growth of data

The digital economy drives explosive data growth, with its true impact lying in the insights derived by organizations from this expanding resource (Zuboff, 2019). This dynamic is amplified by Metcalfe’s Law, which states that a network’s value grows proportionally to the square of its connected users (Metcalfe, 2013).

Following Moore’s law – valid as of 2015 – processing power doubles every 18 months. Modern data processing has evolved beyond traditional Hadoop systems to embrace cloud-native architectures, serverless computing, and edge processing (Singh & Singh, 2023). Technologies like Apache Spark, GraphQL, and real-time stream processing enable sophisticated data analytics at unprecedented scales (Zaharia et al., 2016).
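
The two growth laws mentioned above can be expressed in a few lines. The sketch below is only an illustration: the proportionality constant `k` and the function names are hypothetical, and the 18-month doubling period is taken from the text as given.

```python
def metcalfe_value(users: int, k: float = 1.0) -> float:
    """Network value proportional to the square of connected users."""
    return k * users ** 2


def processing_growth(years: float, doubling_months: float = 18.0) -> float:
    """Growth factor after `years` if capacity doubles every `doubling_months`."""
    return 2.0 ** (years * 12.0 / doubling_months)


# Ten times the users yields one hundred times the network value.
print(metcalfe_value(1_000) / metcalfe_value(100))   # 100.0

# Fifteen years of 18-month doublings amounts to ten doublings.
print(processing_growth(15))                          # 1024.0
```

Both curves grow faster than linearly, which is why their combination with data growth is described as an accelerating force.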

However, this creates a selective visibility challenge: organizations must determine which data merits analysis. This “flashlight effect” restricts insights to the direction of analytical focus (Pentland, 2019). Increasing processing power and network effects lead to rising system complexity, necessitating decentralized control mechanisms (Helbing, 2015). While today’s digital economy exhibits monopolistic characteristics, organizational changes like Google’s Alphabet might signal a shift toward decentralized, self-regulating systems (Parker et al., 2020).

Digital transformation has been a gradual process with often modest initial impact (Vial, 2019). This is changing as processing power, data growth, and network effects converge to accelerate transformation (Parker et al., 2020).

The digital revolution is poised to transform industries by shifting economic focus toward the information sector (Schwab, 2019). According to the Three-sector theory, a significant workforce migration occurs from manufacturing and services to the quaternary, knowledge-based sector (Kenessey, 2021). This transition implies that repetitive, procedure-based tasks will increasingly be automated through artificial intelligence and advanced computing systems (Brynjolfsson & McAfee, 2017).

The shift demands rapid adaptation from both employees and organizations as traditional roles evolve or become obsolete (Frey & Osborne, 2017). More critically, governmental regulatory frameworks must evolve to accommodate this sectoral transformation. New infrastructure and regulatory mechanisms are essential to harness the digital revolution’s potential. Data privacy regulation emerges as a key requirement for this transition, with trust serving as a fundamental driver for societal self-organization (Zuboff, 2019). The success of this transformation depends heavily on establishing robust governance frameworks that balance innovation with social protection (Parker et al., 2020).

Targeted advertising dominates digital business models, leveraging extensive user data for profiling and personalized marketing. Users routinely have their data mined by “free” services, with the true value of personal data often neither considered nor adequately compensated (Acquisti et al., 2016). This model enables highly effective advertising through comprehensive user profiling and targeting (Cohen, 2019).

Consumers increasingly recognize the worth of their personal data and the associated privacy risks (see section “Privacy at Risk”). However, most remain unaware of specific data collection practices and usage purposes, a problem exacerbated by insufficient transparency in information practices (Burkell, 2020). Users frequently face inadequate privacy protections and must blindly trust that their data is processed fairly (please refer to the statistics at the end of the chapter).

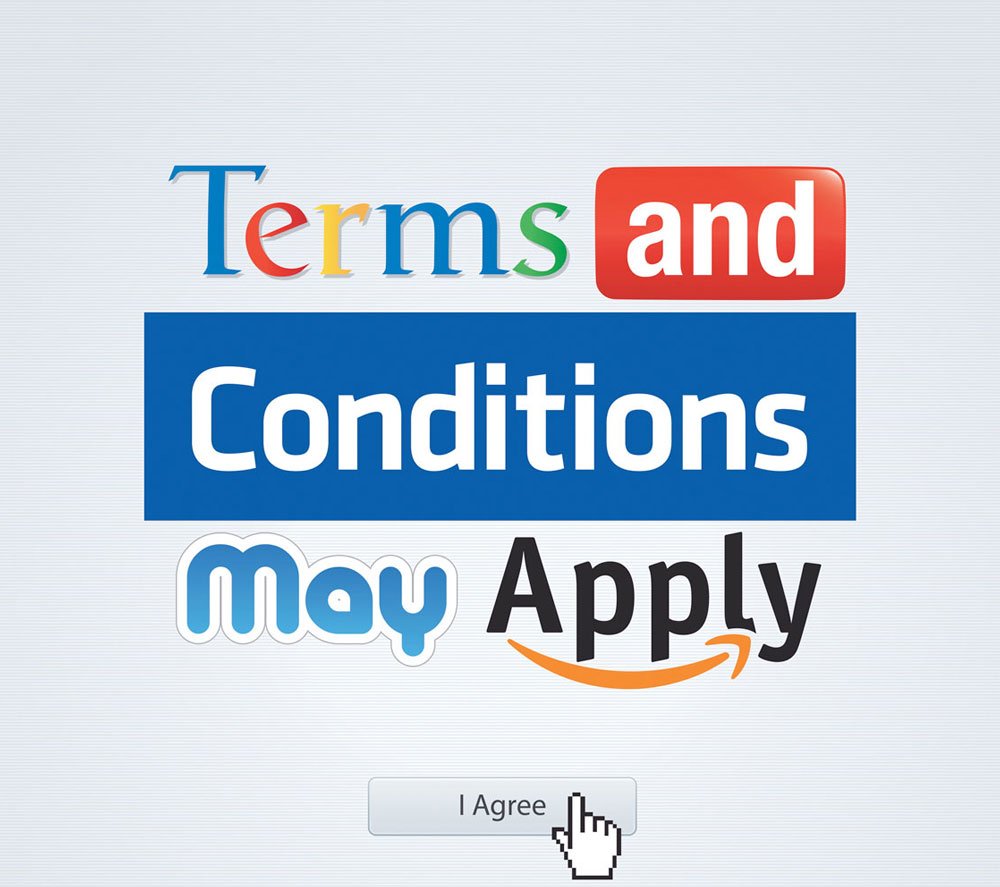

Service access typically requires accepting terms and conditions, with no alternative except service denial. Studies, including recent investigations (TV documentary tacma.net, 2013), show that individuals rarely read these terms before accepting them, undermining informed consent principles (Richards & Hartzog, 2019). Though crucial for building trusted relationships and enabling user-centric data management, this consent mechanism remains fundamentally compromised under current practices.

Whilst in earlier times control over personal data may have been undertaken by preventing the data from being disclosed, in an internet enabled society it is increasingly important to understand how disclosed data is being used and reused and what can be done to control this further use and reuse (Whitley 2009, 155)

This “balancing act” of competing business interests and privacy requirements will be increasingly important. While data collection becomes ubiquitous across industries, initiatives beyond targeted advertising carry significant reputational risks (Pentland, 2020). The contrast between Google’s “Don’t be evil” motto and Apple’s privacy-focused positioning illustrates divergent approaches to data governance (Searls, 2021).

Brand trustworthiness increasingly hinges on data practices, as exemplified by public reactions to Apple’s HealthKit and similar data-driven initiatives (Martin & Murphy, 2017). This evolving landscape challenges traditional assumptions about the relationship between data collection and brand perception (Nissenbaum, 2020).

Today’s ad-centric business models will continue to evolve and produce interesting innovations. Automation-driven transformation represents a fundamental shift in how businesses create and deliver value through data exploitation (Parker et al., 2020). Recent developments share two key characteristics: they replace traditional human-based methods and heavily depend on user data collection and analysis (Singh & Hess, 2020):

The Facebook Like button, introduced in February 2009 and generating over 3.2 billion daily clicks, exemplifies privacy concerns in digital engagement. Despite Zuckerberg praising it as their most iconic innovation, its tracking capability – active regardless of user interaction – has raised significant privacy issues (Cohen, 2021). Modern equivalents like Instagram’s “Save” and TikTok’s “Bookmark” features continue this controversial practice of tracking across websites (Singh & Hess, 2022), potentially violating European and Swiss data protection regulations under GDPR standards (Regulation (EU) 2016/679).

Shared endorsements are one of the most popular forms of targeted advertising. Social networking platforms such as Facebook and Google pull users’ likes, +1s, and reviews (e.g., from Google Maps and Google Play) and turn them into advertisements shown to their connections. Ads often display the user’s profile picture to increase the effect of a personal recommendation. This form of advertising is highly questionable because users don’t know when or how these endorsements are actually displayed. By understanding and agreeing to the terms and conditions, users learn only that this can and will happen sometimes and somehow. Use these links to personally opt out of shared endorsements on Google and Facebook.

Another improvement in online advertising technology is programmatic buying, which automates current online advertising processes. It goes beyond Real-Time Bidding, which sells advertising spots to the highest bidder in an auction system. With Real-Time Bidding, a buyer can set and adjust parameters such as bid price and network reach. A programmatic buy layers these parameters with behavioral or audience data, all within the same platform. This innovation will increase the importance of ad networks and, in turn, intensify data-tracking efforts.
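
The difference between a plain auction and a programmatic buy can be sketched in code. The example below is a deliberately simplified model, not a description of any real ad exchange: the `Buyer` class, the audience segments, and the multipliers are all hypothetical, and the auction is assumed to be a simple first-price auction.

```python
from dataclasses import dataclass, field


@dataclass
class Buyer:
    name: str
    base_bid: float                                     # bid price set by the buyer
    audience_boost: dict = field(default_factory=dict)  # segment -> multiplier

    def bid_for(self, segments: set) -> float:
        """Base bid, scaled for every audience segment the buyer targets."""
        bid = self.base_bid
        for segment in segments:
            bid *= self.audience_boost.get(segment, 1.0)
        return bid


def run_auction(buyers, segments):
    """Sell one ad impression to the highest bidder (first-price auction)."""
    bids = {b.name: b.bid_for(segments) for b in buyers}
    winner = max(bids, key=bids.get)
    return winner, bids[winner]


buyers = [
    Buyer("shoe_brand", 1.00, {"runner": 2.0}),
    Buyer("travel_site", 1.50, {"frequent_flyer": 1.5}),
]

# Without audience data, the higher base bid wins.
print(run_auction(buyers, set()))        # ('travel_site', 1.5)

# With a behavioural profile attached, the targeted buyer outbids it.
print(run_auction(buyers, {"runner"}))   # ('shoe_brand', 2.0)
```

The second call shows why this model intensifies tracking: the impression becomes more valuable to a buyer as soon as a user profile is attached to it.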

Advanced direct marketing technology enables serving ads and other messages individually. Communication is becoming ever more targeted. If an initial attempt to deliver a message is not successful, companies will have another try by re-targeting the same individual on the next occasion. Consumers will only receive adverts that are nuanced to reflect how they see the world. This set of innovations, when combined with advanced analytics, will have unintended side effects. They can lead to a filter bubble (users become separated from information that disagrees with their viewpoints, effectively isolating them in their own cultural or ideological bubbles) and even help questionable political candidates win elections.

Facial emotion recognition using neural networks has emerged as a powerful tool in marketing research, enabling businesses to gain deeper insights into consumer behaviour and preferences (Ekman & Friesen, 1971; Efremova et al., 2019). Marketers can assess emotional reactions in real-time by analyzing facial expressions in response to advertisements, products, or brand messaging, providing valuable feedback for product development and campaign optimization (Tian et al., 2018). For example, some retailers have experimented with using cameras to analyze customer emotions in-store. This data could be used to optimize store layouts, product placement, and customer service interactions.

Meta’s practice of delivering micro-targeted advertisements to users based on data extracted from their profiles led to criticism in the early days of social media. It enabled the platform to predict purchasing behaviour and get higher prices for advertising space. Although these actions remain within legal boundaries, stakeholder reactions suggest strong disapproval of how Meta leverages personal data to serve its own profit-driven objectives, effectively turning users’ information against them.

Recent digital marketing innovations reflect a dual focus on personalization and privacy protection. AI-driven tools like OpenAI’s GPT Store enable customized customer interactions, while Meta’s Advantage+ and Google’s Performance Max campaigns use machine learning for automated ad optimization (Goldfarb & Tucker, 2019). These developments have transformed traditional targeted advertising into more sophisticated, AI-powered engagement systems.

Social commerce innovations have introduced real-time engagement metrics through platforms like TikTok Shop and Instagram’s collaborative collections, fundamentally changing how brands build social proof. Simultaneously, privacy-focused innovations such as Google’s Topics API and Apple’s SKAdNetwork attempt to balance personalization with user privacy protection (Martin & Murphy, 2017).

The way companies handle their customers’ data significantly influences how customers perceive the organization’s character. This perception of character is crucial when evaluating the trustworthiness of an AI service provider.

Character is like a tree and reputation like its shadow. The shadow is what we think of it; the tree is the real thing (Abraham Lincoln)

Ad-centric business models, with their mechanisms for behavioral tracking and aggregation of personal data, result in a steady erosion of privacy and therefore provoke pervasive customer concerns. It is imperative to critically rethink digital business models and eventually to include a realistic value proposition for the use of personal data. Personal data will continue to be recognized as a source of macroeconomic growth. Therefore, it is critical to find solutions that can both protect privacy and unlock value.

Organizations often face strong temptations to leverage all the data at their disposal, regardless of whether users have provided consent (“data creep”), or to overlook the lack of explicit customer approval for specific uses of that data (“scope creep”) (Holweg et al., 2022).

Tristan Harris – a prominent technology ethicist, former Google design ethicist, and co-founder of the Center for Humane Technology – argues that technology has already reached a critical point, systematically exploiting human psychological vulnerabilities (2019). He contends that the tech industry’s preoccupation with a future technological “singularity” distracts from the more pressing issue of current technological manipulation.

Harris’s concerns remain pertinent in the era of artificial intelligence. As a formidable general-purpose technology, AI presents a myriad of ethical dilemmas. A central issue is the inherent duality of technology, exemplified by the balance between its positive and negative impacts. In a discussion produced by Bloomberg Technology with Google engineer Blake Lemoine and anchor Emily Chang, Alphabet CEO Sundar Pichai seeks to articulate his company’s approach to maximizing the benefits of AI while minimizing its potential drawbacks (2022).

Social media and recommendation systems strategically target human psychological weaknesses, including anxieties about social validation, comparison, and status. Artificial intelligence exacerbates this problem through sophisticated profiling and predictive modelling that anticipates and exploits predictable human cognitive biases. These technological mechanisms create compulsive engagement with digital platforms, trapping users in cycles of addiction across social media, streaming services, and personal devices. The emerging threat of deepfake technologies further amplifies the potential for psychological manipulation. Harris suggests that the real technological danger is not a future hypothetical scenario, but the current systematic undermining of human cognitive resilience. By designing technologies that prey on psychological vulnerabilities, tech companies effectively engineer addiction and diminish human agency. This approach transforms digital platforms from tools of empowerment to mechanisms of psychological control, fundamentally altering human behaviour and perception.

The described innovations in digital marketing indicate that privacy of online users is at risk. In fact, the word privacy in combination with online user activity is misleading. There is no such thing as true Internet anonymity and therefore, true privacy is a myth as well. A good understanding of current online analytics practices and their direction of development is required to understand the extent of risk an Internet user takes when participating in online transactions.

Analytics represents the systematic practice of data capture, management, and analysis to drive business strategy and performance (Chen et al., 2012). Modern organizations progress through distinct maturity levels, each offering increasing sophistication in decision-making capabilities (Davenport & Harris, 2017).

At the foundational level, descriptive analytics provides historical insights through traditional management reporting and ex-post analysis of structured data (Hess et al., 2016). The next level, diagnostic analytics, enables deeper understanding through pattern recognition and root-cause analysis (Brynjolfsson & McAfee, 2017).

Real-time analytics, widely adopted by digital businesses, enables immediate monitoring and response, particularly in social media sentiment analysis and customer behaviour tracking (Kumar & Shah, 2009). At the highest maturity level, predictive analytics employs AI-driven simulations, advanced modelling, and optimization algorithms to forecast future trends and outcomes (Chen et al., 2012).

The fact that analytics capabilities are becoming ever more powerful supports the hypothesis that true privacy on the Internet no longer exists. Despite privacy guarantees, modern data mining techniques enable individual re-identification through sophisticated pattern analysis and data point correlation (Narayanan & Shmatikov, 2008).

By leveraging data-driven insights, artificial intelligence is revolutionizing the marketing landscape, enabling marketers to discern relationships between customers and products accurately. User profiling has reached unprecedented levels of granularity, analyzing everything from keyboard dynamics to geolocation data (Acquisti et al., 2016). For instance, insurance companies now analyze typing patterns and hesitation moments to assess customer behaviour, while seemingly anonymized datasets can be de-anonymized through cross-referencing location data and behavioural patterns (Taylor, 2017). Behavioural psychologists and data scientists have developed advanced methods to create detailed individual profiles from digital footprints, raising significant privacy concerns (Chen & Cheung, 2018). The combination of AI-driven analytics and vast data collection makes true anonymity increasingly difficult to maintain in the digital sphere.
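
How cross-referencing can undo anonymization is easiest to see in a toy example. The sketch below links a hypothetical “anonymized” location dataset to a hypothetical public directory. All records, field names, and the choice of home and work area as quasi-identifiers are invented for illustration. Real attacks work on far larger and noisier data.

```python
# Hypothetical "anonymized" location dataset: names removed, but each
# record still carries the user's most frequent home and work areas.
anonymized = [
    {"user_id": "u1", "home_area": "A", "work_area": "X", "visits": "clinic"},
    {"user_id": "u2", "home_area": "A", "work_area": "Y", "visits": "casino"},
    {"user_id": "u3", "home_area": "B", "work_area": "X", "visits": "gym"},
]

# Hypothetical public directory with names and the same two attributes.
public = [
    {"name": "Alice", "home_area": "A", "work_area": "X"},
    {"name": "Bob", "home_area": "A", "work_area": "Y"},
    {"name": "Carol", "home_area": "B", "work_area": "X"},
    {"name": "Dana", "home_area": "B", "work_area": "X"},
]

QUASI_IDENTIFIERS = ("home_area", "work_area")


def reidentify(anonymized, public):
    """Link records whose quasi-identifiers match exactly one named person."""
    matches = {}
    for record in anonymized:
        key = tuple(record[q] for q in QUASI_IDENTIFIERS)
        candidates = [p["name"] for p in public
                      if tuple(p[q] for q in QUASI_IDENTIFIERS) == key]
        if len(candidates) == 1:  # unique match -> re-identified
            matches[candidates[0]] = record["visits"]
    return matches


print(reidentify(anonymized, public))
# {'Alice': 'clinic', 'Bob': 'casino'}
```

Two of the three “anonymous” users are re-identified because their combination of attributes is unique. The third stays hidden only because two people happen to share the same pattern.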

A mixed team from Cambridge University and Stanford University developed an engaging personality test which predicts a user’s personality based on Facebook likes. Michal Kosinski and his colleagues even claim that computers are more accurate than humans in judging others’ personalities (Wu et al. 2015). If you own an active Facebook account, feel free to take this personality test yourself. The test uses a snapshot of your digital footprint to visualize how others perceive you online. You may take additional psychometric tests for self-perception to assess the accuracy of this test.

However, applications like Cambridge’s Magicsauce inherently carry the risk of violating social norms and values. A notable case is the 2018 Cambridge Analytica scandal, which thrust Facebook into a major crisis.

This incident highlights a growing trend: as companies increasingly rely on AI to enhance the efficiency and effectiveness of their products and services, they also open themselves up to new risks and controversies tied to its use. When AI systems breach societal norms and values, organizations face significant threats, as even a single incident can inflict long-lasting damage on their reputation. Read more about reputation and its risks in Eccles et al.’s 2007 paper. In an era where reputation functions as currency (Kleber, 2018), it’s no surprise that organizations derive significant intangible value from their standing. Companies with positive reputations are primarily viewed as delivering greater value from the customers’ perspective.

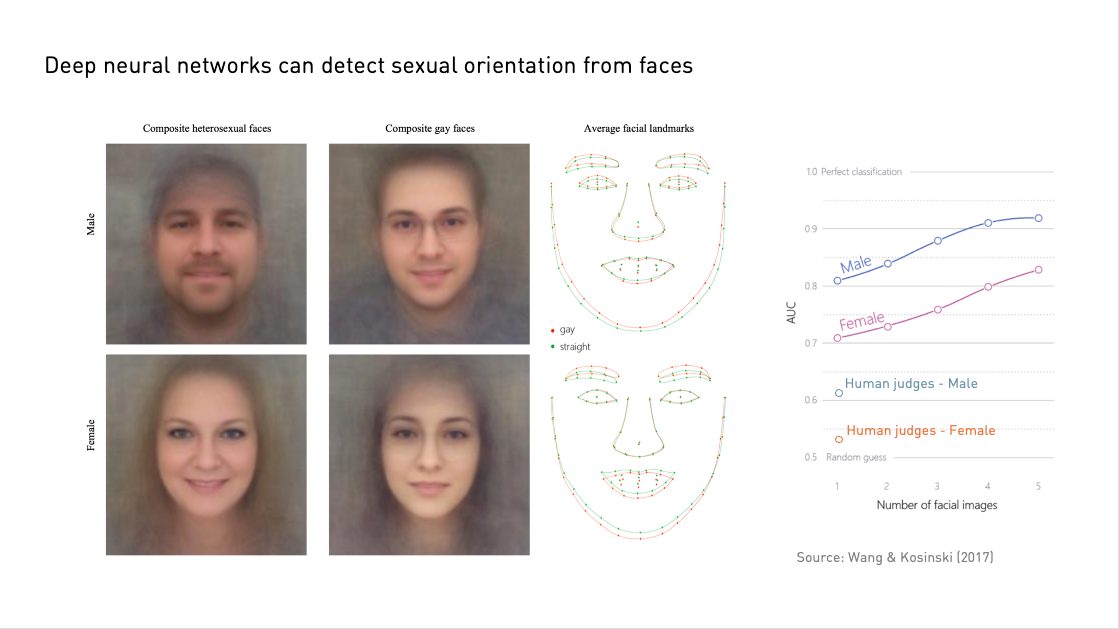

The image on the right shows how Kosinski’s model imagines (or dreams) a stereotypically introverted and extroverted female. Here, features are actually recognizable (glasses vs. made-up eyes, bleached vs. dark hair, etc.). His results on the model’s idea of typical appearance, indicating a person’s sexual orientation, are illustrated in the second picture (Wang & Kosinski, 2018).

These examples complement the topics of perception and creativity of AI systems with the equally exciting and relevant dimension of ethics. How to identify and mitigate such risks is discussed in the last chapter on iceberg.digital.

Let’s play a game: The author’s portrait challenge.

AI, especially generative models such as Generative Adversarial Networks (GANs), excels at image transformation by learning to recognize patterns in large datasets. These models generate realistic images by mimicking natural patterns in data. For example, when trained on human faces, GANs can generate convincing images of faces, capturing complex features such as expressions and lighting (Goodfellow et al., 2014). Image classification reached human-level performance in 2016 – refer to the figure by Bengio, 2025, in Chapter 8.

One of the most notable applications of this technology is deepfakes – AI-generated images and videos that convincingly alter reality. These deepfakes can replicate natural features, such as how teeth appear when smiling, making it difficult for humans to distinguish real from fake images (Chesney & Citron, 2019). As AI continues to evolve, these generated images become increasingly difficult to detect, posing a potential risk of misinformation (Korshunov & Marcel, 2018). While the advancements in AI-generated content are impressive, they raise significant ethical concerns. Deepfakes and other AI-generated content can be used maliciously, making it essential to develop safeguards for detection and responsible use (Nguyen et al., 2020).

Online privacy is at risk. As a natural reaction to the described developments, consumer concerns regarding the provision of personal data are increasing. However, the motivation of online businesses to protect users’ privacy and advocate for customers is often surprisingly low. It is usually limited to pursuing objectives in marketing, such as avoiding reputation risks. Businesses have to change their attitude to be successful in the future. Privacy represents a merit good characterized by incomplete market dynamics that necessitate regulatory intervention (Cohen, 2021). The classification of privacy as a merit good – where market forces alone cannot ensure optimal provision – underscores the essential role of robust regulatory frameworks in protecting consumer interests.

Customers face a wide range of risks regarding the use of their data. As we will learn in Chapter 3, drawing on prospect theory, users’ perception of these risks strongly influences their decision-making online. While the probability of occurrence is perceived as quite low, the potential impact of these privacy risks is grave. Quantifying perceived risk therefore depends on two major components: the effect of a certain transaction and the perceived uncertainty about the likelihood of a given transaction result (Plötner, 1995). Hence, trust is a function of risk and relevance. When facing a highly relevant interaction target, a user’s level of trust is expected to rise with increasing risk (Koller, 1997). For example, the goal of getting well leads a patient to develop a high level of trust in a surgeon.
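
One simple way to combine the two components is an expected-loss style score. The sketch below is an assumption made for illustration only: the text states that both impact and perceived likelihood matter, but not how they are combined. The multiplicative form, the function name, and the sample scores are therefore hypothetical.

```python
def perceived_risk(impact: float, perceived_probability: float) -> float:
    """Expected-loss style score: impact weighted by perceived likelihood.

    The multiplicative form is an illustrative assumption, not a formula
    taken from the cited literature.
    """
    if not 0.0 <= perceived_probability <= 1.0:
        raise ValueError("perceived_probability must be in [0, 1]")
    return impact * perceived_probability


# Hypothetical scores on a 0-10 impact scale.
identity_theft = perceived_risk(impact=9.0, perceived_probability=0.05)
spam_email = perceived_risk(impact=1.0, perceived_probability=0.60)
print(round(identity_theft, 2), round(spam_email, 2))
```

Under this assumption, a grave but seemingly unlikely event can score lower than a frequent nuisance, which is one way to read the observation that users underweight severe privacy risks.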

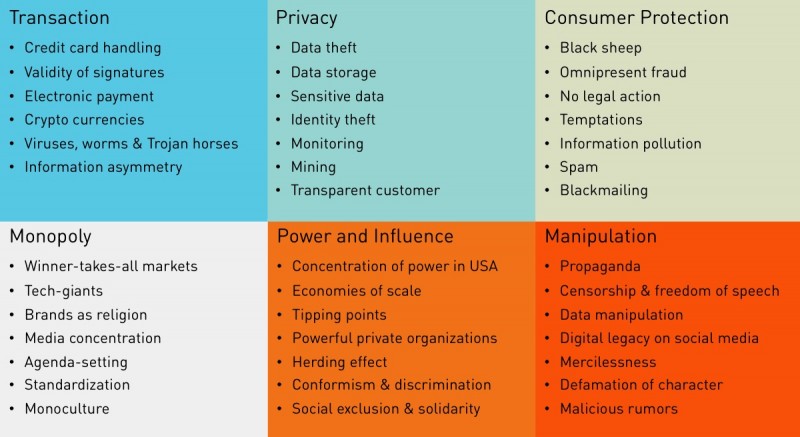

Beyond these personal risks, the discussion about using personal data also raises diverse superordinate, often ethical, risks. The groundbreaking success of electronic commerce and the emergence of disruptive technologies imply new types of risk.

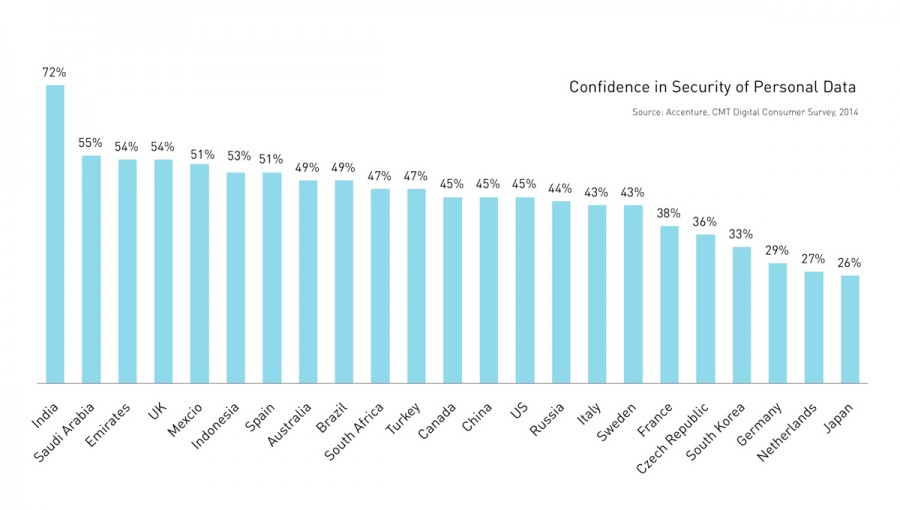

As we are going to learn in chapter 3 “Understanding Digital Trust”, the disposition to trust and therefore the acceptability of data use strongly depend on cognitive constructs that are individual to the trusting person. Interestingly, variances in these dispositions can also be observed on an aggregated level. Broad research indicates significant differences in the acceptability of data use and in confidence in the security of personal data across countries. A recent study by Accenture shows that India is likely the country with the highest confidence in the use of personal data, whereas Japan is very reluctant to build trust (Accenture, 2014). Other studies emphasize that these differences between cultures also depend heavily on the context of data use (WEF, 2014). China and India are countries that tend to trust digital service providers more than the USA or Europe – even without knowledge of data use, the service provider itself, and the underlying value proposition.

Data privacy is a concern for consumers.

A compelling survey conducted by Dassault Systèmes in collaboration with the independent research firm CITE (2020) revealed that data privacy is a concern for 96% of consumers.

Consumers, particularly younger ones, seek experiences where personalization serves as the key differentiator.

A concern for almost all consumers

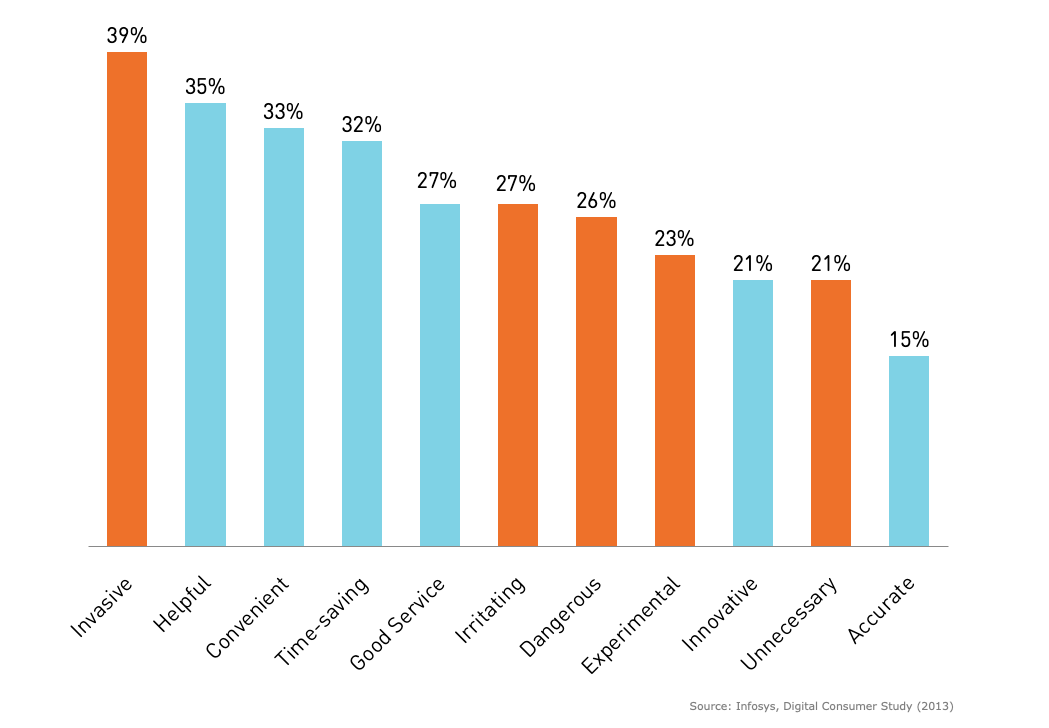

Consumers have mixed feelings about organizations analyzing data to provide more customized experiences. Infosys finds that ‘invasive’ is the term consumers most often use to describe this practice, though the next four most frequently selected terms are all positive (2013). No attribute emerges as a clear favourite, which indicates that there is no consensus on how consumers view Big Data in this context.

The study targeting Australian shoppers further revealed that 83% of participants would be likelier to make repeat purchases from retailers providing tailored promotional offers. Despite this openness, a significant portion of consumers find current advertisements irrelevant, with 77% of mobile ads, 74% of online ads, and 71% of email promotions failing to resonate. While 81% of consumers are comfortable sharing their email addresses and 67% their postcodes, only 32% would share their date of birth, and a mere 9% would disclose their income.

Globally, 39% of consumers view data mining as invasive, though many also find it helpful (35%), convenient (32%), and time-saving (33%). In the United States, concerns about invasiveness are lower (30%) than in other regions, whereas German consumers are among the least willing to share personal data.

Within the scope of a research project on big data and privacy issues, the White House solicited public input via a short web survey (White House, 2014). Although these results cannot be considered a statistically representative survey, they still reflect general attitudes toward data privacy. Respondents expressed surprisingly strong concern about big data practices. Most significant are strong feelings around ensuring that data practices are transparent and controlled through legal standards and oversight.

The pie charts indicate the percentage of respondents who are very concerned about each aspect of data practice: legal standards and oversight, transparency about data use, data storage and security, and collection of location data.

With all the outrageous disclosures about practices of the NSA and other governmental institutions, it is not surprising that the majority of respondents to the White House survey trust intelligence and law enforcement agencies “not at all”. On the positive side, majorities were generally trusting of how professional practices (such as law and medical offices) and academic institutions use and handle data.

The progress bars indicate the percentage of respondents who do not trust each entity at all:

These findings illustrate that businesses are called upon to act. Privacy concerns are likely to increase with the economy’s ever-growing appetite for data. Building a trusted relationship with consumers will be key. To reach this goal, companies must provide a clear value proposition (please refer to details about the trust clue “reciprocity” in chapter 3). The digital economy has a good chance of eventually moving closer to its customer base. The Accenture study also shows that about two-thirds of consumers globally are willing to share additional personal data with digital service providers in exchange for additional services or discounts (Accenture, 2014). However, sharing personal data with third parties remains a critical pitfall for engendering trust.

Willingness to share additional personal data in exchange for additional services or discounts:

Personalization expectations vary across generations. Generation X, millennials, and Generation Z are more willing to share their data for enhanced personalization and are more likely to recognize the benefits it offers, such as improved personal safety, time savings, and financial advantages, compared to baby boomers and the silent generation.

According to the Dassault Systèmes study, consumers are, on average, willing to pay 25.3% more for personalized experiences. However, in exchange for sharing their data, they expect an average savings of 25.6%. They show a higher willingness to pay for personalization in healthcare, whereas interest in personalized retail is comparatively lower. Note that consumers do not share a single definition of personalization.

Most consumers are only willing to share data they have explicitly consented to for personalization. However, anonymizing data increases the likelihood of sharing for six out of ten respondents. Notably, 88% would stop using a helpful personalized service if they feel uncertain about how their data is being managed.

of consumers said they will not purchase from organizations they don’t trust with their personal data (Cisco, 2019)

of American users chose not to use a product or service due to worries about how much personal data would be collected about them (Pew Research Center, 2020).

of users have terminated relationships with companies over data, up from 34% only two years ago (Cisco, 2022)

left social media companies and 28% left Internet Service Providers (Cisco, 2021)

Navigating the Paradox and Dilemma

In the presented survey research across multiple studies and demographic groups, empirical data consistently points to the emergence of an apparent “Privacy Paradox” and “Data Collection Dilemma”, revealing significant discrepancies between individuals’ privacy attitudes and their actual online data-sharing behaviours.

The Privacy Paradox describes the inconsistency between individuals’ expressed privacy concerns and their actual online data-sharing behaviours.

The Data Collection Dilemma highlights the systemic challenge of organizations continuously collecting and monetizing personal data without meaningful user understanding or comprehensive consent.

The Privacy Paradox arises when people express significant anxiety about data privacy yet consistently engage in practices that compromise their personal information (Acquisti & Grossklags, 2005). It reveals a complex psychological mechanism in which users’ immediate desires and convenience often override their long-term privacy considerations, leading to seemingly contradictory actions.

Empirical research shows that although individuals report high levels of privacy concern, they frequently share personal data on digital platforms with little hesitation (Norberg et al., 2007). Contributing factors include psychological distance from potential privacy risks, the perceived benefits of digital services, and the immediate gratification of online interactions, which together outweigh abstract future privacy concerns. Acquisti et al. (2016) argue that cognitive biases, such as present bias and limited information-processing capacity, significantly contribute to this paradoxical behaviour. Users often lack a comprehensive understanding of data collection mechanisms and the potential long-term consequences of their digital interactions, further widening the gap between privacy attitudes and actions.

The Privacy Paradox highlights the need for more nuanced approaches to digital privacy, including improved user education, transparent data practices, and design strategies that make privacy consequences more tangible and immediate for users (Taddicken, 2014). Understanding this psychological phenomenon is crucial for developing more effective privacy protection mechanisms in an increasingly digital world.

The “Data Collection Dilemma” emerges as a critical extension of the Privacy Paradox, illustrating the tensions between data collection practices and individual privacy concerns (Lyon, 2014). Organizations and digital platforms continuously collect extensive personal data, leveraging sophisticated algorithms to track user behaviours, preferences, and interactions across digital ecosystems.

Scholars like Zuboff (2019) characterize this phenomenon as “surveillance capitalism,” in which personal data is treated as a valuable commodity, traded and monetized without explicit user comprehension or meaningful consent. Despite growing awareness of invasive data collection mechanisms, users frequently surrender personal information in exchange for convenient digital services, reflecting the fundamental contradictions inherent in contemporary digital interactions. The dilemma is further complicated by opaque data processing techniques, where complex algorithmic systems transform collected data into predictive insights that extend far beyond users’ initial interactions.

Regulatory frameworks like GDPR and CCPA aim to address these challenges by mandating transparency and user consent, yet technological evolution continues to outpace legislative efforts to protect individual privacy (Bygrave, 2017). Understanding the Data Collection Dilemma requires recognizing the intricate interplay among technological capabilities, economic incentives, and personal psychological responses to digital surveillance.

References Chapter 1:

Accenture. (2025). New Age of AI to bring unprecedented autonomy to business. Available: https://www.accenture.com/content/dam/accenture/final/accenture-com/document-3/Accenture-Tech-Vision-2025.pdf [2025, January 31]

Acquisti, A., & Grossklags, J. (2005). Privacy and rationality in individual decision making. IEEE Security & Privacy, 3(1), 26–33. https://doi.org/10.1109/MSP.2005.22

Acquisti, A., Taylor, C., & Wagman, L. (2016). The economics of privacy. Journal of Economic Literature, 54(2), 442–492. https://doi.org/10.1257/jel.54.2.442

Bloomberg Technology. (2022). Google engineer on his sentient AI claim [Video]. YouTube. https://www.youtube.com/watch?v=kgCUn4fQTsc

Boston Consulting Group. (2025). BCG AI RADAR: From potential to profit: Closing the AI impact gap. Available: https://web-assets.bcg.com/0b/f6/c2880f9f4472955538567a5bcb6a/ai-radar-2025-slideshow-jan-2025-r.pdf [2025, January 31]

Botsman, R. (2021). Trust in the digital age: How to build credibility in a rapidly changing world. Public Affairs Quarterly, 35(2), 143–159.

Brynjolfsson, E., & McAfee, A. (2017). The business of artificial intelligence. Harvard Business Review, 95(4), 3–11.

Brynjolfsson, E., & McAfee, A. (2022). The future of AI and power. Foreign Affairs, 101(4), 16–26.

Burkell, J. (2020). The limits of privacy literacy. Policy & Internet, 12(2), 185–207. https://doi.org/10.1002/poi3.237

Bygrave, L. A. (2017). Data privacy law: An international perspective. Oxford University Press.

Calo, R. (2013). Digital market manipulation. George Washington Law Review, 82, 995–1051.

Chen, H., Chiang, R. H., & Storey, V. C. (2012). Business intelligence and analytics: From big data to big impact. MIS Quarterly, 36(4), 1165–1188.

Chen, Y., & Cheung, C. M. (2018). Privacy concerns about digital footprints. Journal of the Association for Information Science and Technology, 69(1), 22–33.

Chesney, R., & Citron, D. K. (2019). Deep fakes: A looming challenge for privacy, democracy, and national security. California Law Review, 107(5), 1753–1819. https://doi.org/10.2139/ssrn.3213954

Cisco. (2021). Building Consumer Confidence Through Transparency and Control. Available: https://www.cisco.com/c/dam/en_us/about/doing_business/trust-center/docs/cisco-cybersecurity-series-2021-cps.pdf [2025, January 31]

Cisco. (2022). Data Transparency’s Essential Role in Building Customer Trust. Available: https://www.cisco.com/c/dam/en_us/about/doing_business/trust-center/docs/cisco-consumer-privacy-survey-2022.pdf [2025, January 31]

Cisco. (2024). Cisco 2024 Consumer Privacy Survey. Available: https://www.cisco.com/c/en/us/about/trust-center/consumer-privacy-survey.html [2025, January 31]

Cohen, J. E. (2019). Between truth and power: The legal constructions of informational capitalism. Oxford University Press.

Crawford, K. (2021). Atlas of AI: Power, politics, and the planetary costs of artificial intelligence. Yale University Press.

Davenport, T. H., & Harris, J. G. (2017). Competing on analytics: The new science of winning (Updated ed.). Harvard Business Review Press.

Dignum, V. (2021). The role of AI in society and the need for trustworthy AI. Ethics and Information Technology, 23(4), 695–705.

Eccles, R., Newquist, S., & Schatz, R. (2007). Reputation and its risks. Harvard Business Review, 85, 104–114, 156. https://hbr.org/2007/01/reputation-and-its-risks

Efremova, N., Patkin, M., & Sokolov, D. (2019). Face and emotion recognition with neural networks on mobile devices: Practical implementation on different platforms. 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG), 1–8. https://doi.org/10.1109/FG.2019.8756562

Ekman, P., & Friesen, W. V. (1971). Constants across cultures in the face and emotion. Journal of Personality and Social Psychology, 17(2), 124–129.

Floridi, L. (2022). The ethics of artificial intelligence: Principles, challenges, and opportunities. Oxford University Press.

Frey, C. B., & Osborne, M. A. (2017). The future of employment: How susceptible are jobs to computerisation? Technological Forecasting and Social Change, 114, 254–280. https://doi.org/10.1016/j.techfore.2016.08.019

Goldfarb, A., & Tucker, C. (2019). Digital economics. Journal of Economic Literature, 57(1), 3–43. https://doi.org/10.1257/jel.20171452

Goodfellow, I. J., et al. (2014). Generative adversarial nets. Advances in Neural Information Processing Systems, 27, 2672–2680. https://arxiv.org/abs/1406.2661

Harris, T. (2019, April 23). A new agenda for tech. Center for Humane Technology News. https://www.humanetech.com/news/newagenda

Helbing, D. (2015). Thinking ahead – Essays on big data, digital revolution, and participatory market society. Springer.

Hennessy, J. L., & Patterson, D. A. (2022). Computer architecture: A quantitative approach. Morgan Kaufmann.

Hess, T., Matt, C., Benlian, A., & Wiesböck, F. (2016). Options for formulating a digital transformation strategy. MIS Quarterly Executive, 15(2), 123–139.

Jenkins, H., & Deuze, M. (2022). Digital media and participatory culture. MIT Press.

Kenessey, Z. (2021). The quaternary sector: Evolution of a knowledge-based economy. Management Science Review, 15(2), 45–62.

King, K. (2019). Using artificial intelligence in marketing: How to harness AI and maintain the competitive edge. Kogan Page.

Kleber, A. (2018, January). As AI meets the reputation economy, we’re all being silently judged. Harvard Business Review. https://hbr.org/2018/01/as-ai-meets-the-reputation-economy-were-all-being-silently-judged

Kotler, P., Kartajaya, H., & Setiawan, I. (2021). Marketing 5.0: Technology for humanity. Wiley.

Kumar, V., & Shah, D. (2009). Expanding the role of marketing: From customer equity to market capitalization. Journal of Marketing, 73(6), 119–136. https://doi.org/10.1509/jmkg.73.6.119

Kumar, V., & Shah, D. (2019). Customer loyalty in the digital age. Journal of Marketing, 83(5), 76–96. http://dx.doi.org/10.1016/j.jretai.2004.10.007

LeCun, Y. (2022). A path towards autonomous machine intelligence. Nature Machine Intelligence, 5, 461–470.

Lyon, D. (2014). Surveillance, Snowden, and big data: Capacities, consequences, critique. Big Data & Society, 1(2), 1–13. https://doi.org/10.1177/2053951714541861

Martin, K. D., & Murphy, P. E. (2017). The role of data privacy in marketing. Journal of the Academy of Marketing Science, 45(2), 135–155. https://doi.org/10.1007/s11747-016-0495-4

Metcalfe, B. (2013). Metcalfe’s law after 40 years of ethernet. Computer, 46(12), 26–31. https://doi.org/10.1109/MC.2013.449

Narayanan, A., & Shmatikov, V. (2008). Robust de-anonymization of large sparse datasets. IEEE Symposium on Security and Privacy, 111–125. https://doi.org/10.1109/SP.2008.33

Nissenbaum, H. (2020). Privacy in context: Technology, policy, and the integrity of social life. Stanford University Press.

Norberg, P. A., Horne, D. R., & Horne, D. A. (2007). The privacy paradox: Personal information disclosure intentions versus behaviors. Journal of Consumer Affairs, 41(1), 100–126. https://doi.org/10.1111/j.1745-6606.2006.00070.x

Parker, G., et al. (2020). Platform revolution: How networked markets are transforming the economy. Norton & Company.

Pasquale, F. (2020). New laws of robotics: Defending human expertise in the age of AI. Harvard University Press.

Pentland, A. (2019). Data for a new enlightenment. MIT Press.

Pentland, A. (2020). Building the new economy: Data as capital. MIT Press.

Pew Research Center. (2020). Half of Americans have decided not to use a product or service because of privacy concerns. Available: https://www.pewresearch.org/short-reads/2020/04/14/half-of-americans-have-decided-not-to-use-a-product-or-service-because-of-privacy-concerns/ [2025, January 31]

Richards, N. M., & Hartzog, W. (2019). Privacy’s trust gap. Yale Law Journal, 128(4), 1180–1247.

Schwab, K. (2019). The fourth industrial revolution. Currency.

Searls, D. (2021). The intention economy: When customers take charge. Harvard Business Review Press.

Singh, D., & Singh, B. (2023). Evolution of cloud computing: From Hadoop to serverless architecture. Journal of Cloud Computing, 12(1), 1–15. http://dx.doi.org/10.1007/978-3-031-26633-1_11

Solove, D. J. (2021). The myth of the privacy paradox. George Washington Law Review, 89(1), 1–51.

Taddicken, M. (2014). The “privacy paradox” in the social web: The impact of privacy concerns, individual characteristics, and the perceived social relevance on different forms of self-disclosure. Journal of Computer-Mediated Communication, 19(2), 248–273. https://doi.org/10.1111/jcc4.12052

Taylor, L. (2017). What is data justice? The case for connecting digital rights and freedoms globally. Big Data & Society, 4(2), 1–14. https://doi.org/10.1177/2053951717736335

Tian, Y., Li, X., & Zhu, W. (2018). Facial expression recognition based on improved convolutional neural network. IEEE Access, 6, 1422–1431.

Veale, M., & Borgesius, F. Z. (2021). Demystifying the Draft EU Artificial Intelligence Act. Computer Law Review International, 22(4), 97–112. https://doi.org/10.9785/cri-2021-220402

Vial, G. (2019). Understanding digital transformation: A review and a research agenda. Journal of Strategic Information Systems, 28(2), 118–144. https://doi.org/10.1016/j.jsis.2019.01.003

Wang, Y., & Kosinski, M. (2018). Deep neural networks are more accurate than humans at detecting sexual orientation from facial images. Journal of Personality and Social Psychology, 114(2), 246–257. https://doi.org/10.1037/pspa0000098

Whitley, E. A. (2009). Informational privacy, consent and the “control” of personal data. Information Security Technical Report, 14(3), 155. https://doi.org/10.1016/j.istr.2009.10.001

Wong, J. (2023). Artificial Intelligence is Revolutionizing Marketing. Here’s What the Transformation Means for the Industry. Entrepreneur. https://www.entrepreneur.com/science-technology/why-artificial-intelligence-is-revolutionizing-marketing/446087

Wu, Y., Kosinski, M., & Stillwell, D. (2015). Computer-based personality judgments are more accurate than those made by humans. Proceedings of the National Academy of Sciences, 112(4), 1036–1040. https://doi.org/10.1073/pnas.1418680112

Zaharia, M., et al. (2016). Apache Spark: A unified engine for big data processing. Communications of the ACM, 59(11), 56–65. https://doi.org/10.1145/2934664

Zuboff, S. (2019). The age of surveillance capitalism: The fight for a human future at the new frontier of power. Profile Books.