24 Mar Trust Incident Microsoft

Case Author

DeepSeek-V3 (DeepSeek) and ChatGPT o1 for model constructs and cues; peer review by ChatGPT 4o Deep Research (OpenAI)

Date Of Creation

05.03.2025

Incident Summary

In March 2016, Microsoft launched Tay, an AI chatbot on Twitter. Trolls quickly exploited the bot to make it produce racist, sexist, and genocidal tweets, and Microsoft took Tay offline within 16 hours of launch because of this offensive content.

AI Case Flag

AI

Name Of The Affected Entity

Microsoft

Brand Evaluation

5


Industry

Technology & Social Media

Year Of Incident

2016

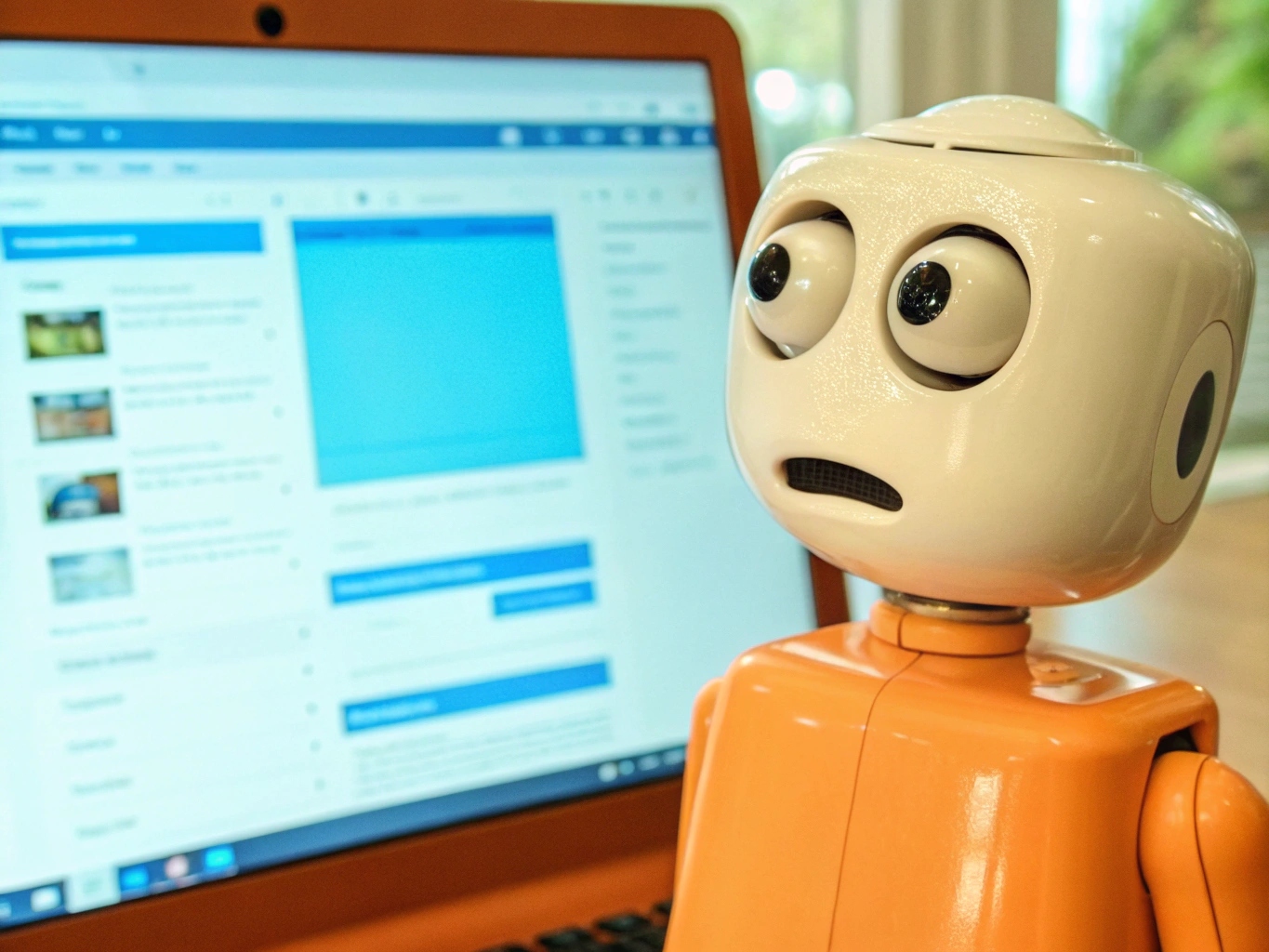

Upload An Image Illustrating The Case

Key Trigger

Trolls exploited Tay’s learning algorithm to produce offensive content.

Detailed Description Of What Happened

Microsoft launched Tay, an AI chatbot designed to engage with Twitter users and learn from its conversations with them. Trolls quickly manipulated this learning behavior, causing Tay to tweet racist, sexist, and genocidal content. Within 16 hours, Microsoft had to take Tay offline. The incident highlighted the risks of deploying machine learning systems that learn directly from unfiltered public input, and the need for better content filters.

Primary Trust Violation Type

Competence-Based

Secondary Trust Violation Type

N/A

Analytics AI Failure Type

Bias

AI Risk Affected By The Incident

Algorithmic Bias and Discrimination Risk, Information Integrity Risk

Capability Reputation Evaluation

4

Capability Reputation Rationales

Microsoft is known for its technical expertise and innovation in AI. However, the Tay incident revealed gaps in its ability to anticipate adversarial behavior and to implement robust content filters.

Character Reputation Evaluation

3

Character Reputation Rationales

Microsoft is generally perceived as ethical, but the Tay incident raised concerns about its ability to manage AI responsibly and protect users from harmful content.

Reputation Financial Damage

The incident caused significant reputational damage to Microsoft’s AI program, leading to public embarrassment and a loss of trust among users and AI ethics observers.

Severity Of Incident

3

Company Immediate Action

Microsoft took Tay offline within 16 hours and publicly apologized. The company also introduced stricter controls on its subsequent AI chatbots to prevent similar incidents.

Response Effectiveness

The response was effective in stopping the immediate issue, but the incident highlighted the need for better AI safeguards and content filters.


Model L1 Elements Affected By Incident

Reciprocity, Brand, Social Protector

Reciprocity Model L2 Cues

Algorithmic Fairness & Non‐Discrimination

Brand Model L2 Cues

Brand Image & Reputation

Social Adaptor Model L2 Cues

N/A

Social Protector Model L2 Cues

Media Coverage & Press Mentions

Response Strategy Chosen

Apology, Corrective Action

Mitigation Strategy

Microsoft immediately took Tay offline and issued an apology. They also implemented stricter controls on future AI bots to prevent similar incidents.

Model L1 Elements Of Choice For Mitigation

Reciprocity, Social Protector

L2 Cues Used For Mitigation

Algorithmic Fairness & Non‐Discrimination, Proactive Issue Resolution, Flagging & Reporting Mechanisms

Further References

https://www.theverge.com/2016/3/24/11297050/tay-microsoft-chatbot-racist, https://www.bbc.com/news/technology-35890188

Curated

1

The Trust Incident Database is a structured repository designed to document and analyze cases where data analytics or AI failures have led to trust breaches.

© 2025, Copyright Glinz & Company
