
As digital transformation proceeds and AI systems become more prevalent in society, understanding and fostering individuals’ willingness to share their data becomes increasingly critical for both technological progress and the protection of individual privacy. Balancing innovation against privacy protection is one of the central challenges in developing trustworthy AI systems.

The Evolving Landscape of Data Sharing Decisions

In the age of digital transformation and artificial intelligence, understanding individuals’ willingness to share data has become critically important for both businesses and society. Willingness to share data (WTS) can be defined as an individual’s propensity to disclose personal information to organizations in exchange for perceived benefits, while weighing potential risks and contextual factors (Ackermann et al., 2022). This complex decision-making process is deeply intertwined with privacy, trust, and reciprocity in the digital economy.

Willingness to share data (WTS) is a context-dependent behavioural disposition representing an individual’s propensity to disclose personal information to organizations or systems. This disposition is particularly relevant in the context of AI systems, where data-sharing decisions directly impact both the development of AI capabilities and individuals’ privacy protection (Acquisti et al., 2015).

Online consumer privacy concern and willingness to provide personal data on the internet... captures both the negative side of consumers' perceptions and they cannot explain why consumers are sometimes willing to disclose personal information, although they perceive certain risks of doing so (Miltgen, 2009: 574).

Recent research has established that WTS is not merely a binary choice but a multifaceted construct influenced by various contextual determinants (Glinz & Smotra, 2020). The academic literature has identified several key factors that shape individuals’ WTS decisions: the type of data requested, the purpose of data collection, the industry sector of the collecting organization, the compensation offered, and the degree of anonymity (Ackermann et al., 2022; Acquisti et al., 2015).

Trust emerges as a fundamental enabler of data-sharing behaviours. As Mayer et al. (1995) established in their seminal work, trust represents “the willingness of a party to be vulnerable to the actions of another party.” This vulnerability is particularly relevant in personal data sharing, where individuals face risks such as unauthorized data usage or inference – the process of deducing unrevealed information from authorized data (Motahari et al., 2009).

The relevance of WTS has grown significantly with the advent of AI systems that rely heavily on personal data for training and operation. These systems promise enhanced services and personalization but simultaneously raise concerns about privacy and data protection (Kosinski et al., 2013). We discussed the “privacy paradox” extensively in Chapter 1. This paradox illustrates the discrepancy between stated privacy preferences and actual sharing behaviour (Norberg et al., 2007).

Understanding WTS has become crucial for several reasons. First, the increasing complexity of digital systems calls for more decentralized control mechanisms, making user control over personal data essential (Helbing, 2015). Second, stricter privacy regulations such as the EU’s General Data Protection Regulation (GDPR) have tightened the conditions for collecting and processing personal data, with user consent as one of the main legal bases. Third, the success of AI-driven services depends heavily on access to high-quality personal data, which makes understanding and fostering WTS crucial for technological advancement.

WTS is characterized by…

Ackermann et al.’s (2022) research demonstrated that people’s willingness to share personal data is heavily influenced by contextual characteristics. Across all scenarios studied, only 19% of participants were willing to share their data, though this percentage varied significantly depending on the specific context. A notable finding was that participants were almost ten times more likely to share payment behaviour data than social communication data.

The study revealed that situational characteristics affect WTS decisions not just individually but through complex interactions. The most significant interaction occurred between a company’s industry sector and the type of data requested. The researchers found that people were more willing to share their information when there was a logical match between a company’s core business and the type of data requested. For example, individuals were more inclined to share health and fitness data with health insurers rather than with retailers, banks, or telecom providers. Similarly, people were likelier to share purchase behaviour data with retailers, financial data with banks, and online media consumption data with telecom providers.

Another crucial pattern emerged regarding data sensitivity. The research showed that when people perceived data as highly sensitive, other factors, such as compensation or promises of anonymity, had minimal impact on their willingness to share. However, for data types perceived as less sensitive, factors such as compensation offers and the level of personal identification had considerable influence on sharing decisions.

Our theory argues that context sensitivity as a moderator and individuals’ salient attributes in terms of personality types and privacy concern are critical factors impacting trust and the willingness to disclose personal information (Bansal et al., 2016: 1).

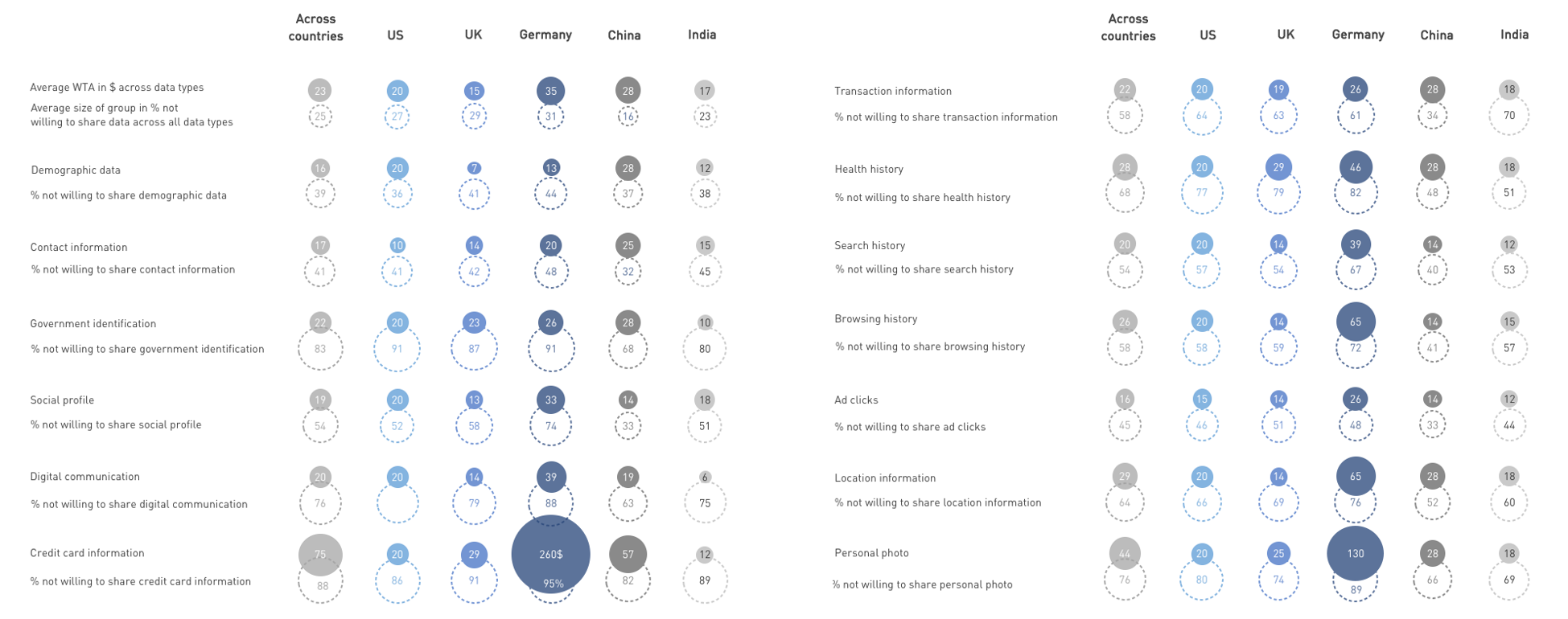

Research indicates that WTS varies significantly across cultural contexts and data types (Morey et al., 2015; Bansal et al., 2016; Ackermann et al., 2022). For instance, financial and health-related data are generally considered more sensitive than demographic information, resulting in a lower propensity to share. Moreover, the relationship between compensation and WTS is not straightforward: monetary incentives often fail to overcome privacy concerns (Winegar & Sunstein, 2019).

The emergence of new technologies and data governance models, such as personal online data stores (pods) and blockchain-based solutions, adds another layer of complexity to WTS research. These innovations promise users greater control over their data but require new frameworks for understanding sharing behaviours (Berners-Lee, 2018).

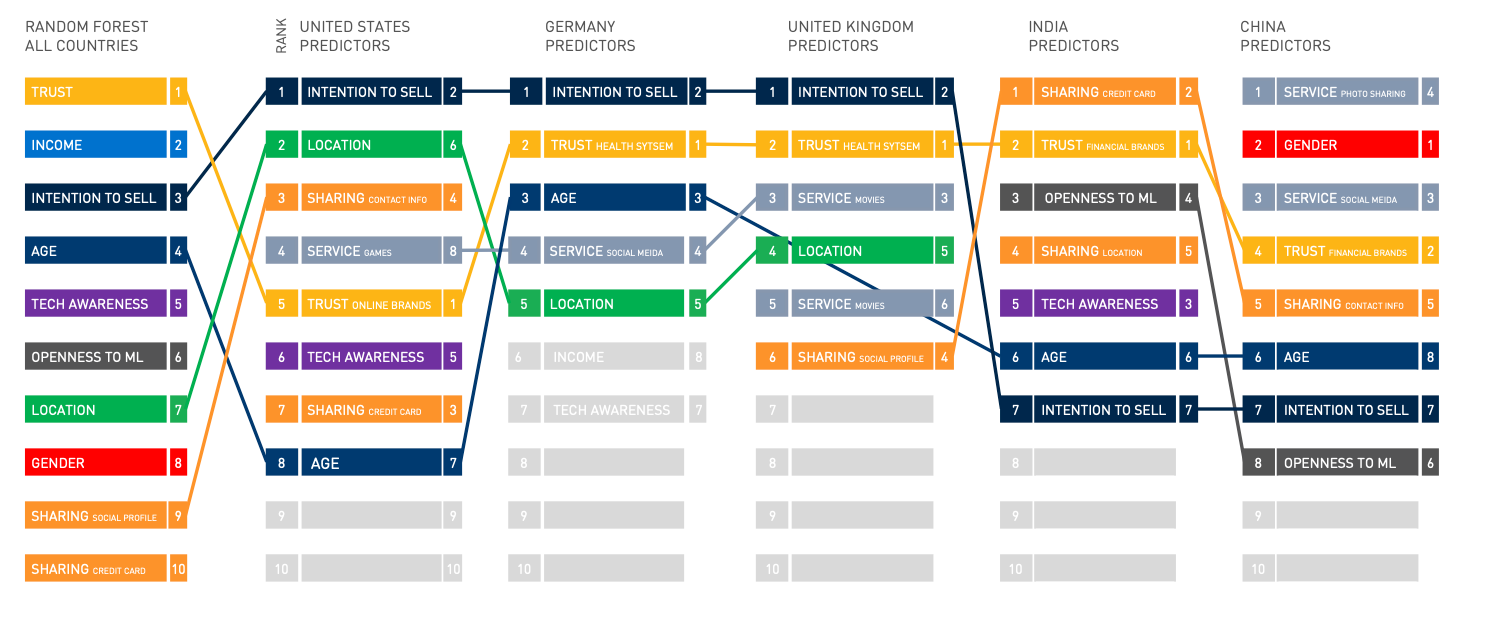

In this study, conducted in 2020 at the University of California, Berkeley, we explore the complex determinants of users’ willingness to share personal data. Using a comprehensive international survey dataset from the service design company Frog, we developed and compared machine learning models to predict data-sharing behaviour. Our hybrid machine learning approach integrates wrapper and embedded methods to identify the most relevant predictive features.

Our findings reveal that the factors influencing personal data sharing are highly individualized and strongly shaped by contextual elements such as geography and cultural background. Critically, the research shows that conventional levers such as monetary incentives do little to explain or predict data-sharing behaviour. The study underscores that individuals often make seemingly irrational decisions regarding personal data. By providing a nuanced understanding of the underlying drivers of willingness to share data, we offer valuable insights for businesses and policymakers seeking to develop more sophisticated, context-aware approaches to data privacy.

Cite this paper as (APA 7th edition): Glinz, D., & Smotra, N. (2020). Predicting the Willingness to Share Personal Data in the Context of Digital Trust. iceberg.digital – a framework for building trust. https://doi.org/10.13140/RG.2.2.34710.05448

Method & Mechanics

Using willingness to accept as a proxy.

Privacy decisions in everyday life typically take one of two forms (Acquisti et al., 2013). First, consumers are frequently asked to disclose personal information in exchange for certain benefits. Second, they encounter opportunities to pay to prevent the disclosure of their personal data. Borrowing economic terminology, our research centres on willingness to accept (WTA): the minimum compensation an individual would require to give up data protection they already possess. This differs from willingness to pay (WTP), the maximum amount a person would spend to acquire data protection they do not currently have.
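One way to make the two definitions precise, using notation of our own rather than the paper’s: let $u(s, w)$ be a respondent’s utility from privacy state $s$ ($P$ if the data stay protected, $D$ if they are disclosed) and wealth $w$. WTA and WTP are then the compensation and the payment that leave the respondent exactly indifferent:

$$u(D,\; w + \mathrm{WTA}) = u(P,\; w) \qquad \text{(endowed with protection, giving it up)}$$

$$u(P,\; w - \mathrm{WTP}) = u(D,\; w) \qquad \text{(not endowed, buying protection)}$$

The two equations start from different endowments, protection already held versus not held, which is where the asymmetry discussed next enters.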

Empirical research reveals a critical nuance: WTA consistently exceeds WTP, primarily driven by psychological phenomena such as loss aversion and the endowment effect (Kahneman & Tversky, 1979; Thaler, 1980). This asymmetry highlights the complexity of valuing personal data beyond traditional economic metrics.

Our approach deliberately moves away from absolute monetary measurements, focusing instead on carefully distinguishing between WTA and WTP. This methodology acknowledges that conventional economic value assessments can be misleading when applied to data privacy.

Binning process.

We construct a WTA proxy variable by categorizing respondents into two groups based on their stated minimum acceptable price for sharing data. Specifically, we compare each respondent’s threshold to the population average to determine whether their WTA is relatively high or low; a high WTA indicates a relatively low willingness to share data. Respondents are then assigned to either a “higher” (top 50%) or “lower” (bottom 50%) WTA category.

This binning process is conducted separately for each country to account for differences in purchasing power. We refer to the resulting aggregated variable as WTA_Construct. The data’s granularity also allows us to calculate these bins across 13 different data types.

We primarily use the average WTA across all data types to simplify the analysis and focus on robust predictors of data-sharing willingness. More details on the construction of this target variable can be found in Section 3.1 of the paper.
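The binning steps above can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not the authors’ code: the input layout (one dict per respondent with a country and per-data-type prices), the function name `build_wta_construct`, and the use of each country’s median as the cut-off are all hypothetical. The median is chosen because it is what guarantees a top-50%/bottom-50% division; the text also mentions the population average, which would be a one-line change.

```python
from statistics import mean, median


# Hypothetical survey layout: one dict per respondent, with the minimum price
# (local currency) they would accept for each data type they were asked about.
def build_wta_construct(respondents):
    """Assign each respondent to a "higher" or "lower" WTA category.

    Assumption: the cut-off is the median of the respondents' average WTA
    within their own country, so each country is split roughly in half.
    Respondents exactly at the median fall into "lower".
    """
    # Average each respondent's WTA across all data types.
    avg_wta = {r["id"]: mean(r["wta"].values()) for r in respondents}

    # Collect the averages per country (binning is done country by country
    # to account for differences in purchasing power).
    by_country = {}
    for r in respondents:
        by_country.setdefault(r["country"], []).append(avg_wta[r["id"]])
    cutoffs = {country: median(values) for country, values in by_country.items()}

    # "higher" = higher WTA, i.e. a lower willingness to share data.
    return {
        r["id"]: "higher" if avg_wta[r["id"]] > cutoffs[r["country"]] else "lower"
        for r in respondents
    }


survey = [
    {"id": 1, "country": "DE", "wta": {"health": 80, "location": 40}},
    {"id": 2, "country": "DE", "wta": {"health": 20, "location": 10}},
    {"id": 3, "country": "IN", "wta": {"health": 900, "location": 500}},
    {"id": 4, "country": "IN", "wta": {"health": 1500, "location": 700}},
]
print(build_wta_construct(survey))
```

Because each country is split against its own cut-off, respondent 3 lands in the “lower” group even though their prices are far above those of the German respondents, which is the point of binning per country.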

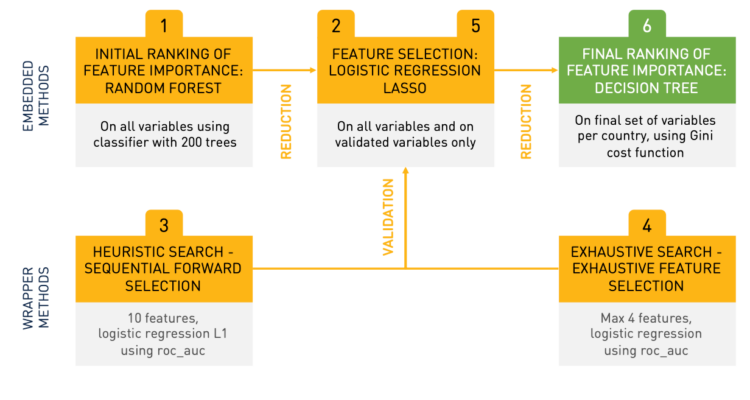

Feature selection and ranking.

The primary goal of this study is to identify the contextual factors that influence individuals’ willingness to relinquish their privacy. By constructing a robust dependent variable, we have mitigated the risk of being misled by the privacy paradox. To uncover the most relevant features in this domain, we employ a hybrid machine learning approach that integrates both embedded and wrapper methods, as illustrated in the figure below.

The process consists of five key steps.
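As a generic illustration of how embedded and wrapper methods are often combined (not a reconstruction of the paper’s five steps), the sketch below takes a feature ranking produced by an embedded method, for instance a tree model’s feature importances, and then runs a greedy wrapper pass that keeps each feature only if re-evaluating the model with it improves a validation score. `hybrid_select`, the `score` callable, and the toy importances and gains are hypothetical placeholders for a real model-training and evaluation routine.

```python
from typing import Callable, Dict, List


def hybrid_select(
    importances: Dict[str, float],
    score: Callable[[List[str]], float],
    min_gain: float = 0.0,
) -> List[str]:
    """Greedy forward selection over features ordered by embedded importance."""
    # Embedded step: take the order from importances a fitted model produced.
    ranked = sorted(importances, key=lambda name: importances[name], reverse=True)

    selected: List[str] = []
    best = score(selected)  # baseline score with no features
    # Wrapper step: evaluate the model with each candidate added and keep it
    # only if the score improves by more than min_gain.
    for feature in ranked:
        candidate = selected + [feature]
        candidate_score = score(candidate)
        if candidate_score - best > min_gain:
            selected, best = candidate, candidate_score
    return selected


# Toy stand-in for "train a model on these features, return validation accuracy".
TOY_GAINS = {"country": 0.12, "trust": 0.08, "age": 0.0, "gender": -0.01}


def toy_score(features: List[str]) -> float:
    return 0.50 + sum(TOY_GAINS[f] for f in features)


importances = {"country": 0.40, "trust": 0.35, "age": 0.15, "gender": 0.10}
print(hybrid_select(importances, toy_score))
```

The embedded ranking keeps the wrapper pass cheap (one model evaluation per feature instead of one per subset), while the wrapper pass guards against keeping features that look important but add nothing once stronger ones are in the model.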

Message

1. Bounded rationality affects data-sharing decisions. The research suggests that privacy valuations tend to be bimodally distributed: some people share data for small amounts, while others demand very high compensation. To address this, we allowed respondents to refuse data access completely and assigned a “penalty price” to no-share responses. Median willingness-to-accept (WTA) values varied by country ($35 in Germany, $15 in the UK, $17 in India) and by data type, with sensitive personal information commanding higher prices.

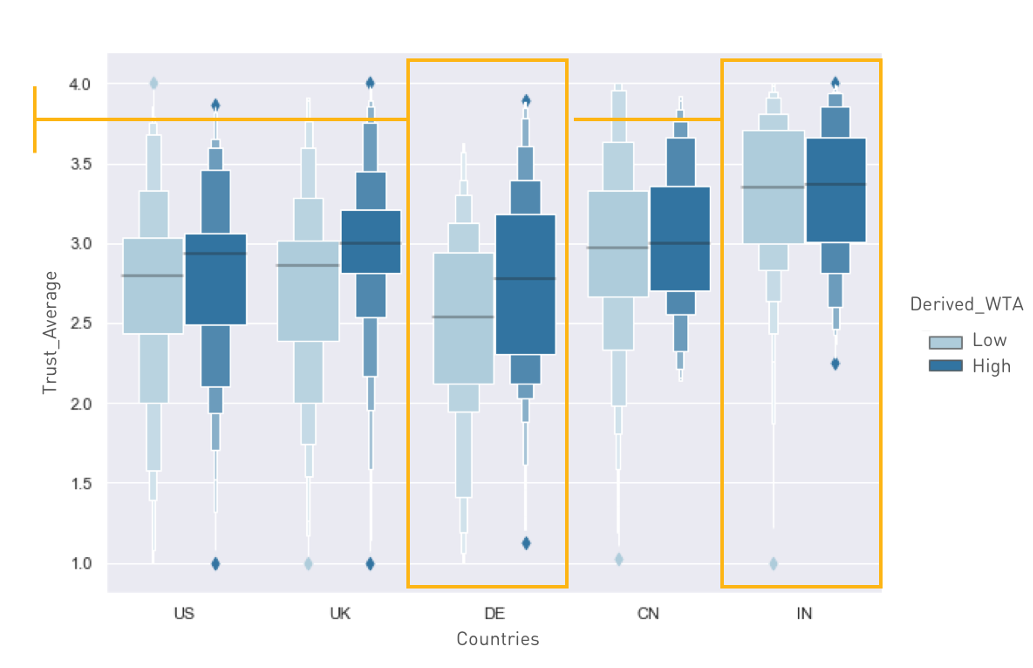
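The penalty-price step in point 1 can be sketched as follows. This is a minimal illustration, not the study’s code: encoding a refusal as `None`, the function name, the penalty value, and the toy answers are all assumptions.

```python
from statistics import median
from typing import Dict, List, Optional, Tuple


def median_wta_by_country(
    answers: List[Tuple[str, Optional[float]]],
    penalty_price: float,
) -> Dict[str, float]:
    """Median WTA per country, with refusals replaced by a penalty price.

    Each answer is (country, price); price is None when the respondent
    refused to share the data at any price (assumed encoding).
    """
    by_country: Dict[str, List[float]] = {}
    for country, price in answers:
        # Keep refusals in the sample instead of dropping them: they are
        # the respondents with the highest valuations.
        value = penalty_price if price is None else price
        by_country.setdefault(country, []).append(value)
    return {country: median(values) for country, values in by_country.items()}


answers = [
    ("DE", 10.0), ("DE", None), ("DE", 30.0),
    ("UK", 4.0), ("UK", 8.0), ("UK", None), ("UK", 12.0),
]
print(median_wta_by_country(answers, penalty_price=1000.0))
```

With these toy answers the medians are 30.0 (DE) and 10.0 (UK); dropping the refusals instead would give 20.0 and 8.0, understating how much respondents value their data. Because the median is robust to extreme values, the exact size of the penalty matters less than including the refusals at all.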

2. Trust reduces complexity in decision-making. In countries with higher institutional trust, there is less distinction between groups with high and low willingness to share data. The research also examined how the intention to sell data to third parties affected sharing decisions, finding that this acted as a “hygiene factor”: while its presence does not necessarily motivate sharing, its absence can prevent it.


3. Willingness to share personal data is strongly tied to institutional trust levels, which vary significantly by country. Germany showed much lower institutional trust than China or India. The odds of being in the high willingness-to-share group decreased by 98.6% for German respondents but increased by 90% for Chinese respondents. This highlights the importance of considering cultural context and social norms in data protection approaches rather than treating data as a commodity.
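Percentage changes in odds like those in point 3 are usually read off a logistic regression, where a coefficient β corresponds to an odds ratio of e^β. Assuming that is how the figures were obtained (the text does not say so, nor what the reference category is), the conversion can be sketched as follows; the function name is our own.

```python
import math
from typing import Tuple


def odds_change_to_coefficient(percent_change: float) -> Tuple[float, float]:
    """Turn a reported % change in odds into (odds ratio, log-odds coefficient)."""
    odds_ratio = 1 + percent_change / 100  # e.g. -98.6 % -> 0.014
    coefficient = math.log(odds_ratio)     # logistic-regression scale
    return odds_ratio, coefficient


for country, change in [("Germany", -98.6), ("China", 90.0)]:
    odds_ratio, beta = odds_change_to_coefficient(change)
    print(f"{country}: odds ratio {odds_ratio:.3f}, coefficient {beta:.2f}")
```

An odds ratio of 0.014 means the odds of being in the high willingness-to-share group are roughly 1/71 of those in the reference category, while 1.9 means they are almost doubled.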

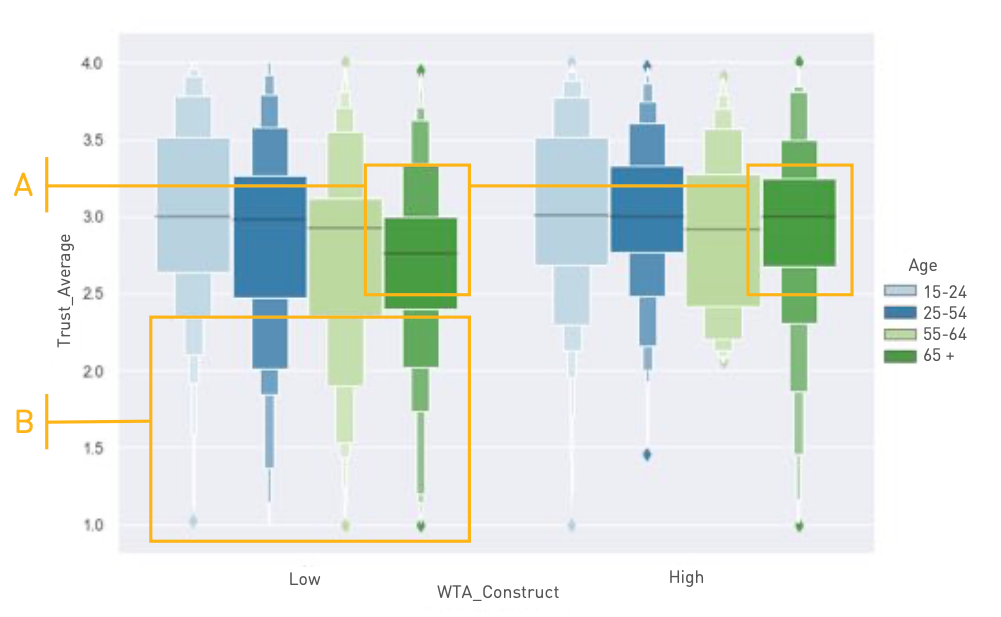

Simple visualisation techniques are invaluable in assessing data quality and uncovering initial research insights. Our analysis of trust across different age groups reveals nuanced patterns of data-sharing behaviours.

A particularly intriguing observation emerges in the relationship between trust and age. In the group that is less likely to share data, average trust consistently declines with age (see marker A in the figure). Conversely, trust levels remain relatively stable in the group that is more willing to share personal information. Notably, Digital Immigrants (individuals aged 65 and above) who are more willing to share data exhibit elevated trust levels compared to their less-sharing counterparts. These findings provide preliminary evidence that generalized trust can significantly facilitate data sharing. However, the group with lower data-sharing inclination displays considerable variance in trusting beliefs, particularly among middle-aged segments (see marker B in the figure).

Critical to our interpretation is the recognition that these patterns are not uniform across different countries. For instance, the observed age-related trust pattern is pronounced in Germany but becomes nearly imperceptible when examining data from the United States.
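Group statistics of the kind behind markers A and B can be computed with a few lines of Python. A minimal sketch, assuming one (sharing group, age group, trust score) tuple per respondent; the group labels, the age bands other than 65+, the trust scale, and the function name are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pvariance


def trust_by_group(rows):
    """Mean and (population) variance of trust per (sharing group, age group).

    rows: iterable of (sharing_group, age_group, trust_score) tuples.
    """
    buckets = defaultdict(list)
    for sharing_group, age_group, trust in rows:
        buckets[(sharing_group, age_group)].append(trust)
    # The mean shows the trend across age bands; the variance shows how
    # dispersed trusting beliefs are within each group.
    return {key: (mean(values), pvariance(values)) for key, values in buckets.items()}


rows = [
    ("less willing", "18-34", 4.0), ("less willing", "18-34", 6.0),
    ("less willing", "65+", 2.0), ("less willing", "65+", 4.0),
    ("more willing", "65+", 6.0), ("more willing", "65+", 6.0),
]
for key, (avg, var) in sorted(trust_by_group(rows).items()):
    print(key, f"mean={avg:.1f}", f"variance={var:.1f}")
```

Running the same grouping separately for each country is what reveals that the age pattern is pronounced in one country and barely visible in another.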

Our analysis of trust and data-sharing behaviours across cultural contexts reveals significant geographical variation. Respondents from the United States, the UK, and Germany in particular take a more stringent approach to data sharing (compare the distance between the small horizontal black lines): they require substantially higher levels of trust before disclosing personal information.

In contrast, individuals from China and India appear less constrained by trust considerations when sharing personal data. This divergence may stem from multiple factors, including:

- Variations in trust variable construction

- The persistent “privacy paradox” phenomenon

- Distinct cultural attitudes towards data privacy and institutional trust

In countries like India, which exhibit high baseline trust levels, individuals might paradoxically require additional incentives to transform their trusting beliefs into concrete actions. This nuanced finding underscores the complex interplay between cultural background, trust, and personal data-sharing behaviours.
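The distance between the horizontal lines in this country comparison can be read as the gap between the mean trust of the more-willing and the less-willing group in each country. A minimal sketch, assuming one (country, sharing group, trust score) tuple per respondent; the group labels, trust scale, toy values, and function name are hypothetical.

```python
from statistics import mean


def trust_gap_by_country(rows):
    """Mean trust of the more-willing group minus that of the less-willing group.

    rows: iterable of (country, sharing_group, trust_score) tuples, where
    sharing_group is "more willing" or "less willing" (hypothetical labels).
    Countries missing either group are skipped.
    """
    groups = {}
    for country, sharing_group, trust in rows:
        groups.setdefault(country, {}).setdefault(sharing_group, []).append(trust)
    return {
        country: mean(g["more willing"]) - mean(g["less willing"])
        for country, g in groups.items()
        if "more willing" in g and "less willing" in g
    }


country_rows = [
    ("DE", "more willing", 7.0), ("DE", "less willing", 3.0),
    ("IN", "more willing", 6.0), ("IN", "less willing", 5.5),
]
print(trust_gap_by_country(country_rows))
```

A large gap, as in the toy DE rows, means respondents share only once trust is high; a small gap, as in the toy IN rows, means trust separates the two groups much less. A full reading would also consider the spread within each group, not only the means.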

We employ a single decision tree to rank individual predictors and analyze each node’s contribution to the classification problem. This non-parametric supervised learning method is intuitive and interpretable, mirroring human decision-making processes. Additionally, the results can be visualized for further clarity.
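Ranking individual predictors by the split they offer can be sketched with a depth-one tree (a decision stump). This is a simplification under our own assumptions rather than the paper’s pipeline: each categorical feature is scored by its best one-vs-rest split at the root, using Gini impurity as the split criterion (described in the next paragraph). The feature names, toy labels, and function names are hypothetical; in practice a library implementation such as scikit-learn’s `DecisionTreeClassifier(criterion="gini")` would grow the full tree.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def gini(labels: Sequence[str]) -> float:
    """Gini impurity of a node: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(values: Sequence[str], labels: Sequence[str]) -> Tuple[str, float]:
    """Best one-vs-rest split of a categorical feature and its weighted Gini."""
    n = len(labels)
    best_category, best_impurity = "", float("inf")
    for category in sorted(set(values)):  # sorted for reproducible tie-breaking
        left = [y for x, y in zip(values, labels) if x == category]
        right = [y for x, y in zip(values, labels) if x != category]
        impurity = len(left) / n * gini(left) + len(right) / n * gini(right)
        if impurity < best_impurity:
            best_category, best_impurity = category, impurity
    return best_category, best_impurity


def rank_features(
    columns: Dict[str, Sequence[str]], labels: Sequence[str]
) -> List[Tuple[str, str, float]]:
    """Rank features by the weighted Gini of their best root split (lower = better)."""
    scored = [(name, *best_split(values, labels)) for name, values in columns.items()]
    return sorted(scored, key=lambda item: item[2])


labels = ["more", "more", "more", "less", "less", "less"]
columns = {
    "country": ["CN", "CN", "IN", "DE", "DE", "US"],
    "age_group": ["18-34", "65+", "18-34", "65+", "18-34", "65+"],
}
for name, category, impurity in rank_features(columns, labels):
    print(f"{name}: split on {category!r}, weighted Gini {impurity:.3f}")
```

On this toy data, `country` ranks ahead of `age_group` because isolating one country leaves a pure child node, whereas no single age band separates the two groups.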

We assess the quality of splits using Gini impurity, which measures the probability that a randomly chosen instance would be misclassified if it were labelled at random according to the class distribution in the node. A perfect split produces child nodes with a Gini impurity of zero, and at each node the feature whose split yields the lowest weighted Gini impurity is chosen. Beyond understanding how multiple features collectively shape outcomes, we aim to determine how a single feature influences decision-making at specific points.
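For reference, the standard (CART-style) definitions, in our notation rather than the paper’s: for a node $S$ whose instances fall into $K$ classes with proportions $p_1, \dots, p_K$, and a candidate split of $S$ into children $S_L$ and $S_R$,

$$G(S) = 1 - \sum_{k=1}^{K} p_k^2$$

$$G_{\text{split}} = \frac{|S_L|}{|S|}\, G(S_L) + \frac{|S_R|}{|S|}\, G(S_R)$$

As a worked example with made-up counts: a node with 3 high-WTS and 3 low-WTS respondents has $G = 1 - (0.5^2 + 0.5^2) = 0.5$. Splitting it into one child with 2 high-WTS respondents and another with 1 high-WTS and 3 low-WTS respondents gives $G(S_L) = 0$, $G(S_R) = 1 - (0.25^2 + 0.75^2) = 0.375$, and $G_{\text{split}} = \tfrac{2}{6} \cdot 0 + \tfrac{4}{6} \cdot 0.375 = 0.25$, so this split halves the impurity.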

References Chapter 5:

Ackermann, K. A., Burkhalter, L., Mildenberger, T., Frey, M., & Bearth, A. (2022). Willingness to share data: Contextual determinants of consumers’ decisions to share private data with companies. Journal of Consumer Behaviour, 21(2), 375-386.

Acquisti, A., Brandimarte, L., & Loewenstein, G. (2015). Privacy and human behavior in the age of information. Science, 347(6221), 509-514.

Acquisti, A., John, L. K., & Loewenstein, G. (2013). What is privacy worth? The Journal of Legal Studies, 42(2), 249-274.

Bansal, G., Zahedi, F. M., & Gefen, D. (2016). Do context and personality matter? Trust and privacy concerns in disclosing private information online. Information & Management, 53(1), 1-21. http://dx.doi.org/10.1016/j.im.2015.08.001

Berners-Lee, T. (2018). One small step for the web… Medium. Available: https://medium.com/@timberners_lee/one-small-step-for-the-web-87f92217d085 [2025, January 31]

Dinev, T., & Hart, P. (2006). An extended privacy calculus model for e-commerce transactions. Information Systems Research, 17(1), 61-80.

Glinz, D., & Smotra, N. (2020). Predicting the Willingness to Share Personal Data in the Context of Digital Trust. iceberg.digital – a framework for building trust. Available: https://iceberg.digital/willingness-to-share-data/ [2025, January 31]

Helbing, D. (2015). Thinking ahead – Essays on big data, digital revolution, and participatory market society. Springer.

Kahneman, D., & Tversky, A. (1979). Prospect theory: An analysis of decision under risk. Econometrica, 47(2), 263-291.

Kosinski, M., Stillwell, D., & Graepel, T. (2013). Private traits and attributes are predictable from digital records of human behavior. Proceedings of the National Academy of Sciences, 110(15), 5802-5805.

Mayer, R. C., Davis, J. H., & Schoorman, F. D. (1995). An integrative model of organizational trust. Academy of Management Review, 20(3), 709-734.

Miltgen, C. L. (2009). Online consumer privacy concern and willingness to provide personal data on the internet. International Journal of Networking and Virtual Organizations, 6(6), 574-603.

Morey, T., Forbath, T., & Schoop, A. (2015). Customer Data: Designing for Transparency and Trust. Harvard Business Review, 93(5), 96–105.

Motahari, S., Ziavras, S., Naaman, M., Ismail, M., & Jones, Q. (2009). Social inference risk modeling in mobile and social applications. In International Conference on Computational Science and Engineering (Vol. 3, pp. 125-132). IEEE.

Norberg, P. A., Horne, D. R., & Horne, D. A. (2007). The privacy paradox: Personal information disclosure intentions versus behaviors. Journal of Consumer Affairs, 41(1), 100-126.

Thaler, R. H. (1980). Toward a positive theory of consumer choice. Journal of Economic Behavior & Organization, 1(1), 39-60.

Winegar, A. G., & Sunstein, C. R. (2019). How much is data privacy worth? A preliminary investigation. Journal of Consumer Policy, 42, 425-440.